Classification Prediction | Data Classification with a DRN Deep Residual Network in Matlab


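Contents

- Classification Prediction | Data Classification with a DRN Deep Residual Network in Matlab

- Classification Results

- Basic Introduction

- Program Design

- References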

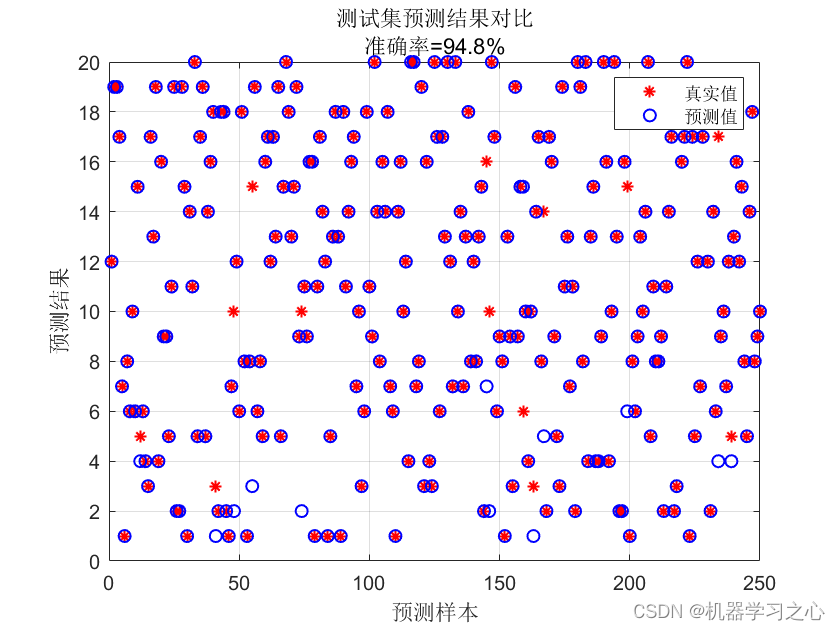

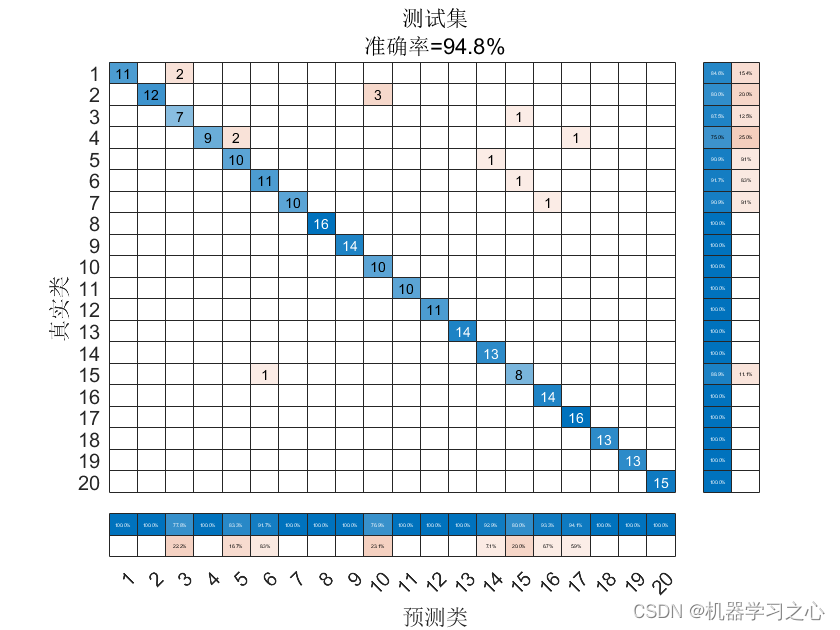

分类效果

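Basic Introduction

1. A Matlab implementation of data classification prediction with a DRN (deep residual network), including complete source code and data. It requires Matlab 2023 or later.

2. The model takes multiple input features and produces a single output, and it handles both binary and multi-class classification. The program is commented in detail; replace the Excel data with your own and it is ready to run.

3. The program is written in Matlab and produces a classification result plot, an iterative optimization plot, and a confusion matrix plot.

4. Code characteristics: parameterized programming, parameters that are easy to change, a clear programming approach, and detailed comments.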
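
Item 2 above says the program works once the Excel data is swapped in, but the expected layout of that data is not described here. As a rough sketch only, assuming one sample per row with the class label in the last column, the data could be prepared for an image-style input layer as follows. The file name `data.xlsx`, the 70/30 split, the min-max scaling, and every variable name except `inputshape` (which appears in the program excerpt) are assumptions made for illustration. Synthetic data stands in for the spreadsheet so that the snippet runs on its own.

```matlab
% Sketch only: data layout, file name, and split ratio are assumptions,
% not the author's code.
% res = readmatrix("data.xlsx");          % hypothetical file name
rng(0);                                   % reproducible synthetic stand-in
res = [rand(100, 12), randi(3, 100, 1)];  % 12 features + 1 label column

numSamples  = size(res, 1);
numFeatures = size(res, 2) - 1;

% Shuffle the rows, then split 70/30 into training and test sets
idx      = randperm(numSamples);
numTrain = round(0.7 * numSamples);
numTest  = numSamples - numTrain;
trainIdx = idx(1:numTrain);
testIdx  = idx(numTrain+1:end);

X = res(:, 1:numFeatures);
Y = categorical(res(:, end));

% Scale each feature to [0, 1] using training-set statistics only
xMin   = min(X(trainIdx, :), [], 1);
xMax   = max(X(trainIdx, :), [], 1);
xRange = max(xMax - xMin, eps);           % guard against constant columns
Xn     = (X - xMin) ./ xRange;

% An image input layer expects height x width x channels x samples,
% so each sample becomes a numFeatures x 1 x 1 "image"
inputshape = [numFeatures 1 1];
XTrain = reshape(Xn(trainIdx, :)', [inputshape numTrain]);
XTest  = reshape(Xn(testIdx,  :)', [inputshape numTest]);
YTrain = Y(trainIdx);
YTest  = Y(testIdx);
```

Scaling with training-set statistics keeps information from the test set out of preprocessing; the test features may therefore fall slightly outside [0, 1].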

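Program Design

- To obtain the complete program and data, download "DRN deep residual network data classification prediction in Matlab (complete source code and data)" directly from the resources section.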
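
The excerpt that follows adds layer branches with `addLayers` but, as printed, stops before the branches are connected to each other. As a hedged illustration of that wiring step, the self-contained sketch below builds one small residual block and joins its main branch and identity skip connection at an addition layer with `connectLayers`. It requires Deep Learning Toolbox. The layer names, filter sizes, and the 12-feature input are assumptions chosen for brevity and are not taken from the downloadable program.

```matlab
% Sketch only: names and sizes are illustrative assumptions,
% not the full network from the download.
inputshape = [12 1 1];   % hypothetical: 12 features as a 12x1x1 "image"

lgraph = layerGraph();

% Stem: the layers before the block splits into two paths
stem = [
    imageInputLayer(inputshape, "Name", "input")
    convolution2dLayer([3 1], 16, "Name", "conv_in", "Padding", "same")
    batchNormalizationLayer("Name", "bn_in")
    reluLayer("Name", "relu_in")];
lgraph = addLayers(lgraph, stem);

% Main branch F(x): two convolutions that keep the activation size unchanged
mainBranch = [
    convolution2dLayer([3 1], 16, "Name", "conv_a", "Padding", "same")
    batchNormalizationLayer("Name", "bn_a")
    reluLayer("Name", "relu_a")
    convolution2dLayer([3 1], 16, "Name", "conv_b", "Padding", "same")
    batchNormalizationLayer("Name", "bn_b")];
lgraph = addLayers(lgraph, mainBranch);

% Join point: element-wise sum F(x) + x, followed by a ReLU
joinLayers = [
    additionLayer(2, "Name", "add")
    reluLayer("Name", "relu_out")];
lgraph = addLayers(lgraph, joinLayers);

% Layers inside each array are already chained; only the links
% between the arrays have to be added by hand
lgraph = connectLayers(lgraph, "relu_in", "conv_a");   % into the main branch
lgraph = connectLayers(lgraph, "bn_b", "add/in1");     % F(x)
lgraph = connectLayers(lgraph, "relu_in", "add/in2");  % identity skip, x

disp(lgraph.Connections)
```

Because the skip path here is an identity, both inputs of the addition layer must have the same size, which is why the main branch keeps 16 channels and uses "same" padding. When the channel count changes across a block, a 1x1 convolution on the skip path is a common way to match the sizes.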

    %% Clear the environment
    warning off   % Turn off warning messages
    close all     % Close any open figure windows
    clear         % Clear workspace variables
    clc           % Clear the command window

    % Note: inputshape is not defined in this excerpt; it must be set
    % before the layers below are built.
    lgraph = layerGraph();

    %% Add Layer Branches
    % Add the branches of the network to the layer graph. Each branch is a linear
    % array of layers.
    tempLayers = [
        imageInputLayer(inputshape,"Name","input")
        convolution2dLayer([7 7],64,"Name","conv1","Padding",[3 3 3 3],"Stride",[2 2])
        batchNormalizationLayer("Name","bn_conv1","Epsilon",0.001)
        reluLayer("Name","activation_1_relu")
        maxPooling2dLayer([3 3],"Name","max_pooling2d_1","Padding",[1 1 1 1],"Stride",[2 2])];
    lgraph = addLayers(lgraph,tempLayers);

    tempLayers = [
        convolution2dLayer([1 1],256,"Name","res2a_branch1","BiasLearnRateFactor",0)
        batchNormalizationLayer("Name","bn2a_branch1","Epsilon",0.001)];
    lgraph = addLayers(lgraph,tempLayers);

    tempLayers = [
        convolution2dLayer([1 1],64,"Name","res2a_branch2a","BiasLearnRateFactor",0)
        batchNormalizationLayer("Name","bn2a_branch2a","Epsilon",0.001)
        reluLayer("Name","activation_2_relu")
        convolution2dLayer([3 3],64,"Name","res2a_branch2b","BiasLearnRateFactor",0,"Padding","same")
        batchNormalizationLayer("Name","bn2a_branch2b","Epsilon",0.001)
        reluLayer("Name","activation_3_relu")
        convolution2dLayer([1 1],256,"Name","res2a_branch2c","BiasLearnRateFactor",0)
        batchNormalizationLayer("Name","bn2a_branch2c","Epsilon",0.001)];
    lgraph = addLayers(lgraph,tempLayers);

    tempLayers = [
        additionLayer(2,"Name","add_1")
        reluLayer("Name","activation_4_relu")];
    lgraph = addLayers(lgraph,tempLayers);

References

[1] http://t.csdn.cn/pCWSp

[2] https://download.csdn.net/download/kjm13182345320/87568090?spm=1001.2014.3001.5501

[3] https://blog.csdn.net/kjm13182345320/article/details/129433463?spm=1001.2014.3001.5501

