Unity Render Streaming: A Cloud Rendering Solution for Enterprise Projects

文章目录

- 前言

- 效果展示

- 打开场景

- RenderStreaming下载

- Web服务器

- 1.Server下载,Node.js安装

- 2.Server启动

- Unity项目设置

- 1.安装RenderStreaming

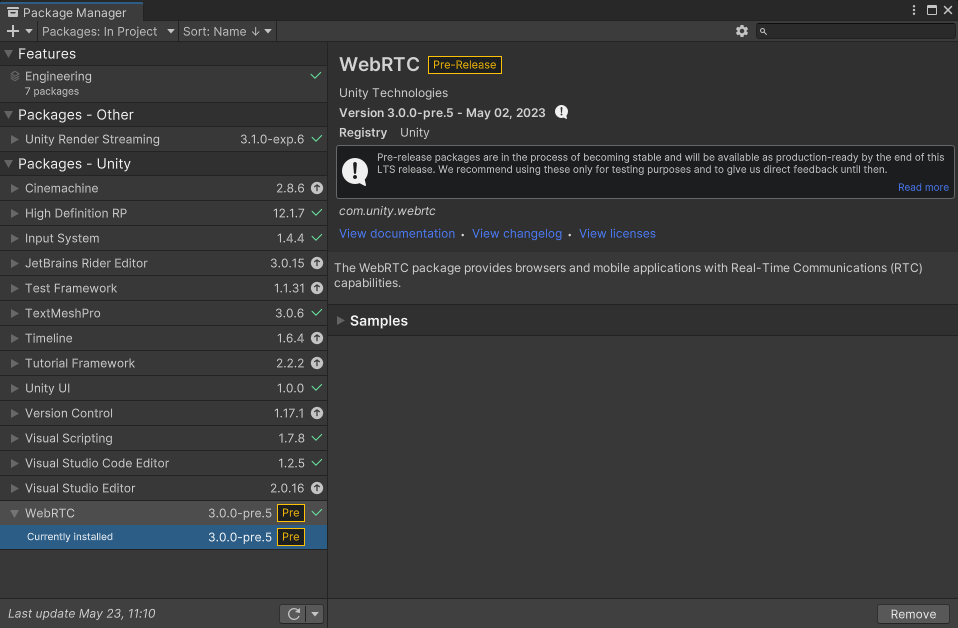

- 2.安装WebRTC

- 3.RenderStreaming设置

- 4.音频传输(根据自己需求添加)

- 5.Unity启动测试

- 交互

- 1.场景交互设置

- 2.键鼠交互

- 3.UI交互(先修改这一点,需要写一些代码,下面参考安卓IOS里的内容)

- 外网服务器部署

- 自定义WebServer

- Web和Unity相互发自定义消息

- 1.Unity向Web发送消息

- 2.web向Unity发送消息

- 企业项目如何应用

- 1.外网访问清晰和流畅之间的抉择

- 2.web制作按钮来替换Unity的按钮操作

- 3.分辨率同步,参考下面iOS、Android中的分辨率同步方式

- 4.手机端访问默认竖屏显示横屏画面注意事项

- 5.检测Unity端是否断开服务

- 6.获取是否有用户连接成功

- iOS、Android端如何制作

- 1.参考Sample中的Receiver场景

- 2.自定义Receiver场景内容

- 3.创建管理脚本

- 4.客户端收到ScreenMessage后的屏幕自适应处理

- 5.发布前的项目设置

- 6.发布测试

- 已知问题

Preface

Article link

Official UnityRenderStreaming documentation

Unity: 2021.3.8f1c1

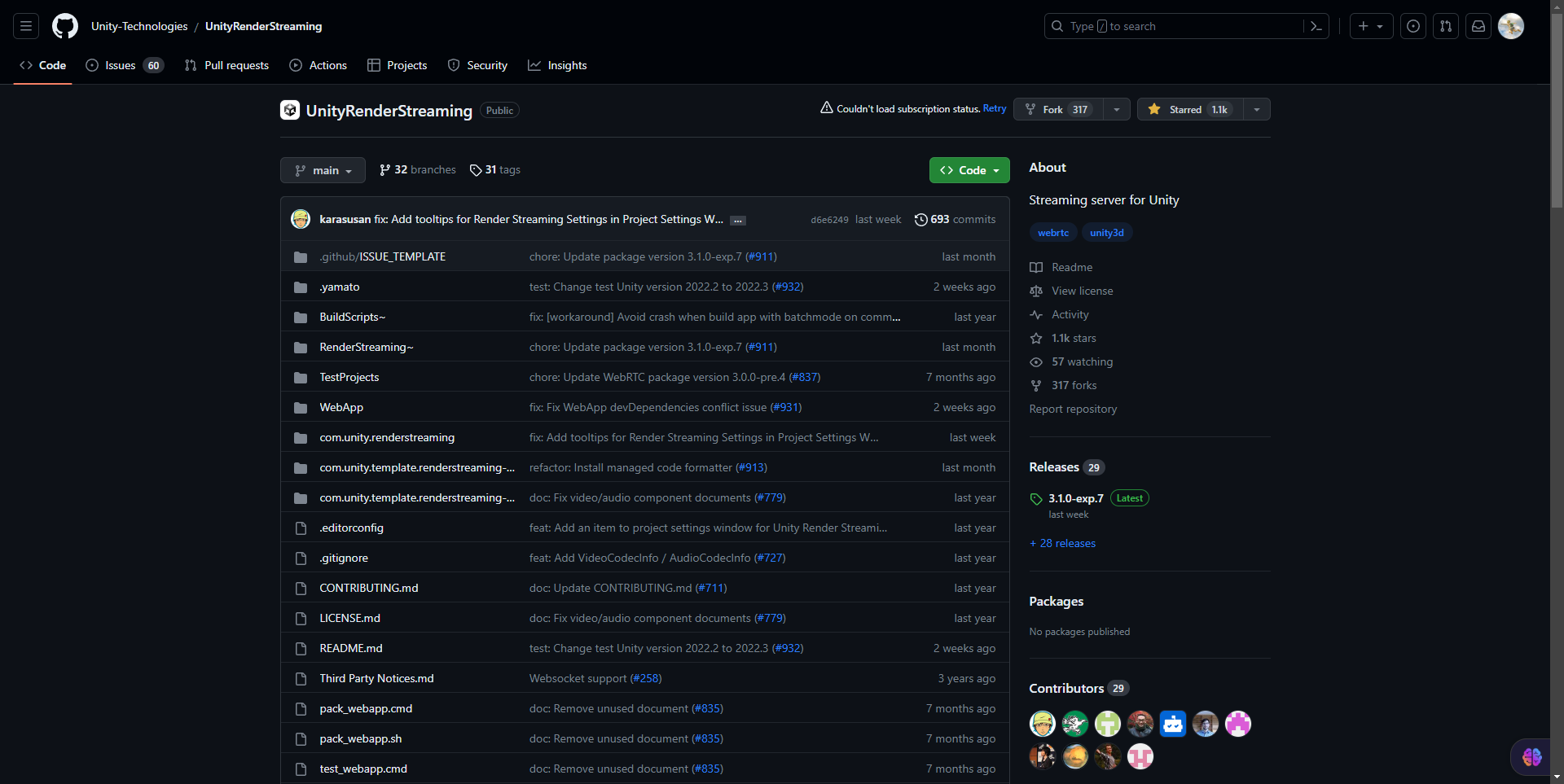

RenderStreaming: 3.1.0-exp.7 (Latest)

RenderStreaming WebServer: 3.1.0-exp.7 (Latest)

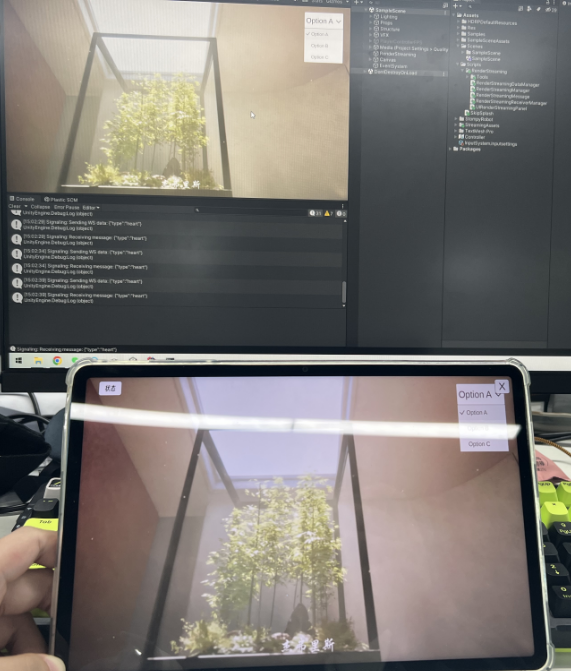

Demo

UnityRenderStreaming

Result over the public internet

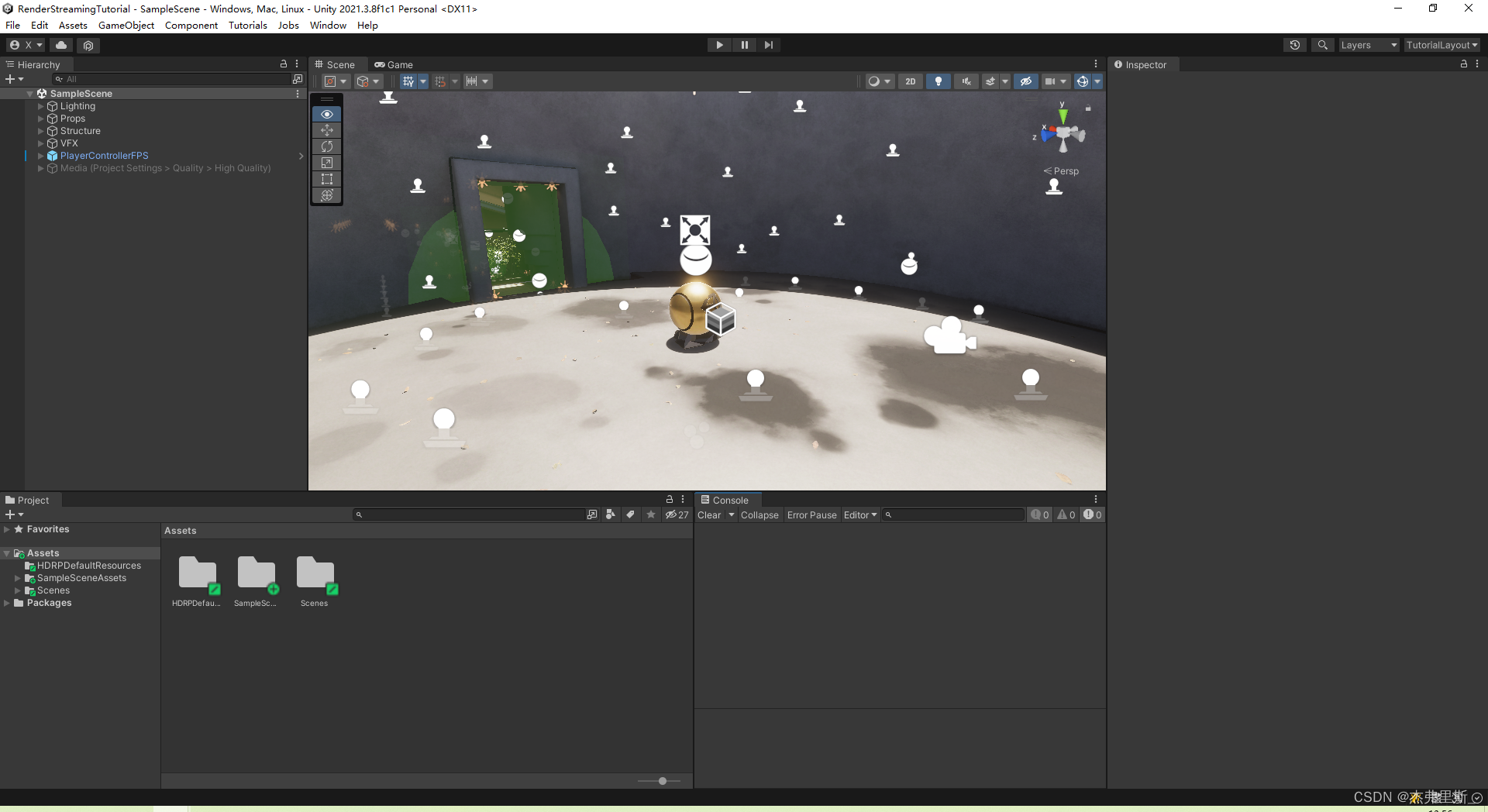

Opening the scene

Use the HDRP sample template as the test scene.

Downloading RenderStreaming

Git repository: UnityRenderStreaming

Web服务器

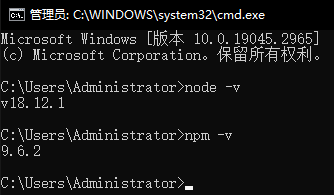

1.Server下载,Node.js安装

根据自己的平台选择Server,需要安装Node.js下载

使用node -v npm -v检查是否安装成功

2.Server启动

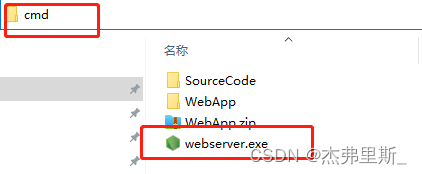

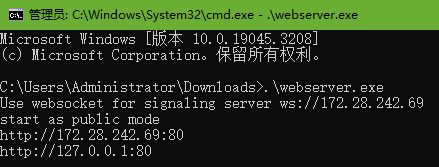

Windows启动方式如下(其他平台启动方式参考官方文档)

找到webserver.exe,地址栏中输入cmd,按下Enter

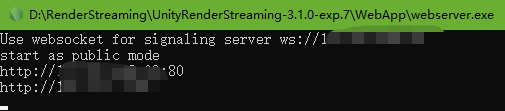

双击或者输入.\webserver.exe,以WebSocket方式启动

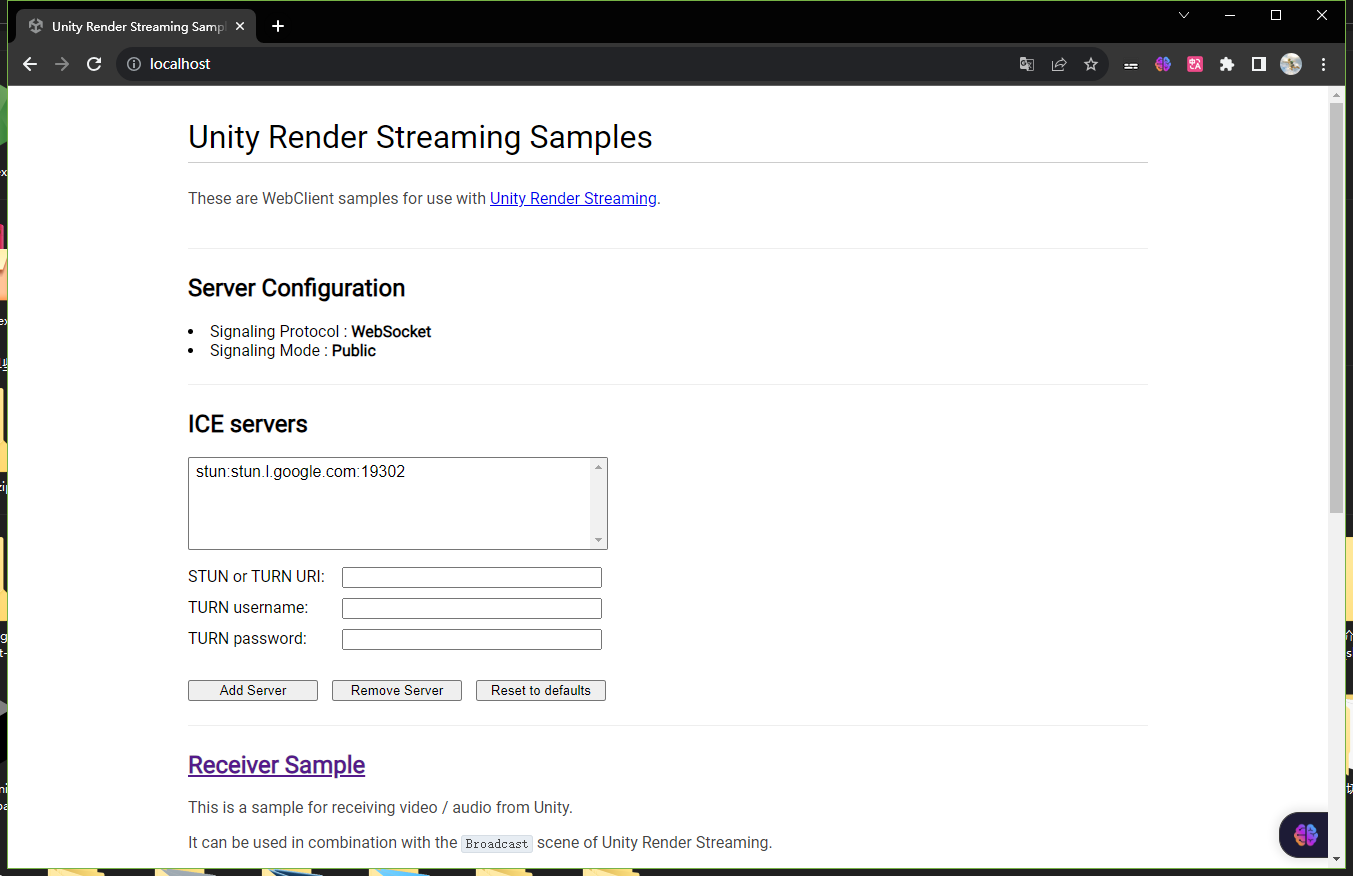

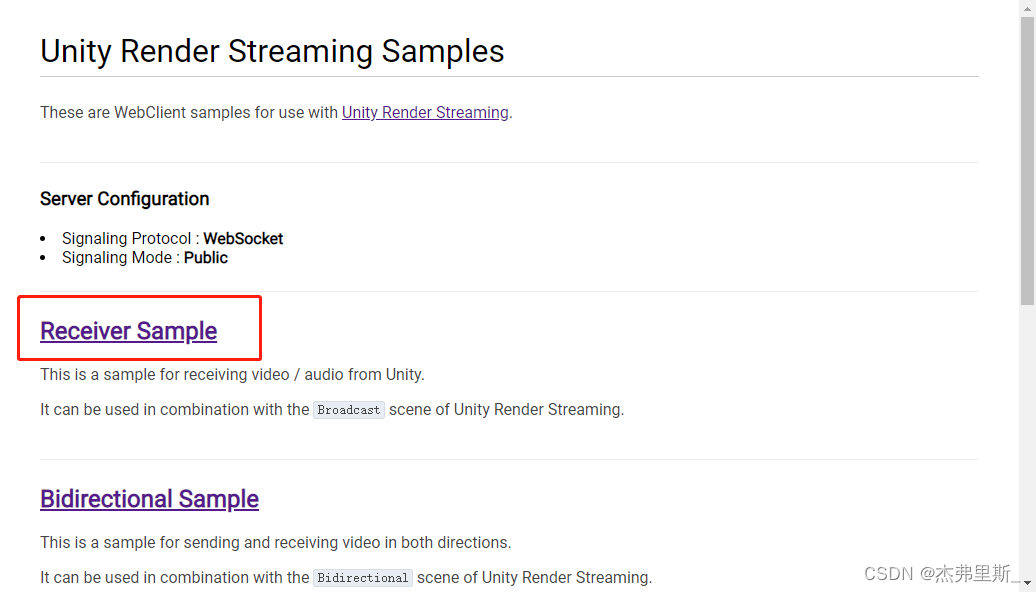

打开浏览器,输入本机IP,下图就是以WebSocket启动后的内容

Unity项目设置

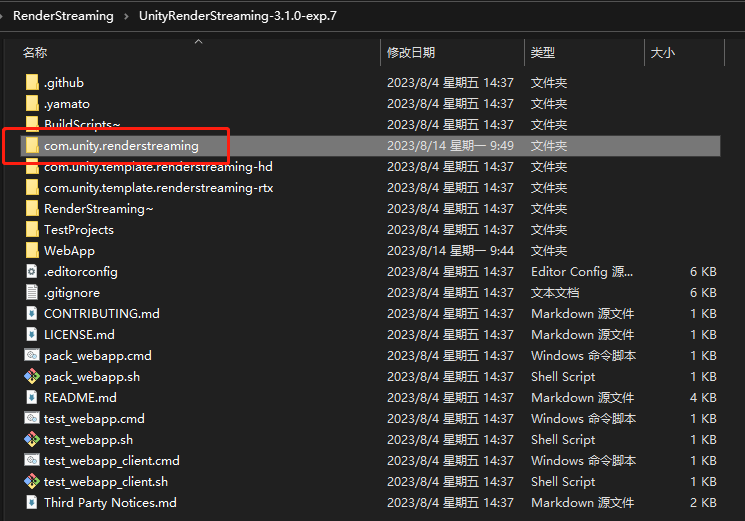

1.安装RenderStreaming

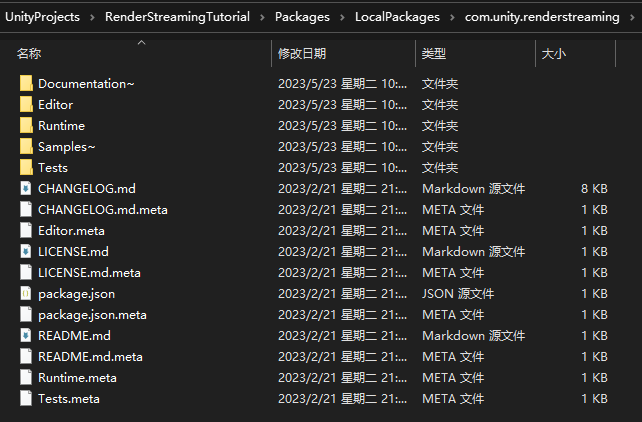

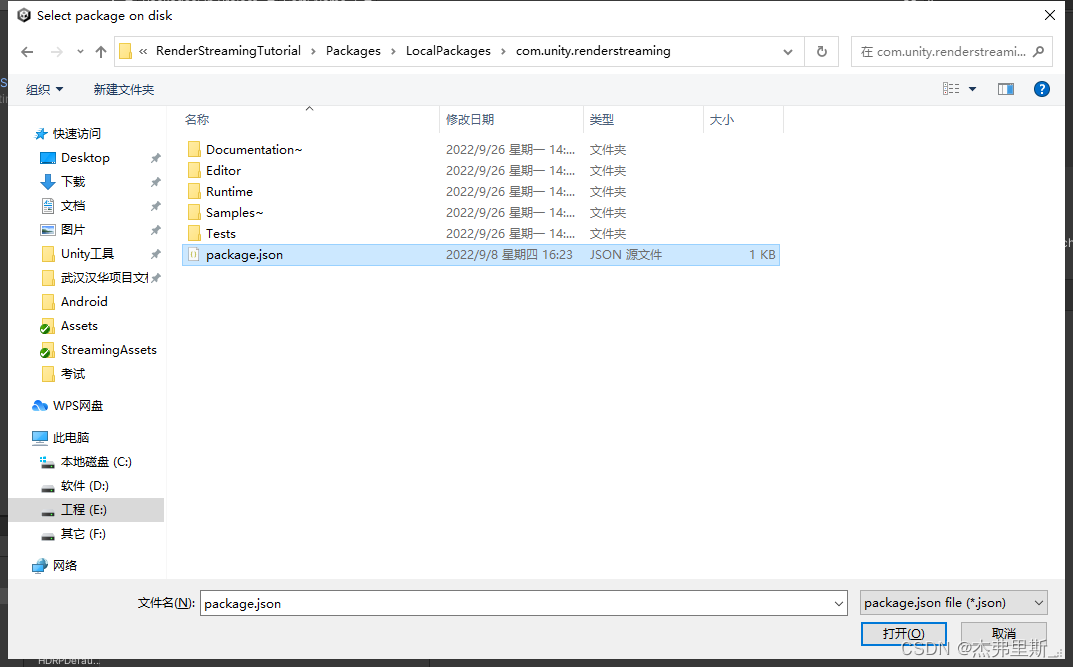

源码解压后,复制com.unity.renderstreaming文件,我这边放在了示例项目的Packages下,新建一个文件夹内。(根据自己的喜好存放)

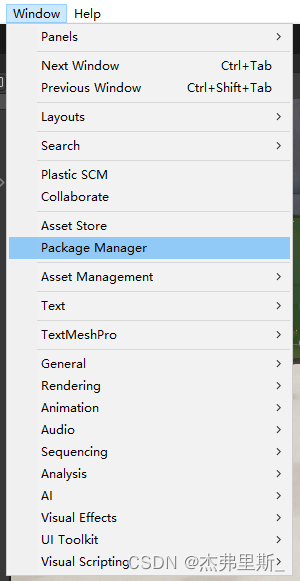

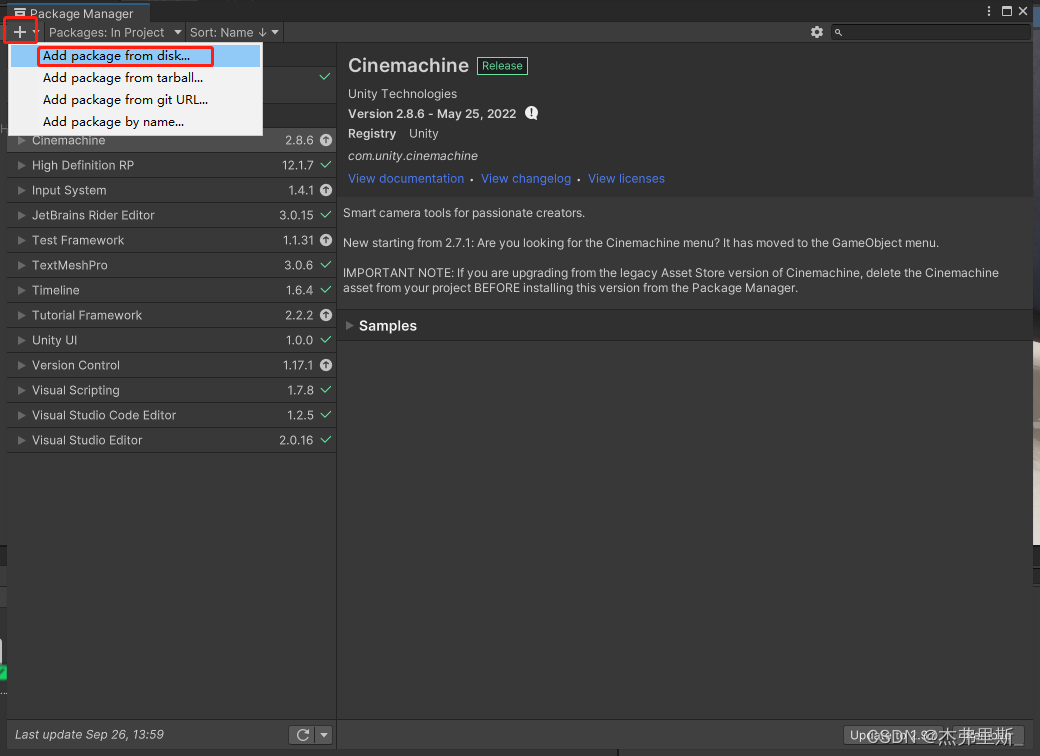

打开PackageManager

点击+ ,选择Add package from disk

找到刚才的文件中package.json,打开

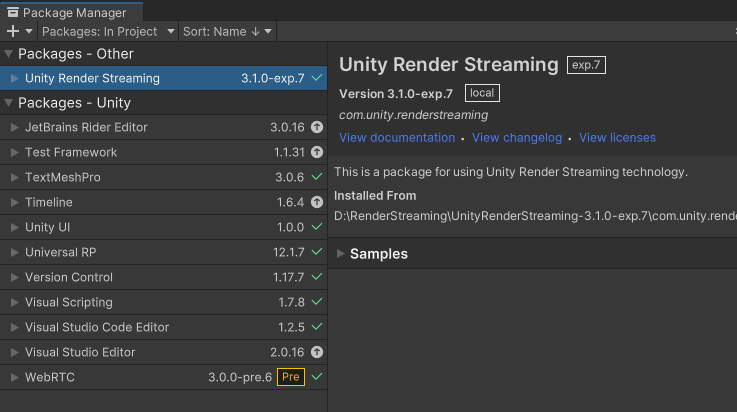

安装后如下

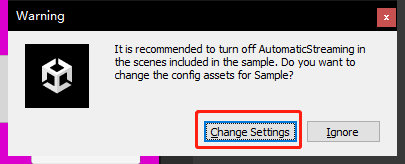

选择ChangeSettings

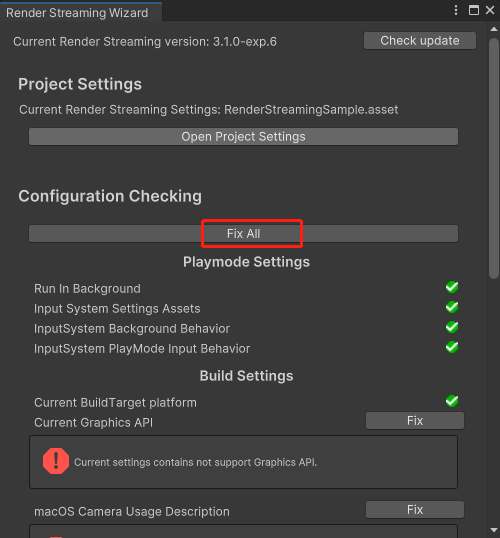

选择FixAll

2.安装WebRTC

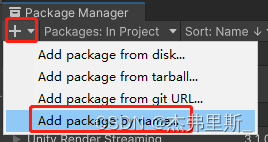

选择Add package by name

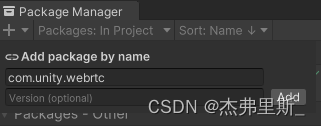

输入com.unity.webrtc,点击Add

安装后如下:

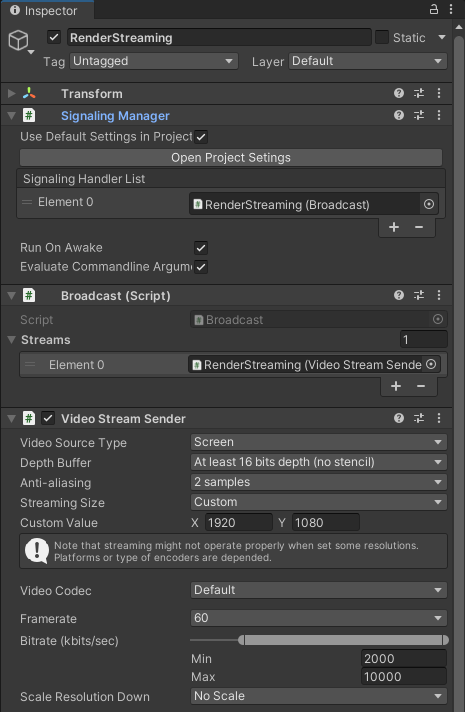

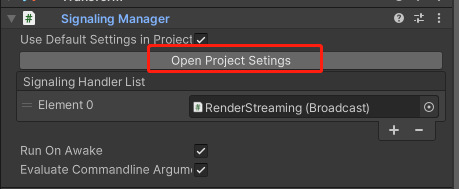

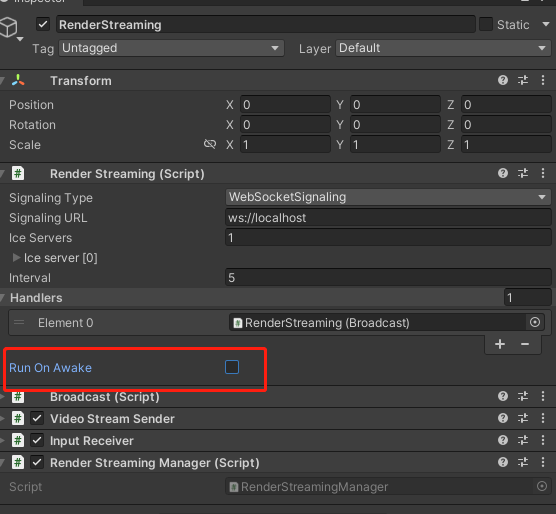

3. RenderStreaming settings

Create an empty GameObject and name it RenderStreaming.

Add the SignalingManager, Broadcast, and VideoStreamSender components.

Set the parameters as follows:

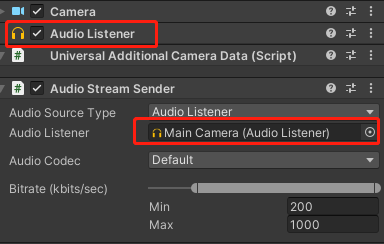

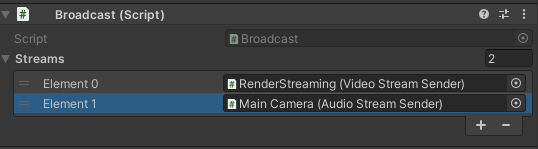

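4. Audio streaming (add if needed)

Add the AudioStreamSender component to the GameObject that holds the AudioListener; the two components must be on the same GameObject.

Remember to register the AudioStreamSender in the Broadcast component.

5. Testing in Unity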

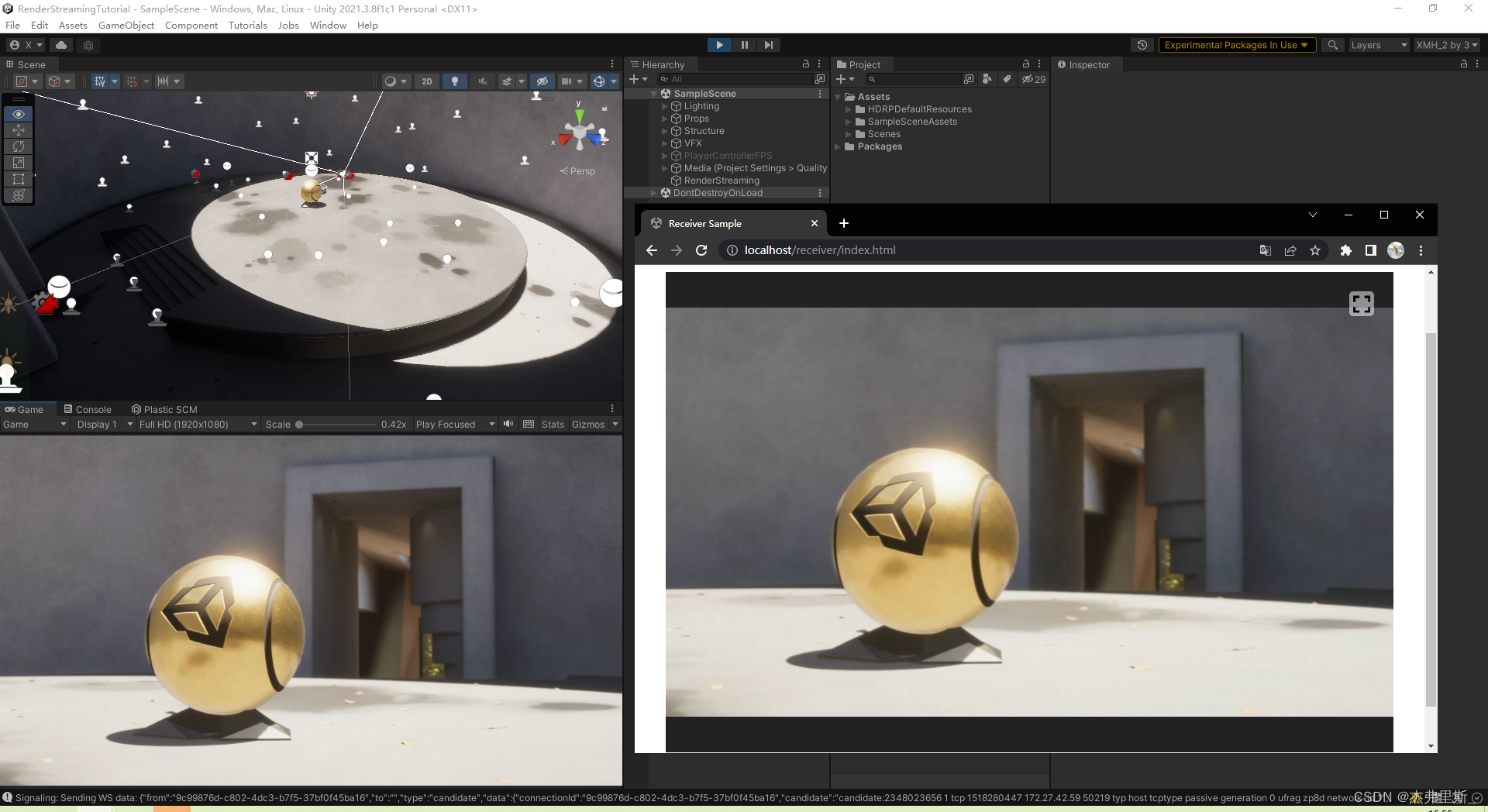

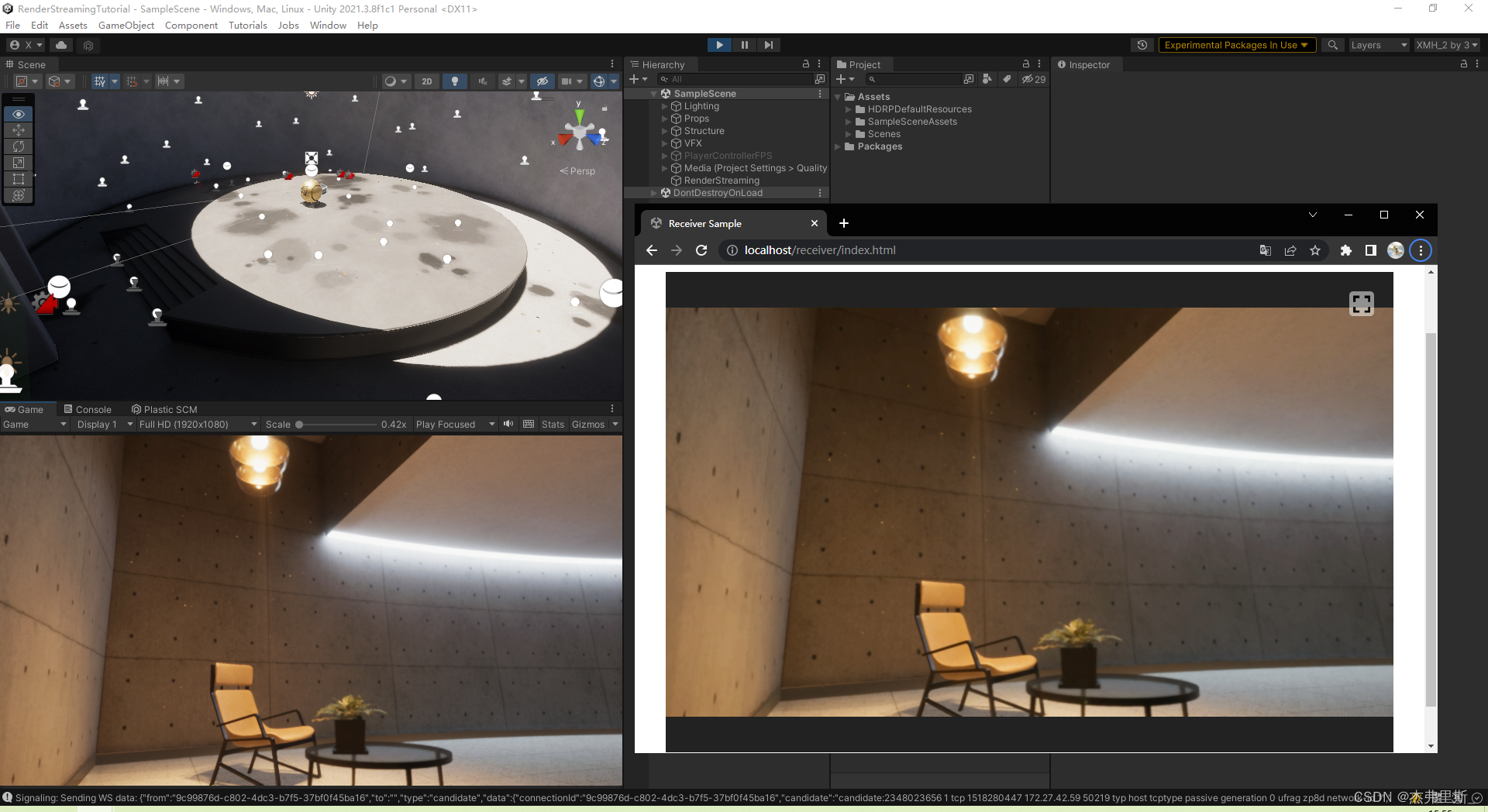

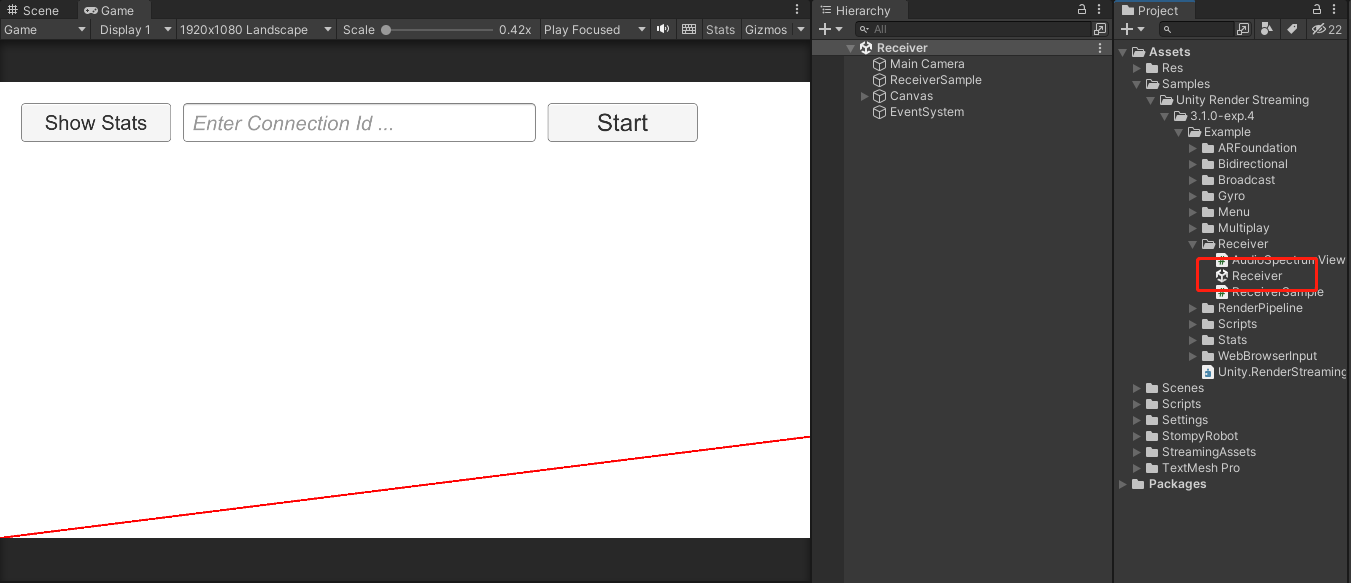

选择ReceiverSample

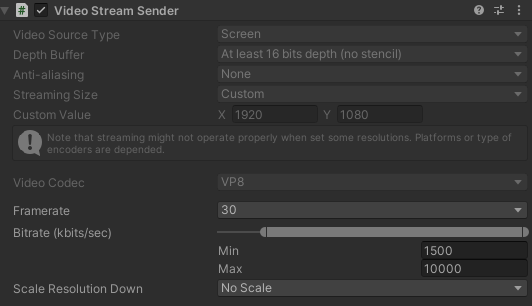

效果如下,可根据自己的需求去调整VideoStreamSender中的各项参数

Interaction

1. Scene interaction setup

See the official documentation.

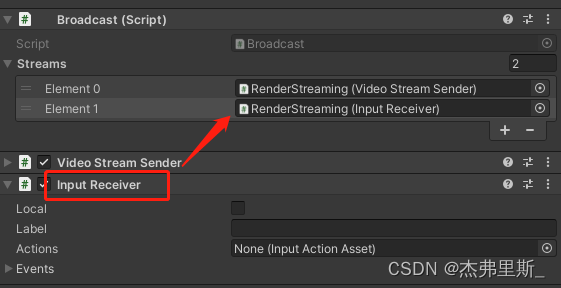

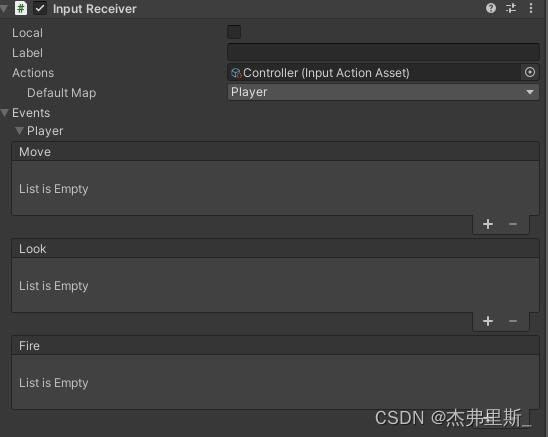

添加InputReceiver组件,并添加到Broadcast中

2.键鼠交互

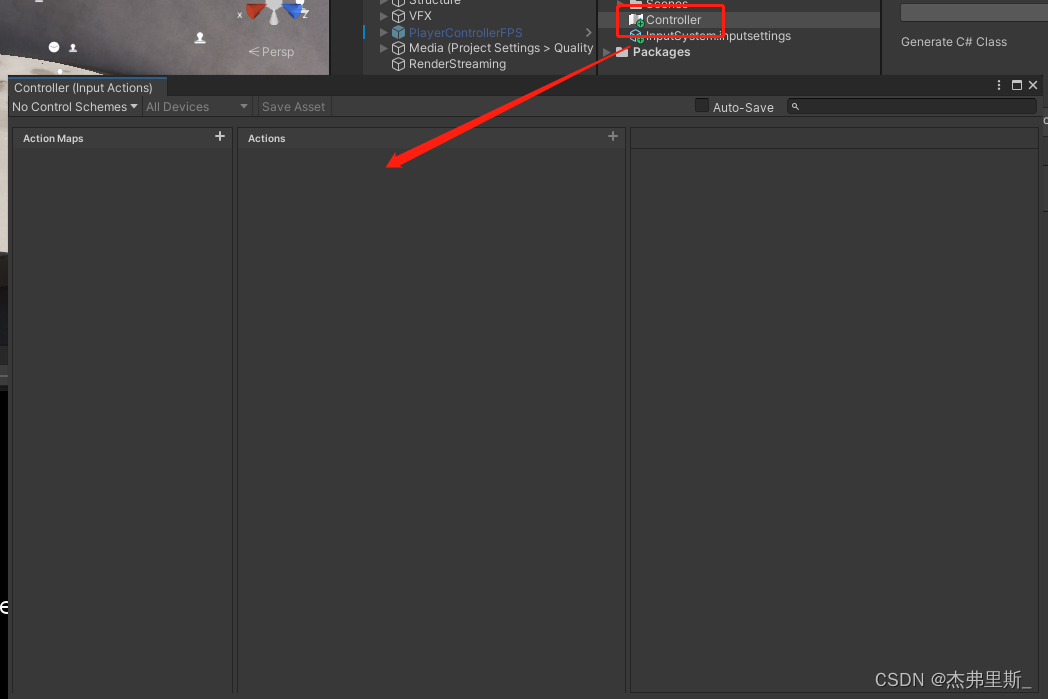

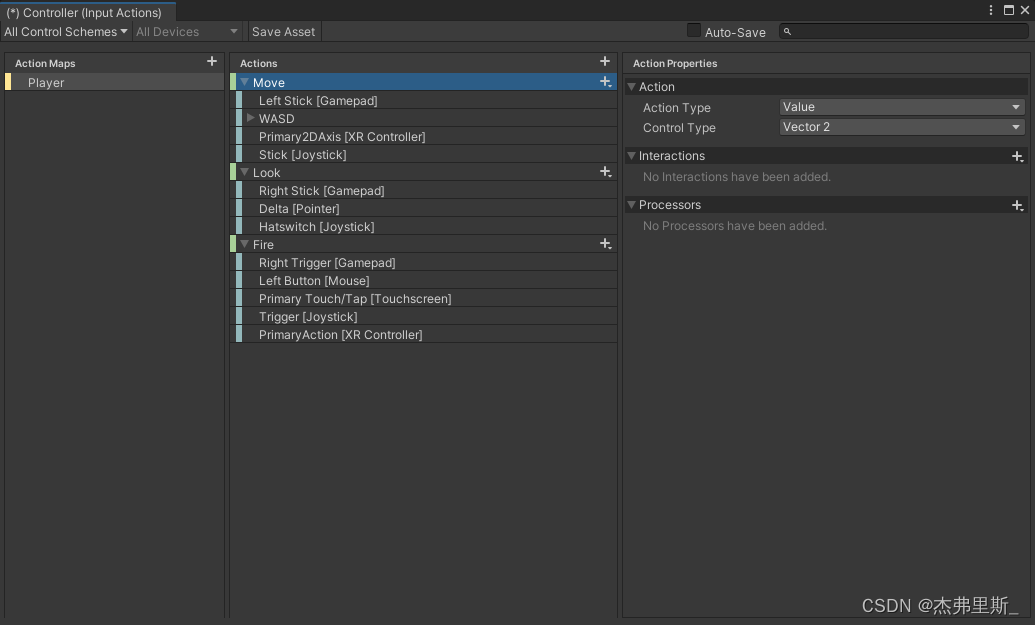

创建InputActions后打开,操作:Create/InputActions

根据自己的项目需求配置Actions

将配置好的额InputActions文件拖入InputReceiver/Actions中,展开Events,可以看到配置的Action对应的事件,根据自己的需求去绑定

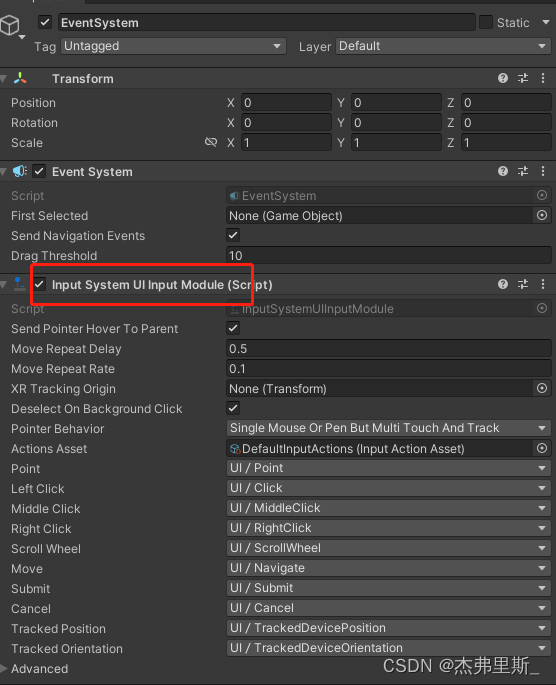

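3. UI interaction (change this first; it needs some code, see the Android and iOS section below)

Set the EventSystem to use InputSystemUIInputModule.

Public server deployment

In most WebRTC applications a server has to relay traffic between peers, because a direct socket between clients is usually not possible unless they are on the same local network. The common solution is a TURN server. TURN stands for Traversal Using Relays around NAT and is a protocol for relaying network traffic.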

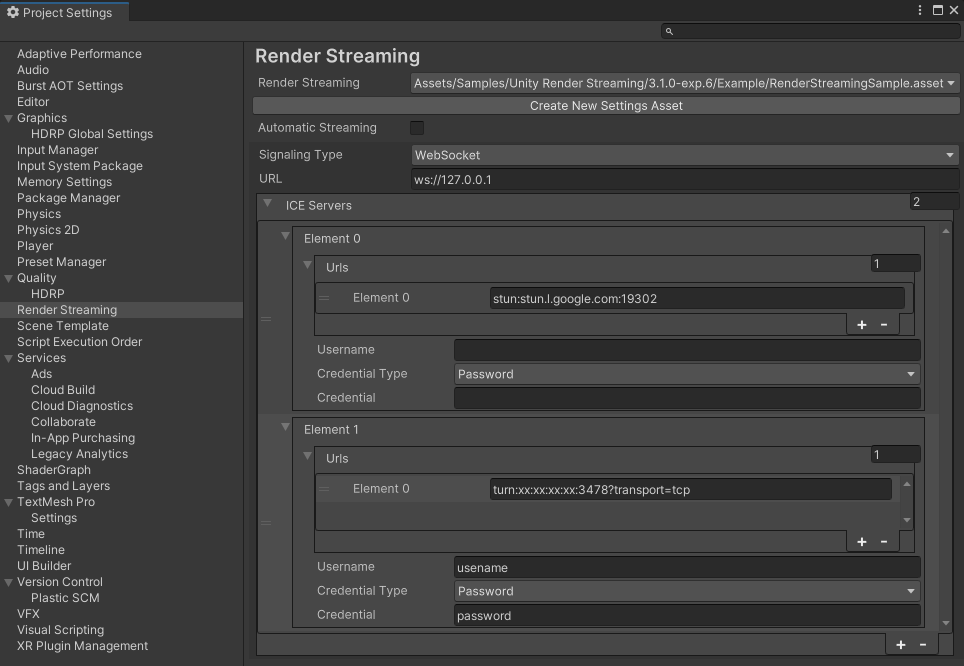

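See the TURN server setup section of the Unity documentation.

The ports used by the TURN server must be open to the public; the minimum and maximum of the port range are configurable.

| Protocol | Ports |
| --- | --- |
| TCP | 32355-65535, 3478-3479 |
| UDP | 32355-65535, 3478-3479 |

On the web side, change config.iceServers in config.js:

```javascript
config.iceServers = [
  { urls: ['stun:stun.l.google.com:19302'] },
  {
    urls: ['turn:xx.xx.xx.xx:3478?transport=tcp'],
    username: 'username',
    credential: 'password'
  }
];
```

In Unity, change Ice Server on the RenderStreaming object: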

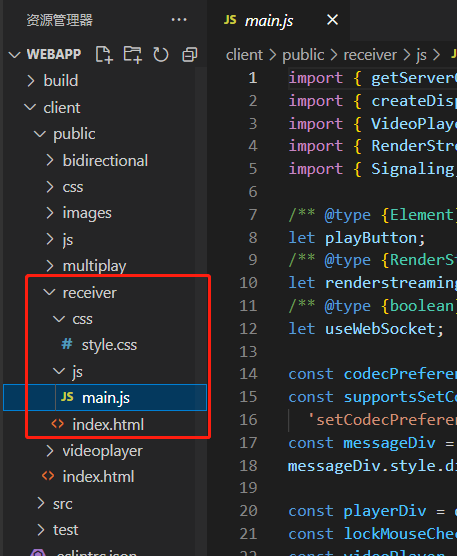
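The config.iceServers value for the web side can also be assembled from deployment settings, which makes it harder to ship a TURN entry without credentials. The following is a minimal sketch in plain JavaScript; buildIceServers and its parameters are hypothetical names that are not part of the official web client, and the host and credentials are placeholders.

```javascript
// Hypothetical helper (not part of the official web client): builds the
// array assigned to config.iceServers from a TURN host and credentials.
function buildIceServers(turnHost, username, credential, port = 3478) {
  if (!turnHost || !username || !credential) {
    throw new Error('TURN host, username and credential are all required');
  }
  return [
    // Public STUN server, as in the configuration shown earlier.
    { urls: ['stun:stun.l.google.com:19302'] },
    // TURN relay over TCP on the port opened in the firewall.
    {
      urls: [`turn:${turnHost}:${port}?transport=tcp`],
      username,
      credential,
    },
  ];
}

// Usage: the result has the shape RTCPeerConnection expects for iceServers.
const iceServers = buildIceServers('xx.xx.xx.xx', 'username', 'password');
console.log(JSON.stringify(iceServers, null, 2));
```

The Unity side needs the same TURN URL, username, and credential so that both peers can reach the relay.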

自定义WebServer

下面是如何制作自己的WebServer,更多详情查看官方文档

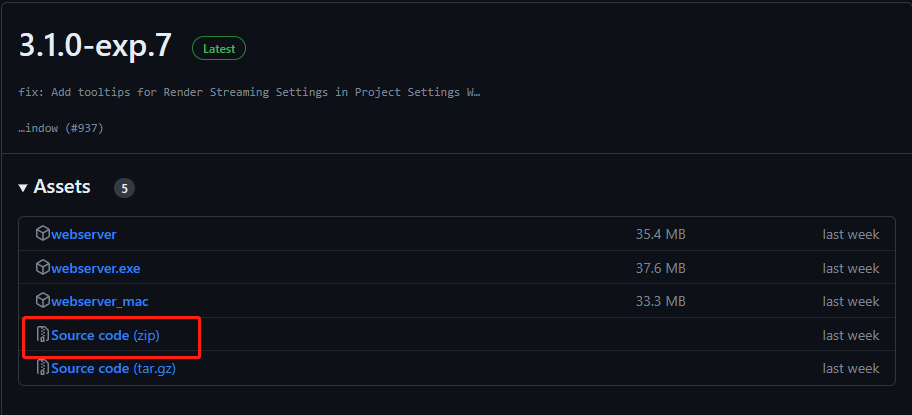

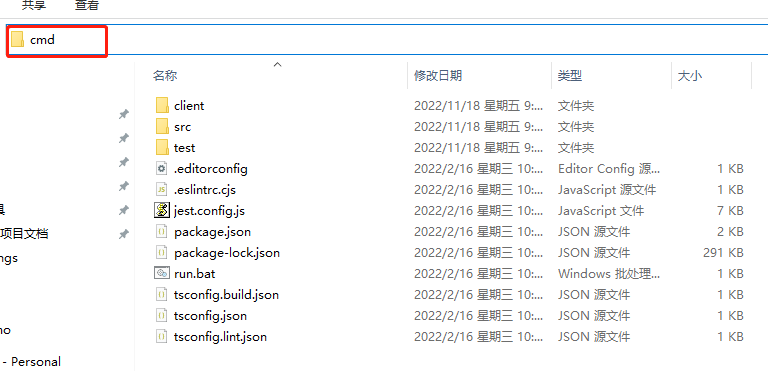

1.下载源码

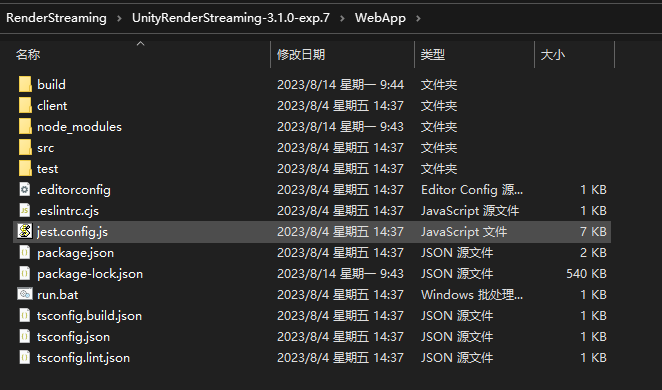

2.解压后找到WebApp

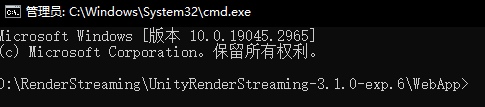

3.地址栏中输入cmd

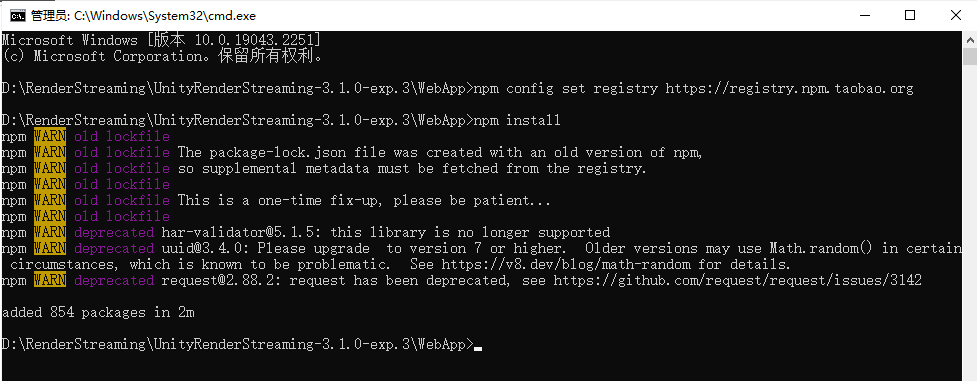

如果安装过慢或者超时导致失败,可以先输入

npm config set registry https://registry.npm.taobao.org

更换npm的安装镜像源为国内

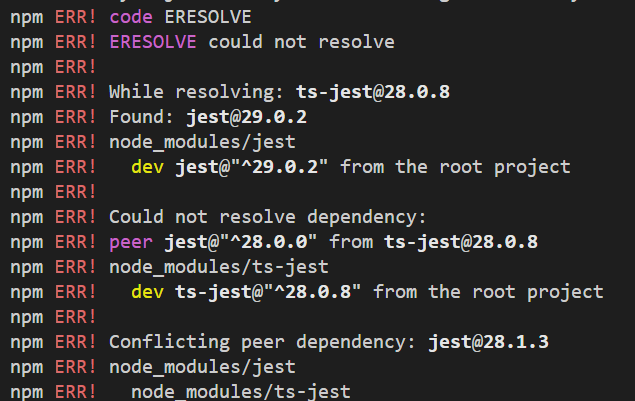

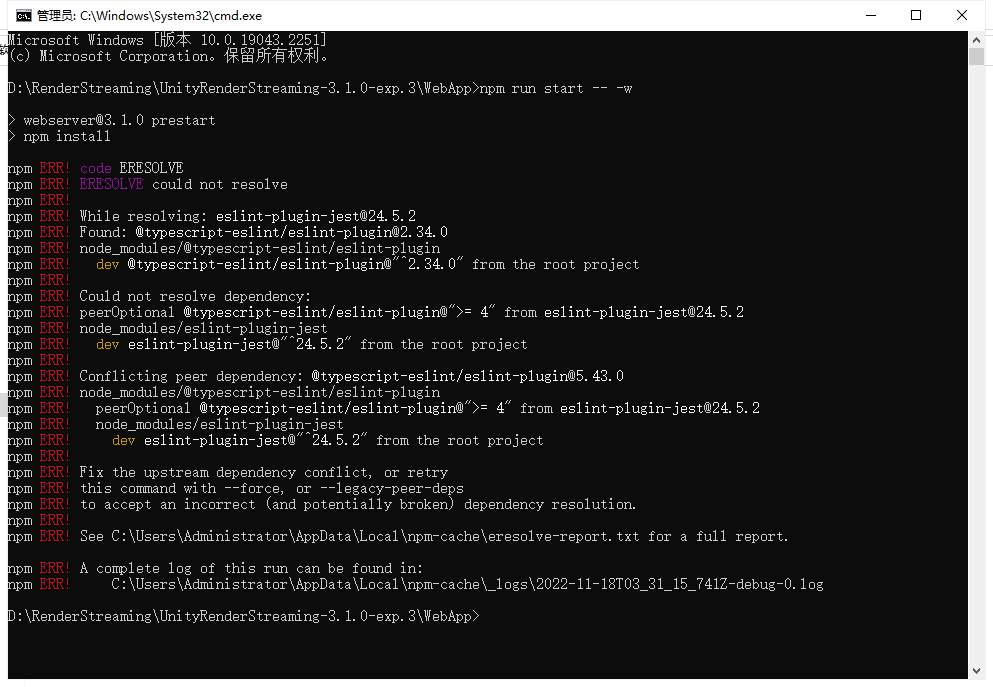

如果出现如下错误,可以输入npm config set legacy-peer-deps true,之后再次输入npm install或者npm i安装依赖**

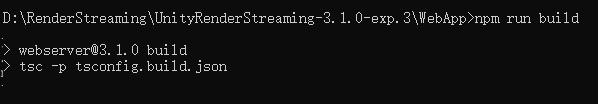

5.构建Server,输入npm run build

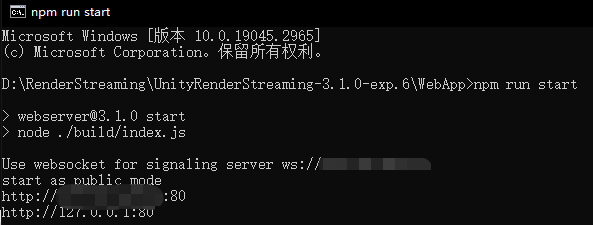

6.启动Server,输入npm run start

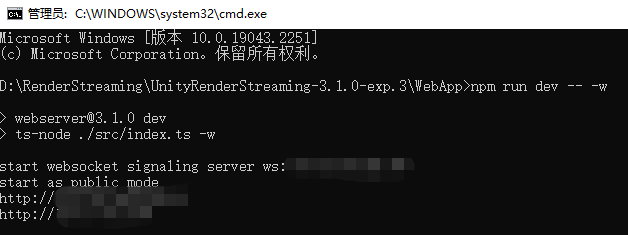

如果启动异常,如下图,使用npm run dev启动

启动后如下,暂不清楚为何出现上述情况,有了解该原因的小伙伴,可以告诉我一下,谢谢。

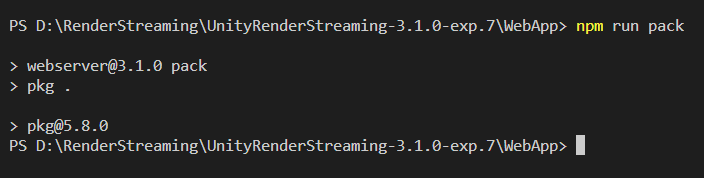

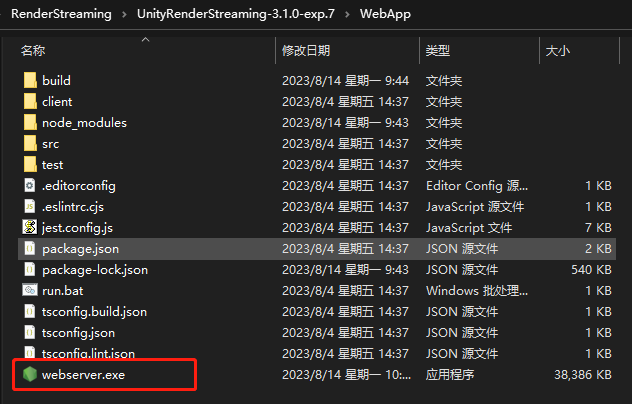

7.打包,npm run pack

双击启动,或者.\webserver.exe

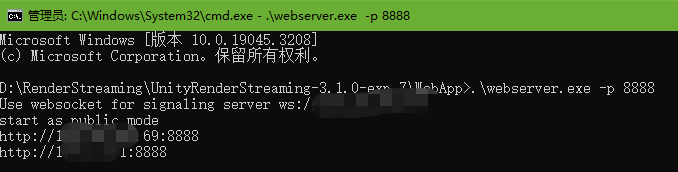

8.自定义端口号

9.根据自己需求修改web文件

Web和Unity相互发自定义消息

发送消息使用WebRTC中的RTCDataChannel的Send函数,接收消息使用OnMessage

1.Unity向Web发送消息

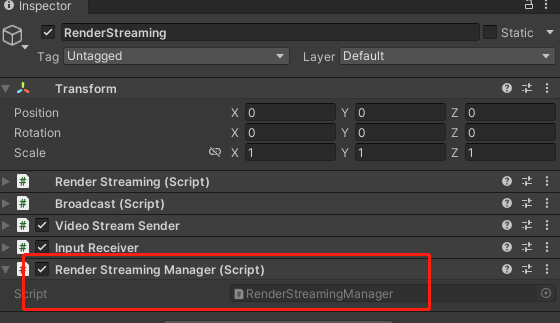

Unity项目中创建脚本如下,使用inputReceiver中的Channel对象进行Send

using Unity.RenderStreaming; using UnityEngine; public class RenderStreamingManager : MonoBehaviour { private InputReceiver inputReceiver; private void Awake() { inputReceiver = transform.GetComponent(); } public void SendMsg(string msg) { inputReceiver.Channel.Send(msg); } private void Update() { if (Input.GetKeyDown(KeyCode.P)) { SendMsg("Send Msg to web"); } } }记得添加到项目中

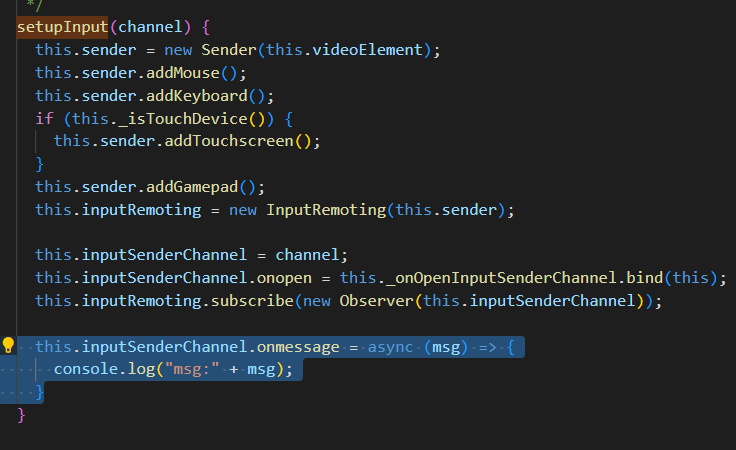

Web中接收如下

找到videoplayer.js文件中的inputSenderChannel对象,使用onmessage接收信息

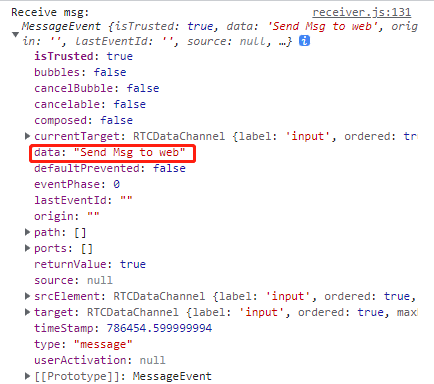

收到的消息内容如下,字符串会在data中
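A sketch of what such an onmessage handler could look like. A data channel can deliver either a string or binary data depending on how the sender wrote it, so the handler normalizes both to text. attachMessageHandler and the onText callback are hypothetical names; in videoplayer.js the channel would be the inputSenderChannel object.

```javascript
// Hypothetical helper: attaches a handler that converts incoming data
// channel payloads to text before passing them on.
function attachMessageHandler(channel, onText) {
  const decoder = new TextDecoder('utf-8');
  channel.onmessage = (event) => {
    const data = event.data;
    // Strings arrive as-is. Binary payloads are assumed to arrive as an
    // ArrayBuffer or typed array (binaryType 'arraybuffer').
    const text = typeof data === 'string' ? data : decoder.decode(data);
    onText(text);
  };
}

// Usage with a stand-in object; in the page this would be
// this.inputSenderChannel (an RTCDataChannel).
const fakeChannel = {};
attachMessageHandler(fakeChannel, (text) => console.log('from Unity:', text));
fakeChannel.onmessage({ data: 'Send Msg to web' });
```

Keeping the decoding in one place means the rest of the page only ever deals with strings, for example JSON produced by Unity.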

2.web向Unity发送消息

web中使用send函数发送消息

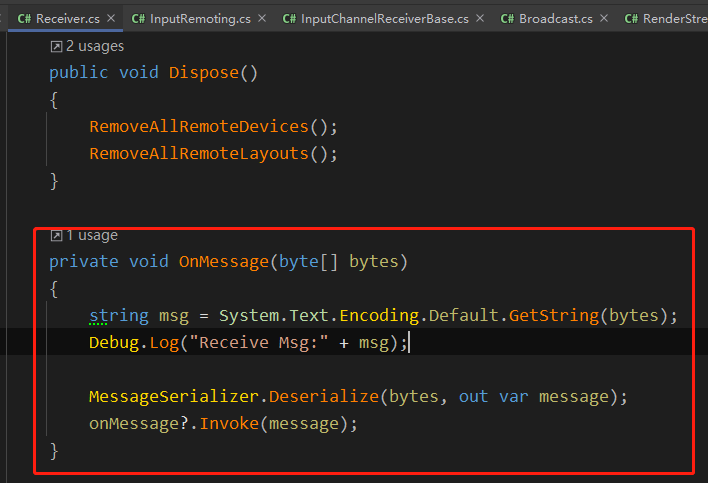

this.inputSenderChannel.send("msg to unity");Unity中Receiver.cs中OnMessage中通过RTCDataChannel接收了web发送的消息

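Using it in enterprise projects

1. Trading off sharpness against smoothness over the public internet

Raise the streaming quality as far as the server bandwidth allows. The settings I use in my project are shown below.

Depending on your bandwidth, adjust DepthBuffer, StreamingSize, Framerate, Bitrate, and ScaleResolution.

You can also get the VideoStreamSender component and change these values at runtime, which makes testing after release easier.

```csharp
VideoStreamSender videoStreamSender = transform.GetComponent<VideoStreamSender>();
videoStreamSender.SetTextureSize(new Vector2Int(gameConfig.RSResolutionWidth, gameConfig.RSResolutionHeight));
videoStreamSender.SetFrameRate(gameConfig.RSFrameRate);
videoStreamSender.SetScaleResolutionDown(gameConfig.RSScale);
videoStreamSender.SetBitrate((uint)gameConfig.RSMinBitRate, (uint)gameConfig.RSMaxBitRate);
```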

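2. Replacing Unity buttons with web buttons

Resolution changes currently often prevent Unity buttons from registering clicks correctly, so the web developers build matching buttons and the page sends Unity a message that triggers the corresponding button action.

I give every button an id. The web page sends the button id, and Unity looks it up in a config table and calls Invoke on the matching function.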
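On the web side, the button handler only has to send the id over the data channel. A minimal sketch, assuming a JSON payload with a hypothetical elementId field (the article does not show the wire format) and guarding against a channel that is not open yet:

```javascript
// Hypothetical helper: sends a button id to Unity over the data channel.
// The { elementId } payload shape is an assumption; match it to whatever
// the Unity OnMessage handler parses.
function sendButtonClick(channel, elementId) {
  // RTCDataChannel.send throws unless readyState is 'open'.
  if (!channel || channel.readyState !== 'open') {
    return false;
  }
  channel.send(JSON.stringify({ elementId }));
  return true;
}

// Usage with a stand-in channel; in the page this would be
// this.inputSenderChannel.
const sent = [];
const fakeChannel = { readyState: 'open', send: (msg) => sent.push(msg) };
sendButtonClick(fakeChannel, 12);
console.log(sent[0]);
```

Returning a boolean lets the page disable or grey out its buttons while the stream is still connecting.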

```csharp
private void OnClickButton(int elementId)
{
    // Look up UIName, FunctionName and Para by id in the config table.
    List<UIMatchFuncModel> uiMatchFuncModels = UniversalConfig.Instance.GetUIMatchModels();
    foreach (var item in uiMatchFuncModels.Where(item => item.id == elementId))
    {
        ExecuteFunction(item);
        break;
    }
}

private void ExecuteFunction(UIMatchFuncModel matchFuncModel)
{
    // Open the panel if it is not open yet, then invoke the configured function.
    UIPanel uiPanel = UIKit.GetPanel(matchFuncModel.uiName);
    if (uiPanel == null)
    {
        uiPanel = UIKit.OpenPanel(matchFuncModel.uiName);
    }
    uiPanel.Invoke(matchFuncModel.functionName, 0);
}
```

3. Resolution sync (see the resolution sync approach in the iOS and Android section below)

See the iOS and Android section below.

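4. Notes on showing landscape content on phones that default to portrait

A stream shown upright on a phone is too small, so phones usually display the landscape content while held in portrait. As a result:

1. When setting the resolution described in the previous point, check whether the web device is in landscape or portrait;

2. If the project has swipe gestures, remap them according to the orientation.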
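Both points can be sketched on the web side. The example assumes the page shows the landscape stream rotated 90 degrees clockwise on a portrait screen (for instance with CSS rotate(90deg)); with the opposite rotation the signs flip. landscapeSize and toContentDelta are hypothetical helper names.

```javascript
// Reports the viewport in landscape order (long side as width), so the
// resolution sent to Unity matches the rotated content.
function landscapeSize(width, height) {
  return { width: Math.max(width, height), height: Math.min(width, height) };
}

// Maps a swipe measured in screen coordinates to content coordinates.
// Assumes the content is rotated 90 degrees clockwise while the device is
// in portrait: content +x points down the screen, content +y points left.
function toContentDelta(screenDx, screenDy, isPortrait) {
  if (!isPortrait) {
    return { dx: screenDx, dy: screenDy };
  }
  return { dx: screenDy, dy: -screenDx };
}

// Usage: a portrait phone reports 1080x2340, and a swipe of (3, 10) on
// screen becomes (10, -3) in the rotated content.
console.log(landscapeSize(1080, 2340));
console.log(toContentDelta(3, 10, true));
```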

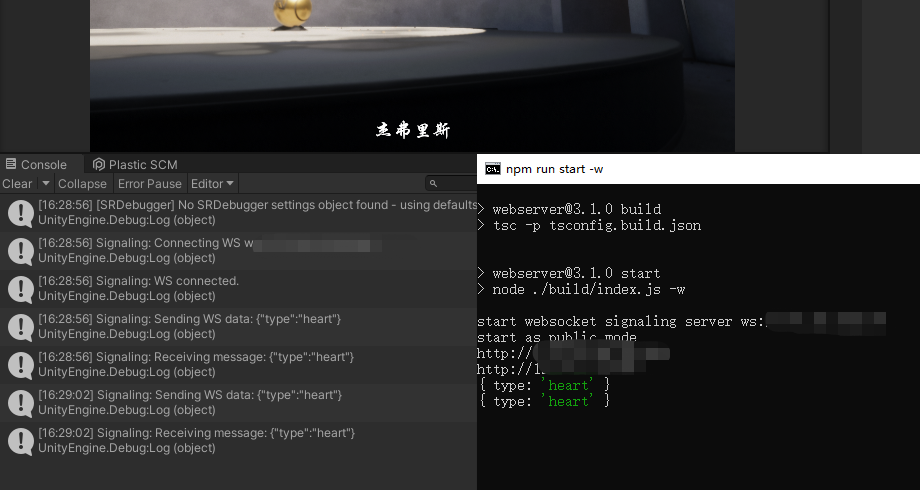

5.检测Unity端是否断开服务

项目中有过每隔半小时到一小时服务就会断开的情况,因此添加了心跳检测,进行重连操作

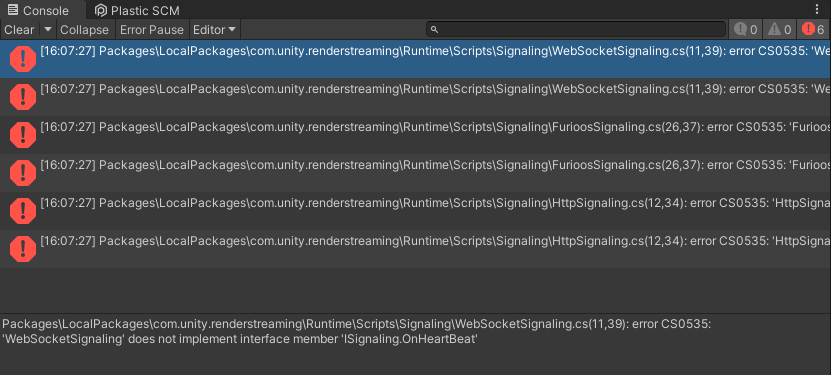

如下,在ISignaling中添加OnHeartBeatHandler

using Unity.WebRTC; namespace Unity.RenderStreaming.Signaling { public delegate void OnStartHandler(ISignaling signaling); public delegate void OnConnectHandler(ISignaling signaling, string connectionId, bool polite); public delegate void OnDisconnectHandler(ISignaling signaling, string connectionId); public delegate void OnOfferHandler(ISignaling signaling, DescData e); public delegate void OnAnswerHandler(ISignaling signaling, DescData e); public delegate void OnIceCandidateHandler(ISignaling signaling, CandidateData e); // heart public delegate void OnHeartBeatHandler(ISignaling signaling); public interface ISignaling { void Start(); void Stop(); event OnStartHandler OnStart; event OnConnectHandler OnCreateConnection; event OnDisconnectHandler OnDestroyConnection; event OnOfferHandler OnOffer; event OnAnswerHandler OnAnswer; event OnIceCandidateHandler OnIceCandidate; // heart event OnHeartBeatHandler OnHeartBeat; string Url { get; } void OpenConnection(string connectionId); void CloseConnection(string connectionId); void SendOffer(string connectionId, RTCSessionDescription offer); void SendAnswer(string connectionId, RTCSessionDescription answer); void SendCandidate(string connectionId, RTCIceCandidate candidate); // heart void SendHeartBeat(); } }修改报错

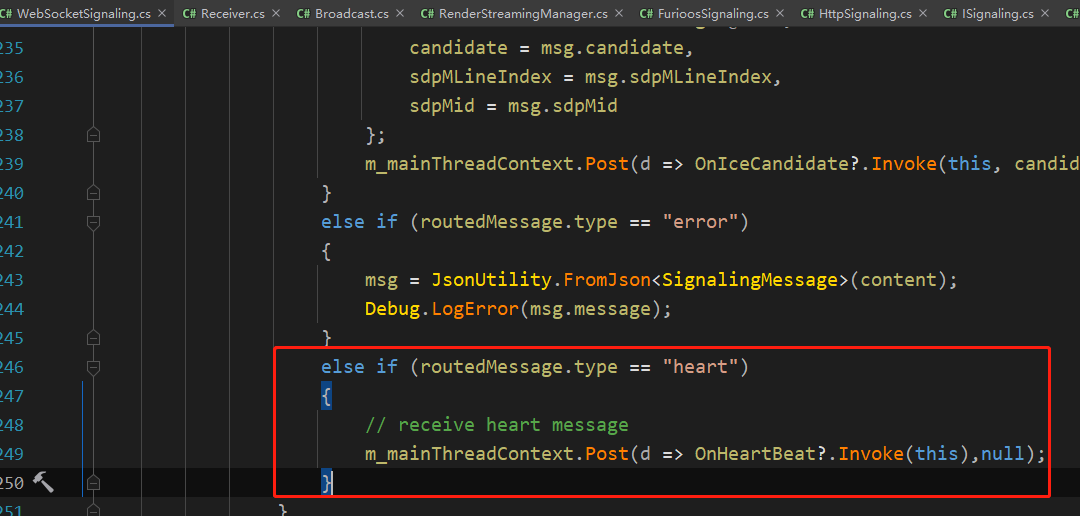

Add the following to the SendHeartBeat function in WebSocketSignaling:

```csharp
public void SendHeartBeat()
{
    // Sends {"type":"heart"} to the signaling server.
    this.WSSend($"{{\"type\":\"heart\"}}");
}
```

Create the RenderStreamingManager script with the following code:

```csharp
using System.Collections;
using System.Threading;
using Unity.RenderStreaming;
using Unity.RenderStreaming.Signaling;
using UnityEngine;

public class RenderStreamingManager : MonoBehaviour
{
    private SignalingManager _renderStreaming;
    private VideoStreamSender _videoStreamSender;
    private ISignaling _signaling;
    private InputReceiver _inputReceiver;
    // Holds IP and Port; loaded the same way as in the complete PC-side script.
    private RenderStreamingConfig _renderStreamingConfig;

    // heartbeat check
    private Coroutine _heartBeatCoroutine;
    private bool _isReceiveHeart;
    private float _heartBeatInterval = 5f;

    private void Awake()
    {
        _renderStreamingConfig = RenderStreamingDataManager.Instance.GetRenderStreamingConfig();

        // websocket
        SignalingSettings signalingSettings = new WebSocketSignalingSettings(
            $"ws://{_renderStreamingConfig.IP}:{_renderStreamingConfig.Port}");
        _signaling = new WebSocketSignaling(signalingSettings, SynchronizationContext.Current);
        if (_signaling != null)
        {
            _renderStreaming = transform.GetComponent<SignalingManager>();
            _videoStreamSender = transform.GetComponent<VideoStreamSender>();
            _inputReceiver = transform.GetComponent<InputReceiver>();
            _renderStreaming.Run(_signaling);

            // heart beat
            _signaling.OnStart += OnWebSocketStart;
            _signaling.OnHeartBeat += OnHeatBeat;
        }
    }

    #region HeartBeat

    private void OnWebSocketStart(ISignaling signaling)
    {
        StartHeartCheck();
    }

    private void OnHeatBeat(ISignaling signaling)
    {
        _isReceiveHeart = true;
    }

    // Sends one heartbeat and waits for the reply.
    private void StartHeartCheck()
    {
        _isReceiveHeart = false;
        _signaling.SendHeartBeat();
        _heartBeatCoroutine = StartCoroutine(HeartBeatCheck());
    }

    private IEnumerator HeartBeatCheck()
    {
        yield return new WaitForSeconds(_heartBeatInterval);
        if (!_isReceiveHeart)
        {
            // No reply within the interval: reconnect.
            Reconnect();
        }
        else
        {
            StartHeartCheck();
        }
    }

    private void Reconnect()
    {
        StartCoroutine(ReconnectDelay());
    }

    private IEnumerator ReconnectDelay()
    {
        _signaling.Stop();
        yield return new WaitForSeconds(1);
        _signaling.Start();
    }

    #endregion
}
```

Remember to turn off RunOnAwake on the RenderStreaming object.

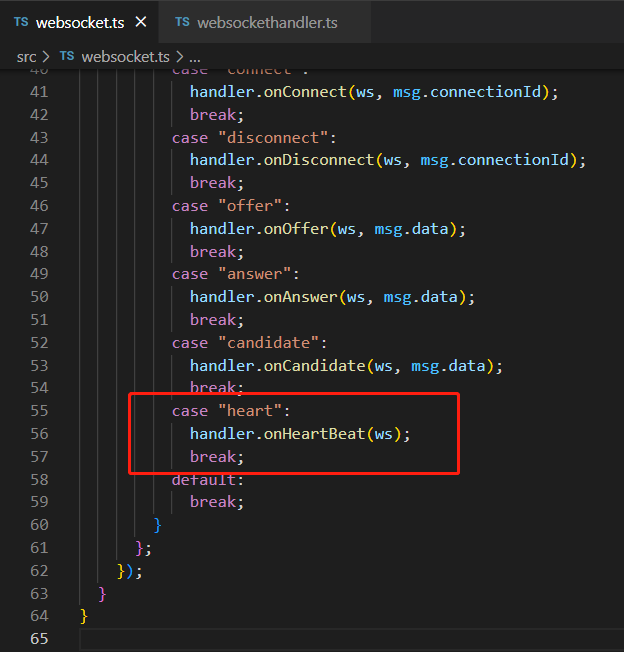

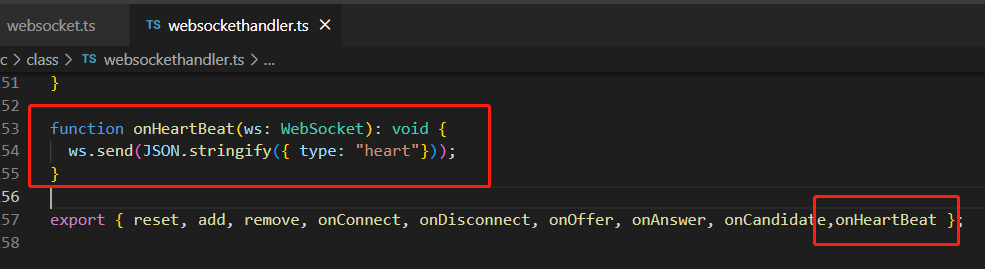

In the WebServer, find websocket.ts and websockethandler.ts and add the following.

Rebuild and restart the server. Unity and the server can then exchange heartbeat messages over WebSocket.
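The server-side change itself is not reproduced in the text, so the following is only a sketch of the idea in plain JavaScript (the actual files are TypeScript): when a message of type heart arrives, the server echoes it back so that the Unity side can raise OnHeartBeat. handleSignalingMessage is a hypothetical name, and the default branch stands in for the existing signaling handlers.

```javascript
// Hypothetical message dispatcher for the WebSocket server. `ws` is the
// client socket (anything with a send(string) method).
function handleSignalingMessage(ws, raw, forwardToSignaling) {
  let msg;
  try {
    msg = JSON.parse(raw);
  } catch (err) {
    return; // ignore malformed payloads
  }
  if (!msg || typeof msg.type !== 'string') {
    return;
  }
  switch (msg.type) {
    case 'heart':
      // Echo the heartbeat so the sender knows the socket is alive.
      ws.send(JSON.stringify({ type: 'heart' }));
      break;
    default:
      // connect, offer, answer, candidate and so on go to the existing handlers.
      forwardToSignaling(ws, msg);
      break;
  }
}

// Usage with a stand-in socket.
const replies = [];
const fakeSocket = { send: (data) => replies.push(data) };
handleSignalingMessage(fakeSocket, '{"type":"heart"}', () => {});
console.log(replies);
```

On the Unity side, the WebSocketSignaling message handler would need a matching branch that raises OnHeartBeat when it receives a message of type heart.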

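6. Detecting whether a user has connected

Bind the events in RenderStreamingManager:

```csharp
private void Awake()
{
    // connect & disconnect
    _inputReceiver.OnStartedChannel += OnStartChannel;
    _inputReceiver.OnStoppedChannel += OnStopChannel;
}

// Called when a user's data channel opens.
private void OnStartChannel(string connectionid)
{
}

// Called when a user's data channel closes.
private void OnStopChannel(string connectionid)
{
}
```

Building the iOS and Android clients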

1.参考Sample中的Receiver场景

2.自定义Receiver场景内容

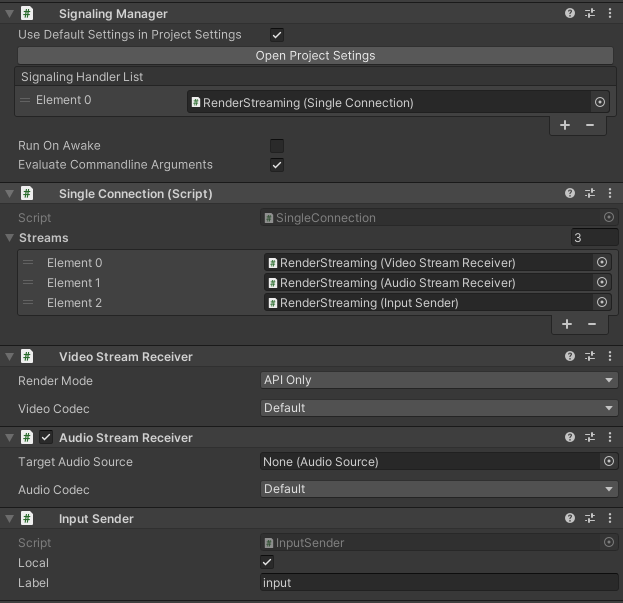

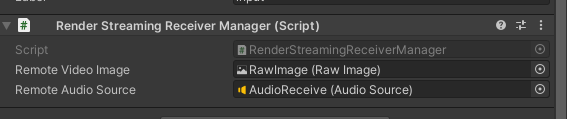

如图创建物体,添加SignalingManager SingleConnection VideoStreamReceiver InputSender组件

AudioStreamReceiver是声音相关的,根据自己需要添加

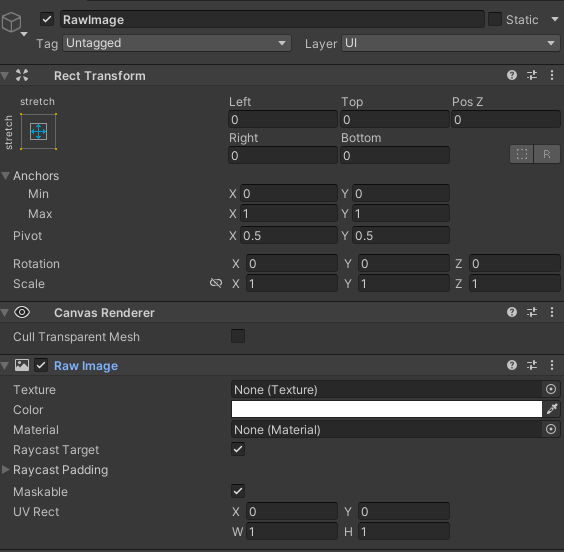

新建Canvas,创建RawImage,作为接收Texture的载体

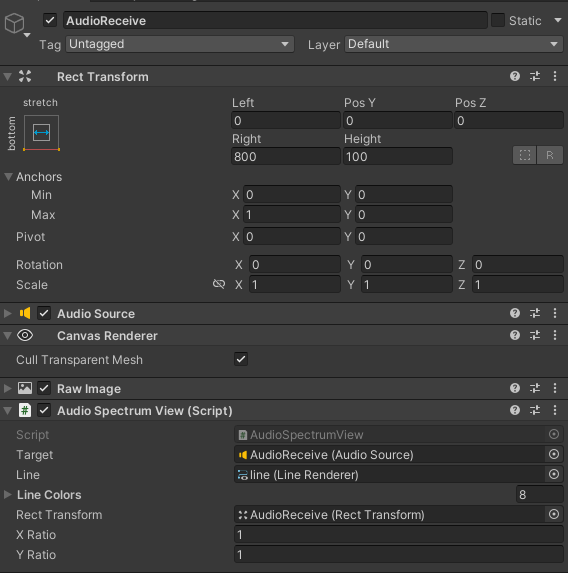

Audio相关的内容,根据Sample中配置,不需要声音相关的可以忽略

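3. Creating the manager script

Awake gets the components and binds the events.

StartConnect opens the WebSocket connection. Once it is connected, the WebRTC connection starts, and the OnUpdateReceiveTexture event bound on VideoStreamReceiver then updates the picture in the RawImage.

OnStartedChannel also sends the current device resolution. The message structure is up to you; I created a ScreenMessage that carries width and height and send it to the client (the PC-side Unity application).

The complete mobile-side script: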

```csharp
using System;
using System.Threading;
using Unity.RenderStreaming;
using Unity.RenderStreaming.Signaling;
using UnityEngine;
using UnityEngine.UI;

namespace Test.RenderStream
{
    [RequireComponent(typeof(SignalingManager))]
    [RequireComponent(typeof(SingleConnection))]
    [RequireComponent(typeof(VideoStreamReceiver))]
    [RequireComponent(typeof(InputSender))]
    public class RenderStreamingReceiverManager : MonoSingleton<RenderStreamingReceiverManager>
    {
        private SignalingManager _renderStreaming;
        private VideoStreamReceiver _videoStreamReceiver;
        private AudioStreamReceiver _audioStreamReceiver;
        private InputSender _inputSender;
        private SingleConnection _connection;

        [SerializeField] private RawImage remoteVideoImage;
        [SerializeField] private AudioSource remoteAudioSource;

        private ISignaling _signaling;
        private string _connectionId;
        private Vector2 lastSize;

        private void Awake()
        {
            // Get Component
            _renderStreaming = transform.GetComponent<SignalingManager>();
            _videoStreamReceiver = transform.GetComponent<VideoStreamReceiver>();
            _inputSender = transform.GetComponent<InputSender>();
            _connection = transform.GetComponent<SingleConnection>();

            // Connect DisConnect
            _inputSender.OnStartedChannel += OnStartedChannel;
            _inputSender.OnStoppedChannel += OnStopChannel;
            _videoStreamReceiver.OnUpdateReceiveTexture += OnUpdateReceiveTexture;

            // Audio is optional.
            _audioStreamReceiver = transform.GetComponent<AudioStreamReceiver>();
            if (_audioStreamReceiver)
            {
                _audioStreamReceiver.OnUpdateReceiveAudioSource += source =>
                {
                    source.loop = true;
                    source.Play();
                };
            }
        }

        private void Update()
        {
            // Call SetInputChange if window size is changed.
            var size = remoteVideoImage.rectTransform.sizeDelta;
            if (lastSize == size)
                return;
            lastSize = size;
            CalculateInputRegion();
        }

        public void StartConnect()
        {
            SignalingSettings signalingSettings = new WebSocketSignalingSettings(
                $"ws://{RenderStreamingDataManager.Instance.GetIP()}:{RenderStreamingDataManager.Instance.GetPort()}");
            _signaling = new WebSocketSignaling(signalingSettings, SynchronizationContext.Current);
            _signaling.OnStart += OnWebSocketStart;
            _renderStreaming.Run(_signaling);
        }

        public void StopConnect()
        {
            StopRenderStreaming();
            _signaling.OnStart -= OnWebSocketStart;
            _renderStreaming.Stop();
        }

        private void OnWebSocketStart(ISignaling signaling)
        {
            StartRenderStreaming();
        }

        private void StartRenderStreaming()
        {
            if (string.IsNullOrEmpty(_connectionId))
            {
                _connectionId = Guid.NewGuid().ToString("N");
            }
            if (_audioStreamReceiver)
            {
                _audioStreamReceiver.targetAudioSource = remoteAudioSource;
            }
            _connection.CreateConnection(_connectionId);
        }

        private void StopRenderStreaming()
        {
            _connection.DeleteConnection(_connectionId);
            _connectionId = String.Empty;
        }

        void OnUpdateReceiveTexture(Texture texture)
        {
            remoteVideoImage.texture = texture;
            CalculateInputRegion();
        }

        void OnStartedChannel(string connectionId)
        {
            Debug.Log("Connected: " + connectionId);
            // Send this device's resolution to the client.
            SendScreenToClient();
            CalculateInputRegion();
        }

        private void OnStopChannel(string connectionId)
        {
            Debug.Log("Disconnected: " + connectionId);
        }

        private void SendScreenToClient()
        {
            ScreenMessage screenMessage = new ScreenMessage(Screen.width, Screen.height);
            string msg = JsonUtility.ToJson(screenMessage);
            SendMsg(msg);
        }

        private void SendMsg(string msg)
        {
            _inputSender.Channel.Send(msg);
        }

        private void CalculateInputRegion()
        {
            if (_inputSender == null || !_inputSender.IsConnected || remoteVideoImage.texture == null)
                return;
            var (region, size) = remoteVideoImage.GetRegionAndSize();
            _inputSender.CalculateInputResion(region, size);
            _inputSender.EnableInputPositionCorrection(true);
        }
    }

    static class InputSenderExtension
    {
        public static (Rect, Vector2Int) GetRegionAndSize(this RawImage image)
        {
            // correct pointer position
            Vector3[] corners = new Vector3[4];
            image.rectTransform.GetWorldCorners(corners);
            Camera camera = image.canvas.worldCamera;
            var corner0 = RectTransformUtility.WorldToScreenPoint(camera, corners[0]);
            var corner2 = RectTransformUtility.WorldToScreenPoint(camera, corners[2]);
            var region = new Rect(
                corner0.x,
                corner0.y,
                corner2.x - corner0.x,
                corner2.y - corner0.y
            );
            var size = new Vector2Int(image.texture.width, image.texture.height);
            return (region, size);
        }
    }
}
```

The message definitions follow; structure them however you like.

```csharp
using System;

public enum MessageType
{
    Screen
}

[Serializable]
public class RenderStreamingMessage
{
    public MessageType MessageType;
}

[Serializable]
public class ScreenMessage : RenderStreamingMessage
{
    public int Width;
    public int Height;

    public ScreenMessage(int width, int height)
    {
        MessageType = MessageType.Screen;
        Width = width;
        Height = height;
    }
}
```

4. Screen adaptation after the client receives a ScreenMessage

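After receiving the message, parse out the width and height and compute a resolution that matches the mobile device's aspect ratio.

Use _videoStreamSender.SetTextureSize to change the output resolution.

Use CalculateInputRegion to correct the input coordinates for the new resolution. Resolution changes for the web client work the same way.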
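The aspect-ratio fit described above, together with the message a web client would send, can be sketched as follows. fitResolution mirrors the arithmetic of ResetOutputScreen in the PC-side script; buildScreenMessage assumes that Unity's JsonUtility reads the MessageType enum as its integer value (Screen = 0). Both function names are hypothetical.

```javascript
// Fits the device aspect ratio inside the configured maximum resolution.
function fitResolution(deviceWidth, deviceHeight, maxWidth = 1920, maxHeight = 1080) {
  const configRate = maxWidth / maxHeight;
  const curRate = deviceWidth / deviceHeight;
  if (curRate > configRate) {
    // Device is wider than the configured frame: width is the limit.
    return { width: maxWidth, height: Math.trunc(maxWidth / curRate) };
  }
  // Device is taller (or equal): height is the limit.
  return { width: Math.trunc(maxHeight * curRate), height: maxHeight };
}

// Builds the JSON a web client could send in place of the mobile app.
// Field names match the ScreenMessage class; MessageType 0 is assumed to
// be the integer form of MessageType.Screen.
function buildScreenMessage(width, height) {
  return JSON.stringify({ MessageType: 0, Width: width, Height: height });
}

// Usage: a 2340x1080 phone against a 1920x1080 limit.
console.log(fitResolution(2340, 1080)); // { width: 1920, height: 886 }
console.log(buildScreenMessage(2340, 1080));
```

For the 2340x1080 phone the device ratio (about 2.17) exceeds 16:9 (about 1.78), so the width is pinned to 1920 and the height becomes 1920 / 2.1667, truncated to 886.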

```csharp
private void OnMessage(byte[] bytes)
{
    // Data channel text is UTF-8 encoded.
    string msg = System.Text.Encoding.UTF8.GetString(bytes);
    if (string.IsNullOrEmpty(msg) || !msg.Contains("MessageType"))
    {
        return;
    }
    RenderStreamingMessage messageObject = JsonUtility.FromJson<RenderStreamingMessage>(msg);
    if (messageObject == null)
    {
        return;
    }
    switch (messageObject.MessageType)
    {
        case MessageType.Screen:
            ScreenMessage screenMessage = JsonUtility.FromJson<ScreenMessage>(msg);
            ResetOutputScreen(screenMessage);
            break;
        default:
            break;
    }
}

private void ResetOutputScreen(ScreenMessage screenMessage)
{
    int width = screenMessage.Width;
    int height = screenMessage.Height;
    float targetWidth, targetHeight;
    int configWidth = 1920;
    int configHeight = 1080;
    float configRate = (float) configWidth / configHeight;
    float curRate = (float) width / height;
    if (curRate > configRate)
    {
        // Device is wider than the configured frame: width is the limit.
        targetWidth = configWidth;
        targetHeight = targetWidth / curRate;
    }
    else
    {
        // Device is taller: height is the limit.
        targetHeight = configHeight;
        targetWidth = targetHeight * curRate;
    }
    _videoStreamSender.SetTextureSize(new Vector2Int((int) targetWidth, (int) targetHeight));
    // This call is the key step that corrects the input offset after the resolution change.
    CalculateInputRegion();
}

private void CalculateInputRegion()
{
    if (!_inputReceiver.IsConnected)
        return;
    var width = (int)(_videoStreamSender.width / _videoStreamSender.scaleResolutionDown);
    var height = (int)(_videoStreamSender.height / _videoStreamSender.scaleResolutionDown);
    _inputReceiver.CalculateInputRegion(new Vector2Int(width, height), new Rect(0, 0, Screen.width, Screen.height));
    _inputReceiver.SetEnableInputPositionCorrection(true);
}
```

The complete PC-side code:

```csharp
using System.Collections;
using System.Threading;
using Unity.RenderStreaming;
using Unity.RenderStreaming.Signaling;
using UnityEngine;

namespace Test.RenderStream
{
    [RequireComponent(typeof(SignalingManager))]
    [RequireComponent(typeof(Broadcast))]
    [RequireComponent(typeof(VideoStreamSender))]
    [RequireComponent(typeof(InputReceiver))]
    public class RenderStreamingManager : MonoBehaviour
    {
        private SignalingManager _renderStreaming;
        private VideoStreamSender _videoStreamSender;
        private InputReceiver _inputReceiver;
        private RenderStreamingConfig _renderStreamingConfig;
        private ISignaling _signaling;

        // heartbeat check
        private Coroutine _heartBeatCoroutine;
        private bool _isReceiveHeart;

        private int lastWidth;
        private int lastHeight;

        private void Awake()
        {
            // Load Config
            _renderStreamingConfig = RenderStreamingDataManager.Instance.GetRenderStreamingConfig();

            // Get Component
            _renderStreaming = transform.GetComponent<SignalingManager>();
            _videoStreamSender = transform.GetComponent<VideoStreamSender>();
            _inputReceiver = transform.GetComponent<InputReceiver>();

            // Connect DisConnect
            _inputReceiver.OnStartedChannel += OnStartChannel;
            _inputReceiver.OnStoppedChannel += OnStopChannel;
        }

        private void Start()
        {
            if (!_renderStreamingConfig.IsOn)
                return;

            SignalingSettings signalingSettings = new WebSocketSignalingSettings(
                $"ws://{_renderStreamingConfig.IP}:{_renderStreamingConfig.Port}");
            _signaling = new WebSocketSignaling(signalingSettings, SynchronizationContext.Current);

            // Settings by Config
            _videoStreamSender.SetTextureSize(new Vector2Int(_renderStreamingConfig.ResolutionWidth, _renderStreamingConfig.ResolutionHeight));
            _videoStreamSender.SetFrameRate(_renderStreamingConfig.FrameRate);
            _videoStreamSender.SetScaleResolutionDown(_renderStreamingConfig.Scale);
            _videoStreamSender.SetBitrate((uint) _renderStreamingConfig.MinBitRate, (uint) _renderStreamingConfig.MaxBitRate);

            _renderStreaming.Run(_signaling);

            // heart beat
            _signaling.OnStart += OnWebSocketStart;
            _signaling.OnHeartBeat += OnHeatBeat;
        }

        private void Update()
        {
            // Call SetInputChange if window size is changed.
            var width = Screen.width;
            var height = Screen.height;
            if (lastWidth == width && lastHeight == height)
                return;
            lastWidth = width;
            lastHeight = height;
            CalculateInputRegion();
        }

        private void OnStartChannel(string connectionId)
        {
            _inputReceiver.Channel.OnMessage += OnMessage;
            CalculateInputRegion();
        }

        private void OnStopChannel(string connectionId)
        {
        }

        private void CalculateInputRegion()
        {
            if (!_inputReceiver.IsConnected)
                return;
            var width = (int)(_videoStreamSender.width / _videoStreamSender.scaleResolutionDown);
            var height = (int)(_videoStreamSender.height / _videoStreamSender.scaleResolutionDown);
            _inputReceiver.CalculateInputRegion(new Vector2Int(width, height), new Rect(0, 0, Screen.width, Screen.height));
            _inputReceiver.SetEnableInputPositionCorrection(true);
        }

        #region HeartBeat

        private void OnWebSocketStart(ISignaling signaling)
        {
            StartHeartCheck();
        }

        private void OnHeatBeat(ISignaling signaling)
        {
            _isReceiveHeart = true;
        }

        private void StartHeartCheck()
        {
            _isReceiveHeart = false;
            _signaling.SendHeartBeat();
            _heartBeatCoroutine = StartCoroutine(HeartBeatCheck());
        }

        private IEnumerator HeartBeatCheck()
        {
            yield return new WaitForSeconds(_renderStreamingConfig.HeartRate);
            if (!_isReceiveHeart)
            {
                // No reply within the interval: reconnect.
                Reconnect();
            }
            else
            {
                StartHeartCheck();
            }
        }

        private void Reconnect()
        {
            StartCoroutine(ReconnectDelay());
        }

        private IEnumerator ReconnectDelay()
        {
            _signaling.Stop();
            yield return new WaitForSeconds(1);
            _signaling.Start();
        }

        #endregion

        #region Message

        private void OnMessage(byte[] bytes)
        {
            // Data channel text is UTF-8 encoded.
            string msg = System.Text.Encoding.UTF8.GetString(bytes);
            if (string.IsNullOrEmpty(msg) || !msg.Contains("MessageType"))
            {
                return;
            }
            RenderStreamingMessage messageObject = JsonUtility.FromJson<RenderStreamingMessage>(msg);
            if (messageObject == null)
            {
                return;
            }
            switch (messageObject.MessageType)
            {
                case MessageType.Screen:
                    ScreenMessage screenMessage = JsonUtility.FromJson<ScreenMessage>(msg);
                    ResetOutputScreen(screenMessage);
                    break;
                default:
                    break;
            }
        }

        public void SendMsg(string msg)
        {
            _inputReceiver.Channel.Send(msg);
        }

        #endregion

        private void ResetOutputScreen(ScreenMessage screenMessage)
        {
            int width = screenMessage.Width;
            int height = screenMessage.Height;
            float targetWidth, targetHeight;
            Debug.Log("Resolution before adjustment: " + width + "X" + height);
            int configWidth = _renderStreamingConfig.ResolutionWidth;
            int configHeight = _renderStreamingConfig.ResolutionHeight;
            float configRate = (float) configWidth / configHeight;
            float curRate = (float) width / height;
            if (curRate > configRate)
            {
                // width
                targetWidth = configWidth;
                targetHeight = targetWidth / curRate;
            }
            else
            {
                targetHeight = configHeight;
                targetWidth = targetHeight * curRate;
            }
            Debug.Log("Resolution after adjustment: " + targetWidth + "X" + targetHeight);
            _videoStreamSender.SetTextureSize(new Vector2Int((int) targetWidth, (int) targetHeight));
            CalculateInputRegion();
        }
    }
}
```

The RenderStreamingConfig structure:

```csharp
public class RenderStreamingConfig
{
    public bool IsOn;
    public string IP;
    public int Port;
    public int ResolutionWidth;
    public int ResolutionHeight;
    public int FrameRate;
    public int Scale;
    public int MinBitRate;
    public int MaxBitRate;
    public int HeartRate;
}
```

5. Project settings before building

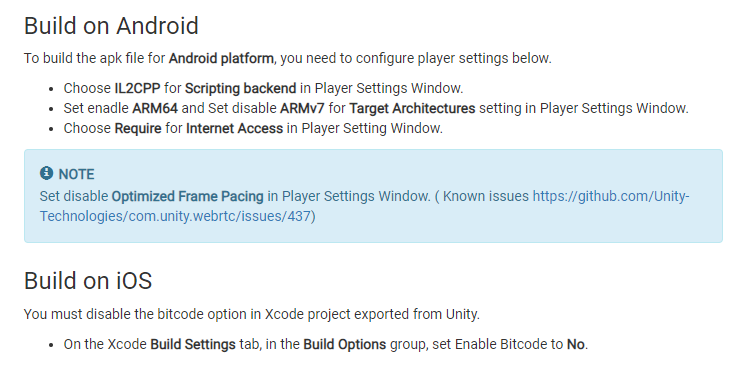

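Change the settings as required by the WebRTC documentation.

6. Build and test

Known issues

Sending messages causes memory to grow on the PC side. I will follow up on this memory issue later.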