Relearning the SurfaceFlinger Composition Flow on Android: GPU Composition


Introduction

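When SurfaceFlinger chooses GPU composition (the CLIENT composition type) for some layers, it uses RenderEngine to draw those layers into a GraphicBuffer. The engine is SkiaGLRenderEngine, or GLESRenderEngine in the code excerpts below. SurfaceFlinger then passes that GraphicBuffer to the HWC module by calling setClientTarget. HWC processes it further and presents the image in that GraphicBuffer on the screen.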

This article focuses on two questions:

- Where does the GraphicBuffer that stores the result of GPU composition come from?

- What is the main logic SF executes during GPU composition?

1. Starting from the output of dumpsys SurfaceFlinger

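If you have looked at the output of dumpsys SurfaceFlinger, you may have noticed the information printed by GraphicBufferAllocator/GraphicBufferMapper. It records every graphic buffer that was allocated or imported through the Gralloc module.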

Below is part of the dumpsys SurfaceFlinger output captured on my platform:

```
GraphicBufferAllocator buffers:
    Handle |        Size |     W (Stride) x H | Layers | Format |  Usage | Requestor
0xf3042b90 | 8100.00 KiB | 1920 (1920) x 1080 |      1 |      1 | 0x1b00 | FramebufferSurface
0xf3042f30 | 8100.00 KiB | 1920 (1920) x 1080 |      1 |      1 | 0x1b00 | FramebufferSurface
0xf3046020 | 8100.00 KiB | 1920 (1920) x 1080 |      1 |      1 | 0x1b00 | FramebufferSurface
Total allocated by GraphicBufferAllocator (estimate): 24300.00 KB
Imported gralloc buffers:
+ name:FramebufferSurface, id:e100000000, size:8.3e+03KiB, w/h:780x438, usage: 0x40001b00, req fmt:5, fourcc/mod:875713089/576460752303423505, dataspace: 0x0, compressed: true
	planes: B/G/R/A: w/h:780x440, stride:1e00 bytes, size:818000
+ name:FramebufferSurface, id:e100000001, size:8.3e+03KiB, w/h:780x438, usage: 0x40001b00, req fmt:5, fourcc/mod:875713089/576460752303423505, dataspace: 0x0, compressed: true
	planes: B/G/R/A: w/h:780x440, stride:1e00 bytes, size:818000
+ name:FramebufferSurface, id:e100000002, size:8.3e+03KiB, w/h:780x438, usage: 0x40001b00, req fmt:5, fourcc/mod:875713089/576460752303423505, dataspace: 0x0, compressed: true
	planes: B/G/R/A: w/h:780x440, stride:1e00 bytes, size:818000
Total imported by gralloc: 5e+04KiB
```

In the output above, one name seems, somehow, rather interesting: FramebufferSurface.

FramebufferSurface, listed as the Requestor, asked for three graphic buffers and specified their width, height, format, usage and so on.

These three GraphicBuffers are exactly the ones used to store the result of GPU composition.
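The sizes in this dump are easy to cross-check by hand. The minimal C++ sketch below assumes a linear RGBA_8888 layout (HAL format 1) with 4 bytes per pixel and no padding beyond the reported stride. `bufferBytes` and `toKiB` are hypothetical helpers, not Android APIs. The sketch also shows that the `w/h:780x438` and `stride:1e00` fields in the imported-buffer list are hexadecimal.

```cpp
#include <cassert>
#include <cstdint>

// Size in bytes of one linear buffer: stride (pixels) * height * bytes per pixel.
// Assumption: RGBA_8888 stores 4 bytes per pixel, and stride == width here.
constexpr std::uint64_t bufferBytes(std::uint32_t stridePx, std::uint32_t height,
                                    std::uint32_t bytesPerPixel) {
    return static_cast<std::uint64_t>(stridePx) * height * bytesPerPixel;
}

// Convert bytes to KiB (1 KiB = 1024 bytes), the unit used by the allocator dump.
constexpr double toKiB(std::uint64_t bytes) { return static_cast<double>(bytes) / 1024.0; }

// The imported-buffer list prints dimensions and stride in hex.
static_assert(0x780 == 1920 && 0x438 == 1080, "w/h:780x438 is 1920x1080");
static_assert(0x1e00 == 1920 * 4, "stride:1e00 bytes is 1920 pixels * 4 bytes");

// One 1920x1080 RGBA_8888 buffer is 8294400 bytes, i.e. 8100 KiB.
static_assert(bufferBytes(1920, 1080, 4) == 8294400, "one framebuffer");
```

Three such buffers give the 24300.00 KB total that GraphicBufferAllocator reports. The imported entries are slightly larger (8.3e+03 KiB). Their planes line shows the height padded to 0x440 (1088) rows, and the exact gralloc layout is platform-specific, so this sketch does not try to reproduce that figure.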

2. How SF prepares for GPU composition

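As the saying goes, never fight a battle unprepared. SF is no exception. To be ready for GPU composition, it sets up the EGL environment at startup. The logic is as follows: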

File: frameworks/native/services/surfaceflinger/SurfaceFlinger.cpp

```cpp
void SurfaceFlinger::init() {
    ALOGI(  "SurfaceFlinger's main thread ready to run. "
            "Initializing graphics H/W...");
    Mutex::Autolock _l(mStateLock);

    // Get a RenderEngine for the given display / config (can't fail)
    // TODO(b/77156734): We need to stop casting and use HAL types when possible.
    // Sending maxFrameBufferAcquiredBuffers as the cache size is tightly tuned to single-display.
    // Create the RenderEngine object
    mCompositionEngine->setRenderEngine(renderengine::RenderEngine::create(
            renderengine::RenderEngineCreationArgs::Builder()
                    .setPixelFormat(static_cast(defaultCompositionPixelFormat))
                    .setImageCacheSize(maxFrameBufferAcquiredBuffers)
                    .setUseColorManagerment(useColorManagement)
                    .setEnableProtectedContext(enable_protected_contents(false))
                    .setPrecacheToneMapperShaderOnly(false)
                    .setSupportsBackgroundBlur(mSupportsBlur)
                    .setContextPriority(useContextPriority
                            ? renderengine::RenderEngine::ContextPriority::HIGH
                            : renderengine::RenderEngine::ContextPriority::MEDIUM)
                    .build()));
    ...
}
```

File: frameworks/native/libs/renderengine/RenderEngine.cpp

```cpp
std::unique_ptr RenderEngine::create(const RenderEngineCreationArgs& args) {
    char prop[PROPERTY_VALUE_MAX];
    // If PROPERTY_DEBUG_RENDERENGINE_BACKEND is not set, the backend defaults to "gles"
    property_get(PROPERTY_DEBUG_RENDERENGINE_BACKEND, prop, "gles");
    if (strcmp(prop, "gles") == 0) {
        ALOGD("RenderEngine GLES Backend");
        // Create the GLESRenderEngine object
        return renderengine::gl::GLESRenderEngine::create(args);
    }
    ALOGE("UNKNOWN BackendType: %s, create GLES RenderEngine.", prop);
    return renderengine::gl::GLESRenderEngine::create(args);
}
```

File: frameworks/native/libs/renderengine/gl/GLESRenderEngine.cpp

```cpp
std::unique_ptr GLESRenderEngine::create(const RenderEngineCreationArgs& args) {
    // initialize EGL for the default display
    // Get the EGLDisplay
    EGLDisplay display = eglGetDisplay(EGL_DEFAULT_DISPLAY);
    if (!eglInitialize(display, nullptr, nullptr)) {
        LOG_ALWAYS_FATAL("failed to initialize EGL");
    }

    // Query the EGL version
    const auto eglVersion = eglQueryStringImplementationANDROID(display, EGL_VERSION);
    if (!eglVersion) {
        checkGlError(__FUNCTION__, __LINE__);
        LOG_ALWAYS_FATAL("eglQueryStringImplementationANDROID(EGL_VERSION) failed");
    }

    // Query which EGL extensions are supported
    const auto eglExtensions = eglQueryStringImplementationANDROID(display, EGL_EXTENSIONS);
    if (!eglExtensions) {
        checkGlError(__FUNCTION__, __LINE__);
        LOG_ALWAYS_FATAL("eglQueryStringImplementationANDROID(EGL_EXTENSIONS) failed");
    }

    // Set feature flags from the supported extensions (on this platform they all come out true)
    GLExtensions& extensions = GLExtensions::getInstance();
    extensions.initWithEGLStrings(eglVersion, eglExtensions);

    // The code assumes that ES2 or later is available if this extension is
    // supported.
    EGLConfig config = EGL_NO_CONFIG;
    if (!extensions.hasNoConfigContext()) {
        config = chooseEglConfig(display, args.pixelFormat, /*logConfig*/ true);
    }

    bool useContextPriority =
            extensions.hasContextPriority() && args.contextPriority == ContextPriority::HIGH;
    EGLContext protectedContext = EGL_NO_CONTEXT;
    if (args.enableProtectedContext && extensions.hasProtectedContent()) {
        protectedContext = createEglContext(display, config, nullptr, useContextPriority,
                                            Protection::PROTECTED);
        ALOGE_IF(protectedContext == EGL_NO_CONTEXT, "Can't create protected context");
    }

    // Create the non-protected EGLContext
    EGLContext ctxt = createEglContext(display, config, protectedContext, useContextPriority,
                                       Protection::UNPROTECTED);
    LOG_ALWAYS_FATAL_IF(ctxt == EGL_NO_CONTEXT, "EGLContext creation failed");

    EGLSurface dummy = EGL_NO_SURFACE;
    // Surfaceless contexts are supported on this platform, so this branch is skipped
    if (!extensions.hasSurfacelessContext()) {
        dummy = createDummyEglPbufferSurface(display, config, args.pixelFormat,
                                             Protection::UNPROTECTED);
        LOG_ALWAYS_FATAL_IF(dummy == EGL_NO_SURFACE, "can't create dummy pbuffer");
    }
    // eglMakeCurrent binds the EGLContext on this EGLDisplay to the current thread
    EGLBoolean success = eglMakeCurrent(display, dummy, dummy, ctxt);
    LOG_ALWAYS_FATAL_IF(!success, "can't make dummy pbuffer current");
    ...
    std::unique_ptr engine;
    switch (version) {
        case GLES_VERSION_1_0:
        case GLES_VERSION_1_1:
            LOG_ALWAYS_FATAL("SurfaceFlinger requires OpenGL ES 2.0 minimum to run.");
            break;
        case GLES_VERSION_2_0:
        case GLES_VERSION_3_0:
            // Construct the GLESRenderEngine
            engine = std::make_unique(args, display, config, ctxt, dummy,
                                      protectedContext, protectedDummy);
            break;
    }
    ...
}

GLESRenderEngine::GLESRenderEngine(const RenderEngineCreationArgs& args, EGLDisplay display,
                                   EGLConfig config, EGLContext ctxt, EGLSurface dummy,
                                   EGLContext protectedContext, EGLSurface protectedDummy)
      : renderengine::impl::RenderEngine(args),
        mEGLDisplay(display),
        mEGLConfig(config),
        mEGLContext(ctxt),
        mDummySurface(dummy),
        mProtectedEGLContext(protectedContext),
        mProtectedDummySurface(protectedDummy),
        mVpWidth(0),
        mVpHeight(0),
        mFramebufferImageCacheSize(args.imageCacheSize),
        mUseColorManagement(args.useColorManagement) {
    // Query the maximum supported texture size and viewport dimensions
    glGetIntegerv(GL_MAX_TEXTURE_SIZE, &mMaxTextureSize);
    glGetIntegerv(GL_MAX_VIEWPORT_DIMS, mMaxViewportDims);

    // Pixel data rows are 4-byte aligned
    glPixelStorei(GL_UNPACK_ALIGNMENT, 4);
    glPixelStorei(GL_PACK_ALIGNMENT, 4);
    ...
    // Color-space setup; analyzed later when a concrete scenario calls for it
    if (mUseColorManagement) {
        const ColorSpace srgb(ColorSpace::sRGB());
        const ColorSpace displayP3(ColorSpace::DisplayP3());
        const ColorSpace bt2020(ColorSpace::BT2020());

        // no chromatic adaptation needed since all color spaces use D65 for their white points.
        mSrgbToXyz = mat4(srgb.getRGBtoXYZ());
        mDisplayP3ToXyz = mat4(displayP3.getRGBtoXYZ());
        mBt2020ToXyz = mat4(bt2020.getRGBtoXYZ());
        mXyzToSrgb = mat4(srgb.getXYZtoRGB());
        mXyzToDisplayP3 = mat4(displayP3.getXYZtoRGB());
        mXyzToBt2020 = mat4(bt2020.getXYZtoRGB());

        // Compute sRGB to Display P3 and BT2020 transform matrix.
        // NOTE: For now, we are limiting output wide color space support to
        // Display-P3 and BT2020 only.
        mSrgbToDisplayP3 = mXyzToDisplayP3 * mSrgbToXyz;
        mSrgbToBt2020 = mXyzToBt2020 * mSrgbToXyz;

        // Compute Display P3 to sRGB and BT2020 transform matrix.
        mDisplayP3ToSrgb = mXyzToSrgb * mDisplayP3ToXyz;
        mDisplayP3ToBt2020 = mXyzToBt2020 * mDisplayP3ToXyz;

        // Compute BT2020 to sRGB and Display P3 transform matrix
        mBt2020ToSrgb = mXyzToSrgb * mBt2020ToXyz;
        mBt2020ToDisplayP3 = mXyzToDisplayP3 * mBt2020ToXyz;
    }
    ...
    // Only relevant for layers with blur; analyzed later in a concrete scenario
    if (args.supportsBackgroundBlur) {
        mBlurFilter = new BlurFilter(*this);
        checkErrors("BlurFilter creation");
    }

    // Create the ImageManager thread, which manages the EGLImages of input buffers
    mImageManager = std::make_unique(this);
    mImageManager->initThread();
    // Create the GLFramebuffer
    mDrawingBuffer = createFramebuffer();
    ...
}
```

File: frameworks/native/libs/renderengine/gl/GLFramebuffer.cpp

```cpp
// Creates a texture ID (mTextureName) and a framebuffer object ID (mFramebufferName)
GLFramebuffer::GLFramebuffer(GLESRenderEngine& engine)
      : mEngine(engine), mEGLDisplay(engine.getEGLDisplay()), mEGLImage(EGL_NO_IMAGE_KHR) {
    glGenTextures(1, &mTextureName);
    glGenFramebuffers(1, &mFramebufferName);
}
```

The code above shows three things happening at startup. First, the EGL environment is set up and the context is made current on this thread, so GL commands can be issued later. Second, the ImageManager thread is created to manage the EGLImages of the input buffers. Third, a GLFramebuffer is created to operate on the output buffer.

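One more point deserves special attention: a texture ID is already created for each layer when its BufferQueueLayer is created, in preparation for GPU composition later. See below: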

File: frameworks/native/services/surfaceflinger/SurfaceFlinger.cpp

```cpp
status_t SurfaceFlinger::createBufferQueueLayer(const sp& client, std::string name,
                                                uint32_t w, uint32_t h, uint32_t flags,
                                                LayerMetadata metadata, PixelFormat& format,
                                                sp* handle,
                                                sp* gbp,
                                                sp* outLayer) {
    ...
    args.textureName = getNewTexture();
    ...
}

uint32_t SurfaceFlinger::getNewTexture() {
    {
        std::lock_guard lock(mTexturePoolMutex);
        if (!mTexturePool.empty()) {
            uint32_t name = mTexturePool.back();
            mTexturePool.pop_back();
            ATRACE_INT("TexturePoolSize", mTexturePool.size());
            return name;
        }

        // The pool was too small, so increase it for the future
        ++mTexturePoolSize;
    }

    // The pool was empty, so we need to get a new texture name directly using a
    // blocking call to the main thread
    // genTextures (glGenTextures) creates the layer's texture ID; schedule() runs it on the SF main thread
    return schedule([this] {
               uint32_t name = 0;
               getRenderEngine().genTextures(1, &name);
               return name;
           })
            .get();
}
```

3. Creating and initializing FramebufferSurface

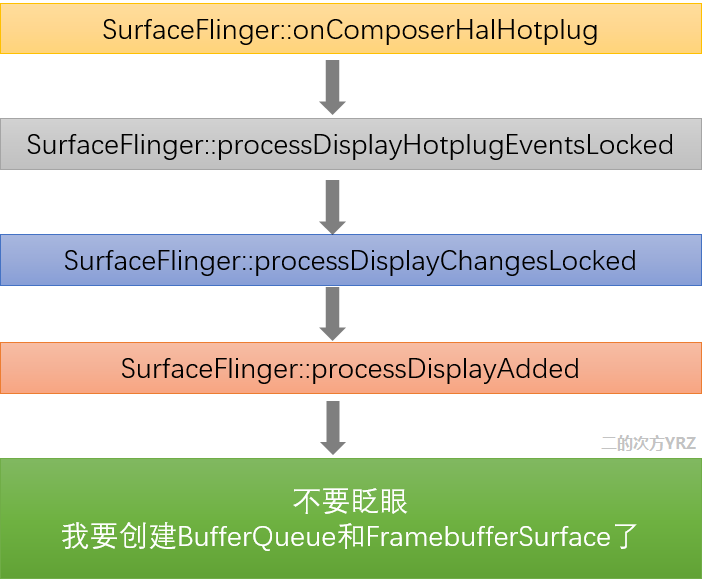

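To understand how FramebufferSurface is initialized, we have to start from SurfaceFlinger's own initialization. SurfaceFlinger::init() registers the HWC callbacks through mCompositionEngine->getHwComposer().setCallback(this). When the callback is registered for the first time, onComposerHalHotplug() is invoked immediately on the thread that called registerCallback(). This happens through a cross-process callback into SurfaceFlinger::onComposerHalHotplug. From there the call chain runs straight through: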

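SurfaceFlinger::processDisplayAdded creates the BufferQueue and the FramebufferSurface. Put simply, once a display is connected it needs a BufferQueue. When the GPU composes the UI and other layers, it asks this BufferQueue for a GraphicBuffer to hold the composed result, which is then presented on the screen (my simplified understanding).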

The key code is excerpted below:

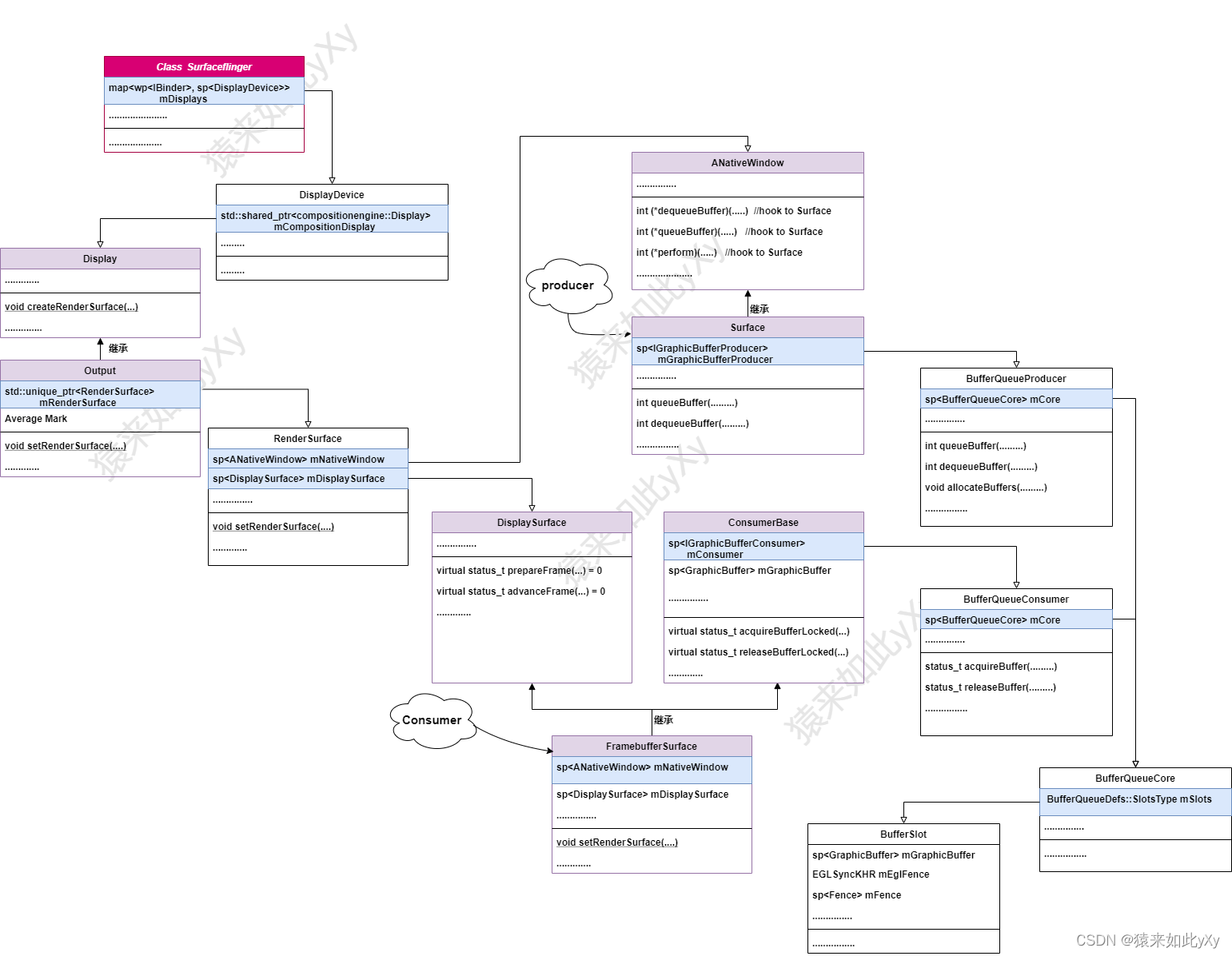

[/frameworks/native/services/surfaceflinger/SurfaceFlinger.cpp]

```cpp
void SurfaceFlinger::processDisplayAdded(const wp& displayToken,
                                         const DisplayDeviceState& state) {
    ......
    sp displaySurface;
    sp producer;
    // Create the BufferQueue and get its producer and consumer; note that the consumer is NOT SurfaceFlinger
    sp bqProducer;
    sp bqConsumer;
    getFactory().createBufferQueue(&bqProducer, &bqConsumer, /*consumerIsSurfaceFlinger =*/false);

    if (state.isVirtual()) { // Virtual display; ignored here
        const auto displayId = VirtualDisplayId::tryCast(compositionDisplay->getId());
        LOG_FATAL_IF(!displayId);
        auto surface = sp::make(getHwComposer(), *displayId, state.surface,
                                bqProducer, bqConsumer, state.displayName);
        displaySurface = surface;
        producer = std::move(surface);
    } else { // This is the case we care about
        ALOGE_IF(state.surface != nullptr,
                 "adding a supported display, but rendering "
                 "surface is provided (%p), ignoring it",
                 state.surface.get());
        const auto displayId = PhysicalDisplayId::tryCast(compositionDisplay->getId());
        LOG_FATAL_IF(!displayId);
        // Create the FramebufferSurface object; FramebufferSurface derives from compositionengine::DisplaySurface.
        // FramebufferSurface works as the consumer, consuming the output of SF's GPU composition
        displaySurface =
                sp::make(getHwComposer(), *displayId, bqConsumer,
                         state.physical->activeMode->getSize(),
                         ui::Size(maxGraphicsWidth, maxGraphicsHeight));
        producer = bqProducer;
    }

    LOG_FATAL_IF(!displaySurface);
    // Create the DisplayDevice, which in turn creates the RenderSurface that works as the producer;
    // displaySurface is the FramebufferSurface object
    const auto display = setupNewDisplayDeviceInternal(displayToken, std::move(compositionDisplay),
                                                       state, displaySurface, producer);
    mDisplays.emplace(displayToken, display);
    ......
}
```

Now take a look at the FramebufferSurface constructor. There is nothing complicated in it: it only applies some settings and initializes a few members.

```cpp
FramebufferSurface::FramebufferSurface(HWComposer& hwc, PhysicalDisplayId displayId,
                                       const sp& consumer,
                                       const ui::Size& size, const ui::Size& maxSize)
      : ConsumerBase(consumer),
        mDisplayId(displayId),
        mMaxSize(maxSize),
        mCurrentBufferSlot(-1),
        mCurrentBuffer(),
        mCurrentFence(Fence::NO_FENCE),
        mHwc(hwc),
        mHasPendingRelease(false),
        mPreviousBufferSlot(BufferQueue::INVALID_BUFFER_SLOT),
        mPreviousBuffer() {
    ALOGV("Creating for display %s", to_string(displayId).c_str());

    mName = "FramebufferSurface";
    mConsumer->setConsumerName(mName); // Set the consumer name to "FramebufferSurface"
    mConsumer->setConsumerUsageBits(GRALLOC_USAGE_HW_FB | // Set the consumer usage bits
                                    GRALLOC_USAGE_HW_RENDER |
                                    GRALLOC_USAGE_HW_COMPOSER);
    const auto limitedSize = limitSize(size);
    mConsumer->setDefaultBufferSize(limitedSize.width, limitedSize.height); // Set the buffer size
    mConsumer->setMaxAcquiredBufferCount(
            SurfaceFlinger::maxFrameBufferAcquiredBuffers - 1);
}
```

Next, let's step into SurfaceFlinger::setupNewDisplayDeviceInternal and look at the relevant logic:

[/frameworks/native/services/surfaceflinger/SurfaceFlinger.cpp]

```cpp
sp SurfaceFlinger::setupNewDisplayDeviceInternal(
        const wp& displayToken,
        std::shared_ptr compositionDisplay,
        const DisplayDeviceState& state,
        const sp& displaySurface,
        const sp& producer) {
    ......
    creationArgs.displaySurface = displaySurface; // displaySurface is the FramebufferSurface object
    // producer was created earlier in processDisplayAdded
    auto nativeWindowSurface = getFactory().createNativeWindowSurface(producer);
    auto nativeWindow = nativeWindowSurface->getNativeWindow();
    creationArgs.nativeWindow = nativeWindow;
    ....
    // The large block of code above fills in creationArgs, which are used to create the DisplayDevice.
    // creationArgs.nativeWindow ties the producer created earlier to the DisplayDevice
    sp display = getFactory().createDisplayDevice(creationArgs);
    // The large block below applies some settings to the display
    if (!state.isVirtual()) {
        display->setActiveMode(state.physical->activeMode->getId());
        display->setDeviceProductInfo(state.physical->deviceProductInfo);
    }
    ....
}
```

Next comes the DisplayDevice constructor, which mainly creates the RenderSurface object and then initializes it:

[/frameworks/native/services/surfaceflinger/DisplayDevice.cpp]

```cpp
DisplayDevice::DisplayDevice(DisplayDeviceCreationArgs& args)
      : mFlinger(args.flinger),
        mHwComposer(args.hwComposer),
        mDisplayToken(args.displayToken),
        mSequenceId(args.sequenceId),
        mConnectionType(args.connectionType),
        mCompositionDisplay{args.compositionDisplay},
        mPhysicalOrientation(args.physicalOrientation),
        mSupportedModes(std::move(args.supportedModes)),
        mIsPrimary(args.isPrimary) {
    mCompositionDisplay->editState().isSecure = args.isSecure;
    // Create the RenderSurface; args.nativeWindow wraps the producer
    mCompositionDisplay->createRenderSurface(
            compositionengine::RenderSurfaceCreationArgsBuilder()
                    .setDisplayWidth(ANativeWindow_getWidth(args.nativeWindow.get()))
                    .setDisplayHeight(ANativeWindow_getHeight(args.nativeWindow.get()))
                    .setNativeWindow(std::move(args.nativeWindow))
                    .setDisplaySurface(std::move(args.displaySurface)) // the FramebufferSurface object
                    .setMaxTextureCacheSize(
                            static_cast(SurfaceFlinger::maxFrameBufferAcquiredBuffers))
                    .build());

    if (!mFlinger->mDisableClientCompositionCache &&
        SurfaceFlinger::maxFrameBufferAcquiredBuffers > 0) {
        mCompositionDisplay->createClientCompositionCache(
                static_cast(SurfaceFlinger::maxFrameBufferAcquiredBuffers));
    }

    mCompositionDisplay->createDisplayColorProfile(
            compositionengine::DisplayColorProfileCreationArgs{args.hasWideColorGamut,
                                                               std::move(args.hdrCapabilities),
                                                               args.supportedPerFrameMetadata,
                                                               args.hwcColorModes});

    if (!mCompositionDisplay->isValid()) {
        ALOGE("Composition Display did not validate!");
    }

    // Initialize the RenderSurface
    mCompositionDisplay->getRenderSurface()->initialize();

    setPowerMode(args.initialPowerMode);

    // initialize the display orientation transform.
    setProjection(ui::ROTATION_0, Rect::INVALID_RECT, Rect::INVALID_RECT);
}
```

RenderSurface works as the producer. Its constructor is shown below. Note that its member displaySurface is the FramebufferSurface object created in SurfaceFlinger.

In other words, RenderSurface, the producer, holds a reference to the consumer (displaySurface) and can call FramebufferSurface's methods.

[/frameworks/native/services/surfaceflinger/CompositionEngine/src/RenderSurface.cpp]

```cpp
RenderSurface::RenderSurface(const CompositionEngine& compositionEngine, Display& display,
                             const RenderSurfaceCreationArgs& args)
      : mCompositionEngine(compositionEngine),
        mDisplay(display),
        mNativeWindow(args.nativeWindow),
        mDisplaySurface(args.displaySurface), // displaySurface is the FramebufferSurface object
        mSize(args.displayWidth, args.displayHeight),
        mMaxTextureCacheSize(args.maxTextureCacheSize) {
    LOG_ALWAYS_FATAL_IF(!mNativeWindow);
}
```

Now let's look at its RenderSurface::initialize() method:

[/frameworks/native/services/surfaceflinger/CompositionEngine/src/RenderSurface.cpp]

```cpp
void RenderSurface::initialize() {
    ANativeWindow* const window = mNativeWindow.get();

    int status = native_window_api_connect(window, NATIVE_WINDOW_API_EGL);
    ALOGE_IF(status != NO_ERROR, "Unable to connect BQ producer: %d", status);
    status = native_window_set_buffers_format(window, HAL_PIXEL_FORMAT_RGBA_8888);
    ALOGE_IF(status != NO_ERROR, "Unable to set BQ format to RGBA888: %d", status);
    status = native_window_set_usage(window, DEFAULT_USAGE);
    ALOGE_IF(status != NO_ERROR, "Unable to set BQ usage bits for GPU rendering: %d", status);
}
```

This method is also simple. Acting as the producer, it connects to the BufferQueue, sets the format to RGBA_8888, and sets the usage to GRALLOC_USAGE_HW_RENDER | GRALLOC_USAGE_HW_TEXTURE (DEFAULT_USAGE).
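The usage values in the dumps can be derived from the producer-side usage set here and the consumer-side usage set in the FramebufferSurface constructor. The minimal C++ sketch below assumes the legacy gralloc usage bit values from `hardware/libhardware/include/hardware/gralloc.h`:

- HW_TEXTURE = 0x100
- HW_RENDER = 0x200
- HW_COMPOSER = 0x800
- HW_FB = 0x1000

The `k...` constant names are hypothetical stand-ins for those macros. As far as I know, BufferQueueProducer::dequeueBuffer ORs `mConsumerUsageBits` into the usage requested by the producer. So buffers are allocated with the union of both sides' bits.

```cpp
#include <cassert>
#include <cstdint>

// Legacy gralloc usage bits (values as defined in gralloc.h; the names here are hypothetical).
constexpr std::uint64_t kUsageHwTexture = 0x00000100;   // GRALLOC_USAGE_HW_TEXTURE
constexpr std::uint64_t kUsageHwRender = 0x00000200;    // GRALLOC_USAGE_HW_RENDER
constexpr std::uint64_t kUsageHwComposer = 0x00000800;  // GRALLOC_USAGE_HW_COMPOSER
constexpr std::uint64_t kUsageHwFb = 0x00001000;        // GRALLOC_USAGE_HW_FB

// Producer side: RenderSurface::initialize() sets DEFAULT_USAGE = HW_RENDER | HW_TEXTURE.
constexpr std::uint64_t kProducerUsage = kUsageHwRender | kUsageHwTexture;

// Consumer side: the FramebufferSurface constructor sets HW_FB | HW_RENDER | HW_COMPOSER.
constexpr std::uint64_t kConsumerUsage = kUsageHwFb | kUsageHwRender | kUsageHwComposer;

// Usage the buffers end up allocated with: the union of producer and consumer bits.
constexpr std::uint64_t kAllocUsage = kProducerUsage | kConsumerUsage;

static_assert(kConsumerUsage == 6656, "matches mConsumerUsageBits=6656 in the BufferQueue dump");
static_assert(kAllocUsage == 0x1b00, "matches Usage 0x1b00 in the GraphicBufferAllocator dump");
```

Both numbers that show up in the dumps, 0x1b00 in the allocator list and mConsumerUsageBits=6656 (0x1a00) in the BufferQueue state, fall out of these few OR operations.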

To verify that the flow analyzed above is correct, I added a log in BufferQueueProducer::connect that prints the call stack. As shown below, it matches the analysis:

```
11-13 00:52:58.497 227 227 D BufferQueueProducer: connect[1303] /vendor/bin/hw/android.hardware.graphics.composer@2.4-service start
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#00 pc 0005e77f /system/lib/libgui.so (android::BufferQueueProducer::connect(android::sp const&, int, bool, android::IGraphicBufferProducer::QueueBufferOutput*)+1282)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#01 pc 000a276b /system/lib/libgui.so (android::Surface::connect(int, android::sp const&, bool)+138)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#02 pc 0009de41 /system/lib/libgui.so (android::Surface::hook_perform(ANativeWindow*, int, ...)+128)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#03 pc 00121b1d /system/bin/surfaceflinger (android::compositionengine::impl::RenderSurface::initialize()+12)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#04 pc 00083cc5 /system/bin/surfaceflinger (android::DisplayDevice::DisplayDevice(android::DisplayDeviceCreationArgs&)+1168)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#05 pc 000d8bed /system/bin/surfaceflinger (android::SurfaceFlinger::processDisplayAdded(android::wp const&, android::DisplayDeviceState const&)+4440)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#06 pc 000d0db5 /system/bin/surfaceflinger (android::SurfaceFlinger::processDisplayChangesLocked()+2436)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#07 pc 000cef6b /system/bin/surfaceflinger (android::SurfaceFlinger::processDisplayHotplugEventsLocked()+6422)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#08 pc 000d2c7f /system/bin/surfaceflinger (android::SurfaceFlinger::onComposerHalHotplug(unsigned long long, android::hardware::graphics::composer::V2_1::IComposerCallback::Connection)+334)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#09 pc 0009afab /system/bin/surfaceflinger (_ZN7android12_GLOBAL__N_122ComposerCallbackBridge9onHotplugEyNS_8hardware8graphics8composer4V2_117IComposerCallback10ConnectionE$d689f7ac1c60e4abeed02ca92a51bdcd+20)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#10 pc 0001bb97 /system/lib/android.hardware.graphics.composer@2.1.so (android::hardware::graphics::composer::V2_1::BnHwComposerCallback::_hidl_onHotplug(android::hidl::base::V1_0::BnHwBase*, android::hardware::Parcel const&, android::hardware::Parcel*, std::__1::function)+166)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#11 pc 000275e9 /system/lib/android.hardware.graphics.composer@2.4.so (android::hardware::graphics::composer::V2_4::BnHwComposerCallback::onTransact(unsigned int, android::hardware::Parcel const&, android::hardware::Parcel*, unsigned int, std::__1::function)+228)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#12 pc 00054779 /system/lib/libhidlbase.so (android::hardware::BHwBinder::transact(unsigned int, android::hardware::Parcel const&, android::hardware::Parcel*, unsigned int, std::__1::function)+96)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#13 pc 0004fc67 /system/lib/libhidlbase.so (android::hardware::IPCThreadState::transact(int, unsigned int, android::hardware::Parcel const&, android::hardware::Parcel*, unsigned int)+2174)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#14 pc 0004f2e5 /system/lib/libhidlbase.so (android::hardware::BpHwBinder::transact(unsigned int, android::hardware::Parcel const&, android::hardware::Parcel*, unsigned int, std::__1::function)+36)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#15 pc 0002bdf1 /system/lib/android.hardware.graphics.composer@2.4.so (android::hardware::graphics::composer::V2_4::BpHwComposerClient::_hidl_registerCallback_2_4(android::hardware::IInterface*, android::hardware::details::HidlInstrumentor*, android::sp const&)+296)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#16 pc 0002ed8d /system/lib/android.hardware.graphics.composer@2.4.so (android::hardware::graphics::composer::V2_4::BpHwComposerClient::registerCallback_2_4(android::sp const&)+34)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#17 pc 00085627 /system/bin/surfaceflinger (android::Hwc2::impl::Composer::registerCallback(android::sp const&)+98)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#18 pc 00092d63 /system/bin/surfaceflinger (android::impl::HWComposer::setCallback(android::HWC2::ComposerCallback*)+2206)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#19 pc 000cd35b /system/bin/surfaceflinger (android::SurfaceFlinger::init()+438)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#20 pc 000feb03 /system/bin/surfaceflinger (main+862)
11-13 00:52:58.581 227 227 E BufferQueueProducer: stackdump:#21 pc 0003253b /apex/com.android.runtime/lib/bionic/libc.so (__libc_init+54)
11-13 00:52:58.582 227 227 D BufferQueueProducer: connect[1307] /vendor/bin/hw/android.hardware.graphics.composer@2.4-service end
```

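One small detail deserves attention here: SurfaceFlinger::onComposerHalHotplug is a callback from HWC. The stack shows two things on the same thread:

- Frames #13 to #19: SurfaceFlinger::init() is still waiting inside the registerCallback_2_4() transaction.
- Frames #08 to #12: the composer service's onHotplug call is being handled.

So the code itself runs in the surfaceflinger process. However, the binder calling PID at this point belongs to android.hardware.graphics.composer@2.4-service.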

As a result, the mConnectedPid recorded in BufferQueueProducer::connect is the PID of the composer service:

[/frameworks/native/libs/gui/BufferQueueProducer.cpp]

```cpp
mCore->mConnectedPid = BufferQueueThreadState::getCallingPid();
```

When the BufferQueue state is dumped, the producer name looked up from that PID is therefore android.hardware.graphics.composer@2.4-service:

[/frameworks/native/libs/gui/BufferQueueCore.cpp]

```cpp
void BufferQueueCore::dumpState(const String8& prefix, String8* outResult) const {
    ...
    getProcessName(mConnectedPid, producerProcName);
    getProcessName(pid, consumerProcName);
    ....
}
```

Below is the Composition RenderSurface State printed by dumpsys SurfaceFlinger on my platform. Let's check whether it matches the settings in the code:

```
mConsumerName=FramebufferSurface producer=[342:/vendor/bin/hw/android.hardware.graphics.composer@2.4-service] consumer=[223:/system/bin/surfaceflinger])
```

The format, size and usage also match the settings in the code:

```
Composition RenderSurface State:
   size=[1920 1080] ANativeWindow=0xef2c3278 (format 1) flips=605
   FramebufferSurface: dataspace: Default(0)
   mAbandoned=0
   - BufferQueue mMaxAcquiredBufferCount=2 mMaxDequeuedBufferCount=1
     mDequeueBufferCannotBlock=0 mAsyncMode=0
     mQueueBufferCanDrop=0 mLegacyBufferDrop=1
     default-size=[1920x1080] default-format=1 transform-hint=00 frame-counter=580
     mTransformHintInUse=00 mAutoPrerotation=0
   FIFO(0):
   (mConsumerName=FramebufferSurface, mConnectedApi=1, mConsumerUsageBits=6656, mId=df00000000, producer=[342:/vendor/bin/hw/android.hardware.graphics.composer@2.4-service], consumer=[223:/system/bin/surfaceflinger])
   Slots:
    >[01:0xeec82110] state=ACQUIRED 0xef4429c0 frame=2 [1920x1080:1920, 1]
    >[02:0xeec806f0] state=ACQUIRED 0xef443100 frame=580 [1920x1080:1920, 1]
     [00:0xeec81f00] state=FREE 0xef440580 frame=579 [1920x1080:1920, 1]
```

4. Summary of RenderSurface and FramebufferSurface

The terms that came up above can't help but set the imagination running:

SurfaceFlinger creates a BufferQueue ==> Producer & Consumer

It creates RenderSurface as the producer, which holds the Producer

It creates FramebufferSurface as the consumer, which holds the Consumer

When we analyzed how BufferQueue works earlier, we said:

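The producer keeps calling dequeueBuffer & queueBuffer, while the consumer keeps calling acquireBuffer & releaseBuffer. In this way graphic buffers circulate from the producer through the BufferQueue to the consumer and back.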
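To make this circulation concrete, here is a toy model of the slot states a buffer goes through: FREE, then DEQUEUED, QUEUED, ACQUIRED and back to FREE. It is a sketch under stated assumptions, not libgui's BufferQueue:

- `ToyBufferQueue` and `SlotState` are hypothetical.
- Fences and blocking are omitted.
- The acquire limit is a simplification of the real consumer's rules.

The parameters mirror the dump shown earlier: three slots and mMaxAcquiredBufferCount=2.

```cpp
#include <array>
#include <cassert>
#include <deque>
#include <optional>

// Toy slot states (hypothetical; modeled on the states printed in the dump).
enum class SlotState { Free, Dequeued, Queued, Acquired };

class ToyBufferQueue {
public:
    static constexpr int kSlots = 3;

    explicit ToyBufferQueue(int maxAcquired) : mMaxAcquired(maxAcquired) {
        mStates.fill(SlotState::Free);
    }

    // Producer side (RenderSurface): grab a FREE slot to render into.
    std::optional<int> dequeueBuffer() {
        for (int i = 0; i < kSlots; ++i) {
            if (mStates[i] == SlotState::Free) {
                mStates[i] = SlotState::Dequeued;
                return i;
            }
        }
        return std::nullopt;  // no free slot; the real queue may block here instead
    }

    // Producer side: hand a rendered slot to the consumer in FIFO order.
    void queueBuffer(int slot) {
        assert(mStates[slot] == SlotState::Dequeued);
        mStates[slot] = SlotState::Queued;
        mFifo.push_back(slot);
    }

    // Consumer side (FramebufferSurface): take the oldest queued slot, respecting the limit.
    std::optional<int> acquireBuffer() {
        if (mFifo.empty() || acquiredCount() >= mMaxAcquired) return std::nullopt;
        const int slot = mFifo.front();
        mFifo.pop_front();
        mStates[slot] = SlotState::Acquired;
        return slot;
    }

    // Consumer side: return the slot so the producer can reuse it.
    void releaseBuffer(int slot) {
        assert(mStates[slot] == SlotState::Acquired);
        mStates[slot] = SlotState::Free;
    }

    SlotState state(int slot) const { return mStates[slot]; }

    int acquiredCount() const {
        int n = 0;
        for (SlotState s : mStates) n += (s == SlotState::Acquired) ? 1 : 0;
        return n;
    }

private:
    std::array<SlotState, kSlots> mStates{};
    std::deque<int> mFifo;
    int mMaxAcquired;
};
```

With three slots and at most two acquired, the consumer can keep the buffer currently on screen plus the next one while the producer renders into the third. This is consistent with the Slots section of the dump above (two ACQUIRED, one FREE).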

Let's look at the methods of RenderSurface, the producer:

[/frameworks/native/services/surfaceflinger/CompositionEngine/include/compositionengine/RenderSurface.h]

```cpp
/**
 * Encapsulates everything for composing to a render surface with RenderEngine
 */
class RenderSurface {
    ....
    // Allocates a buffer as scratch space for GPU composition
    virtual std::shared_ptr dequeueBuffer(
            base::unique_fd* bufferFence) = 0;

    // Queues the drawn buffer for consumption by HWC. readyFence is the fence
    // which will fire when the buffer is ready for consumption.
    virtual void queueBuffer(base::unique_fd readyFence) = 0;
    ...
};
```

That looks familiar:

dequeueBuffer: allocates a buffer as scratch space for GPU composition

queueBuffer: queues the drawn buffer for HWC to consume

Likewise, if you look at the consumer FramebufferSurface, you will find calls to acquireBuffer & releaseBuffer, for example:

[/frameworks/native/services/surfaceflinger/DisplayHardware/FramebufferSurface.cpp]

```cpp
status_t FramebufferSurface::nextBuffer(uint32_t& outSlot,
        sp& outBuffer, sp& outFence,
        Dataspace& outDataspace) {
    Mutex::Autolock lock(mMutex);
    BufferItem item;
    status_t err = acquireBufferLocked(&item, 0); // Acquire the buffer to be displayed
    ...
    // Pass the buffer to HWC for further processing and display
    status_t result = mHwc.setClientTarget(mDisplayId, outSlot, outFence, outBuffer, outDataspace);
    return NO_ERROR;
}
```

So in the end we arrive at roughly the following processing flow:

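- When GPU composition is needed, the producer requests a graphic buffer through RenderSurface::dequeueBuffer. The GPU then composes/draws into that buffer, and the buffer is submitted through RenderSurface::queueBuffer.

- mDisplaySurface->advanceFrame() is called to notify the consumer to consume:

  FramebufferSurface::advanceFrame ==> FramebufferSurface::nextBuffer ==> acquireBufferLocked

- acquireBufferLocked acquires the available buffer, which holds the result of GPU composition. setClientTarget then passes this buffer to HWC for processing and display.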
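The three steps above can be traced with a small simulation that only records the order of calls. Everything in it is hypothetical: `RenderSurfaceSketch`, `FramebufferSurfaceSketch`, `FakeHwc` and `simulateFrames`. The real classes also pass fences, dataspaces and buffer handles. The real producer also uses whatever slot the BufferQueue hands out, rather than the fixed round-robin assumed here.

```cpp
#include <cassert>
#include <deque>
#include <string>
#include <vector>

using CallLog = std::vector<std::string>;

// Stand-in for HWC: records which slot was set as the client target.
struct FakeHwc {
    CallLog* log;
    void setClientTarget(int slot) {
        log->push_back("setClientTarget(" + std::to_string(slot) + ")");
    }
};

// The queue shared by producer and consumer (the BufferQueue's role).
struct SharedQueue {
    std::deque<int> queued;  // slots queued by the producer, oldest first
};

// Producer role (RenderSurface): dequeue a slot, later queue it back.
struct RenderSurfaceSketch {
    SharedQueue* queue;
    CallLog* log;
    int nextSlot = 0;  // simplification: hand out slots 0, 1, 2 round-robin

    int dequeueBuffer() {
        log->push_back("dequeueBuffer");
        const int slot = nextSlot;
        nextSlot = (nextSlot + 1) % 3;
        return slot;
    }
    void queueBuffer(int slot) {
        log->push_back("queueBuffer");
        queue->queued.push_back(slot);
    }
};

// Consumer role (FramebufferSurface): advanceFrame -> nextBuffer -> acquire -> setClientTarget.
struct FramebufferSurfaceSketch {
    SharedQueue* queue;
    FakeHwc* hwc;
    CallLog* log;

    void advanceFrame() {
        log->push_back("advanceFrame");
        nextBuffer();
    }
    void nextBuffer() {
        if (queue->queued.empty()) return;  // nothing queued yet
        const int slot = queue->queued.front();  // acquireBufferLocked in the real code
        queue->queued.pop_front();
        log->push_back("acquireBuffer");
        hwc->setClientTarget(slot);
    }
};

// Runs `frames` GPU-composed frames and returns the recorded call order.
CallLog simulateFrames(int frames) {
    CallLog log;
    SharedQueue queue;
    FakeHwc hwc{&log};
    RenderSurfaceSketch producer{&queue, &log};
    FramebufferSurfaceSketch consumer{&queue, &hwc, &log};
    for (int i = 0; i < frames; ++i) {
        const int slot = producer.dequeueBuffer();
        log.push_back("drawLayers");  // RenderEngine would render into `slot` here
        producer.queueBuffer(slot);
        consumer.advanceFrame();
    }
    return log;
}
```

For one frame, `simulateFrames(1)` records dequeueBuffer, drawLayers, queueBuffer, advanceFrame, acquireBuffer and setClientTarget(0), in that order. This is the same sequence as the three steps listed above.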

5. How SF handles GPU composition

Remember Output::prepareFrame, which we analyzed earlier? When GPU composition is involved, it runs the following logic:

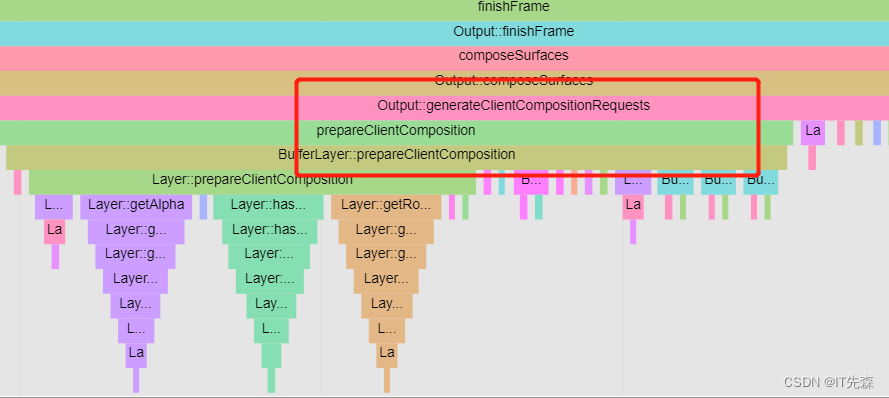

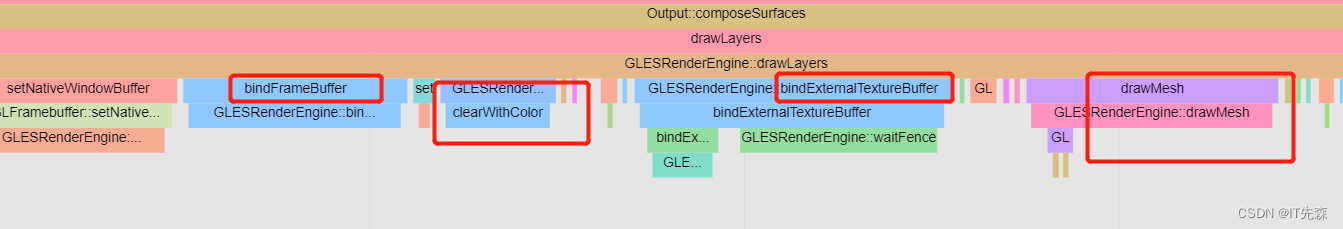

```
Output::prepareFrame()
Display::chooseCompositionStrategy
Output::chooseCompositionStrategy()
hwc.getDeviceCompositionChanges
```

```cpp
status_t HWComposer::getDeviceCompositionChanges(
        DisplayId displayId, bool frameUsesClientComposition,
        std::optional* outChanges) {
    ...
    if (!frameUsesClientComposition) {
        sp outPresentFence;
        uint32_t state = UINT32_MAX;
        /**
         * If every layer can use device composition, HWC presents directly here.
         * If some layer cannot use device composition, GPU composition is needed
         * and the validate path is taken instead.
         */
        error = hwcDisplay->presentOrValidate(&numTypes, &numRequests, &outPresentFence , &state);
        if (!hasChangesError(error)) {
            RETURN_IF_HWC_ERROR_FOR("presentOrValidate", error, displayId, UNKNOWN_ERROR);
        }
        if (state == 1) { //Present Succeeded.
            // Present succeeded: the frame was handed directly to HWC
            std::unordered_map releaseFences;
            error = hwcDisplay->getReleaseFences(&releaseFences);
            displayData.releaseFences = std::move(releaseFences);
            displayData.lastPresentFence = outPresentFence;
            displayData.validateWasSkipped = true;
            displayData.presentError = error;
            return NO_ERROR;
        }
        // Present failed but Validate ran.
    } else {
        error = hwcDisplay->validate(&numTypes, &numRequests);
    }
    ALOGV("SkipValidate failed, Falling back to SLOW validate/present");
    if (!hasChangesError(error)) {
        RETURN_IF_HWC_ERROR_FOR("validate", error, displayId, BAD_INDEX);
    }

    android::HWComposer::DeviceRequestedChanges::ChangedTypes changedTypes;
    changedTypes.reserve(numTypes);
    error = hwcDisplay->getChangedCompositionTypes(&changedTypes);
    RETURN_IF_HWC_ERROR_FOR("getChangedCompositionTypes", error, displayId, BAD_INDEX);

    auto displayRequests = static_cast(0);
    android::HWComposer::DeviceRequestedChanges::LayerRequests layerRequests;
    layerRequests.reserve(numRequests);
    error = hwcDisplay->getRequests(&displayRequests, &layerRequests);
    RETURN_IF_HWC_ERROR_FOR("getRequests", error, displayId, BAD_INDEX);

    DeviceRequestedChanges::ClientTargetProperty clientTargetProperty;
    error = hwcDisplay->getClientTargetProperty(&clientTargetProperty);

    outChanges->emplace(DeviceRequestedChanges{std::move(changedTypes),
                                               std::move(displayRequests),
                                               std::move(layerRequests),
                                               std::move(clientTargetProperty)});
    // Accept the changes reported back by HWC (which layers are composed by the device and which by the GPU)
    error = hwcDisplay->acceptChanges();
    RETURN_IF_HWC_ERROR_FOR("acceptChanges", error, displayId, BAD_INDEX);

    return NO_ERROR;
}
```

We also analyzed Output::finishFrame earlier. The composeSurfaces call inside it is the core of GPU composition:

```cpp
void Output::finishFrame(const compositionengine::CompositionRefreshArgs& refreshArgs) {
    ...
    auto optReadyFence = composeSurfaces(Region::INVALID_REGION, refreshArgs);
    if (!optReadyFence) {
        return;
    }

    // swap buffers (presentation)
    mRenderSurface->queueBuffer(std::move(*optReadyFence));
}
```

5.1 Output::composeSurfaces

Let's first focus on composeSurfaces and look at the logic of the GPU composition path:

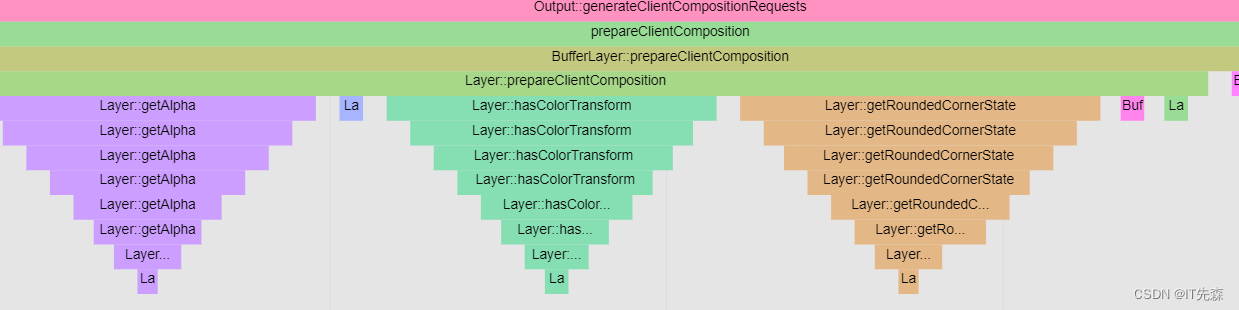

File: frameworks/native/services/surfaceflinger/CompositionEngine/src/Output.cpp

```cpp
std::optional Output::composeSurfaces(
        const Region& debugRegion, const compositionengine::CompositionRefreshArgs& refreshArgs) {
    ...
    base::unique_fd fd;
    sp buf;

    // If we aren't doing client composition on this output, but do have a
    // flipClientTarget request for this frame on this output, we still need to
    // dequeue a buffer.
    if (hasClientComposition || outputState.flipClientTarget) {
        // Dequeue a buffer; it serves as the output of composition
        buf = mRenderSurface->dequeueBuffer(&fd);
        if (buf == nullptr) {
            ALOGW("Dequeuing buffer for display [%s] failed, bailing out of "
                  "client composition for this frame",
                  mName.c_str());
            return {};
        }
    }

    base::unique_fd readyFence;
    // Without client (GPU) composition we return here; with GPU composition we continue
    if (!hasClientComposition) {
        setExpensiveRenderingExpected(false);
        return readyFence;
    }

    ALOGV("hasClientComposition");

    // Fill in clientCompositionDisplay: the display-related parameters
    renderengine::DisplaySettings clientCompositionDisplay;
    clientCompositionDisplay.physicalDisplay = outputState.destinationClip;
    clientCompositionDisplay.clip = outputState.sourceClip;
    clientCompositionDisplay.orientation = outputState.orientation;
    clientCompositionDisplay.outputDataspace = mDisplayColorProfile->hasWideColorGamut()
            ? outputState.dataspace
            : ui::Dataspace::UNKNOWN;
    clientCompositionDisplay.maxLuminance =
            mDisplayColorProfile->getHdrCapabilities().getDesiredMaxLuminance();

    // Compute the global color transform matrix.
    if (!outputState.usesDeviceComposition && !getSkipColorTransform()) {
        clientCompositionDisplay.colorTransform = outputState.colorTransformMatrix;
    }

    // Note: Updated by generateClientCompositionRequests
    clientCompositionDisplay.clearRegion = Region::INVALID_REGION;

    // Generate the client composition requests for the layers on this output.
    // Fill in clientCompositionLayers: the per-layer parameters
    std::vector clientCompositionLayers =
            generateClientCompositionRequests(supportsProtectedContent,
                                              clientCompositionDisplay.clearRegion,
                                              clientCompositionDisplay.outputDataspace);
    appendRegionFlashRequests(debugRegion, clientCompositionLayers);

    // Check if the client composition requests were rendered into the provided graphic buffer. If
    // so, we can reuse the buffer and avoid client composition.
    // If the cache shows this buffer already holds the same content, skip drawing it again
    if (mClientCompositionRequestCache) {
        if (mClientCompositionRequestCache->exists(buf->getId(), clientCompositionDisplay,
                                                   clientCompositionLayers)) {
            outputCompositionState.reusedClientComposition = true;
            setExpensiveRenderingExpected(false);
            return readyFence;
        }
        mClientCompositionRequestCache->add(buf->getId(), clientCompositionDisplay,
                                            clientCompositionLayers);
    }

    // We boost GPU frequency here because there will be color spaces conversion
    // or complex GPU shaders and it's expensive. We boost the GPU frequency so that
    // GPU composition can finish in time. We must reset GPU frequency afterwards,
    // because high frequency consumes extra battery.
    // Boost the GPU frequency when there are blurred layers or expensive color-space conversions
    const bool expensiveBlurs =
            refreshArgs.blursAreExpensive && mLayerRequestingBackgroundBlur != nullptr;
    const bool expensiveRenderingExpected =
            clientCompositionDisplay.outputDataspace == ui::Dataspace::DISPLAY_P3 || expensiveBlurs;
    if (expensiveRenderingExpected) {
        setExpensiveRenderingExpected(true);
    }

    // Collect pointers to the entries of clientCompositionLayers; the content is the same
    std::vector clientCompositionLayerPointers;
    clientCompositionLayerPointers.reserve(clientCompositionLayers.size());
    std::transform(clientCompositionLayers.begin(), clientCompositionLayers.end(),
                   std::back_inserter(clientCompositionLayerPointers),
                   [](LayerFE::LayerSettings& settings) -> renderengine::LayerSettings* {
                       return &settings;
                   });

    const nsecs_t renderEngineStart = systemTime();
    // GPU composition; the main logic lives in drawLayers
    status_t status =
            renderEngine.drawLayers(clientCompositionDisplay, clientCompositionLayerPointers,
                                    buf->getNativeBuffer(), /*useFramebufferCache=*/true,
                                    std::move(fd), &readyFence);
    ...
}

std::vector Output::generateClientCompositionRequests(
        bool supportsProtectedContent, Region& clearRegion, ui::Dataspace outputDataspace) {
    std::vector clientCompositionLayers;
    ALOGV("Rendering client layers");

    const auto& outputState = getState();
    const Region viewportRegion(outputState.viewport);
    const bool useIdentityTransform = false;
    bool firstLayer = true;
    // Used when a layer clears part of the buffer.
    Region dummyRegion;

    for (auto* layer : getOutputLayersOrderedByZ()) {
        const auto& layerState = layer->getState();
        const auto* layerFEState = layer->getLayerFE().getCompositionState();
        auto& layerFE = layer->getLayerFE();

        const Region clip(viewportRegion.intersect(layerState.visibleRegion));
        ALOGV("Layer: %s", layerFE.getDebugName());
        if (clip.isEmpty()) {
            ALOGV("  Skipping for empty clip");
            firstLayer = false;
            continue;
        }

        const bool clientComposition = layer->requiresClientComposition();

        // We clear the client target for non-client composed layers if
        // requested by the HWC. We skip this if the layer is not an opaque
        // rectangle, as by definition the layer must blend with whatever is
        // underneath. We also skip the first layer as the buffer target is
        // guaranteed to start out cleared.
        const bool clearClientComposition =
                layerState.clearClientTarget && layerFEState->isOpaque && !firstLayer;

        ALOGV("  Composition type: client %d clear %d", clientComposition, clearClientComposition);

        // If the layer casts a shadow but the content casting the shadow is occluded, skip
        // composing the non-shadow content and only draw the shadows.
        const bool realContentIsVisible = clientComposition &&
                !layerState.visibleRegion.subtract(layerState.shadowRegion).isEmpty();

        if (clientComposition || clearClientComposition) {
            compositionengine::LayerFE::ClientCompositionTargetSettings targetSettings{
                    clip,
                    useIdentityTransform,
                    layer->needsFiltering() || outputState.needsFiltering,
                    outputState.isSecure,
                    supportsProtectedContent,
                    clientComposition ? clearRegion : dummyRegion,
                    outputState.viewport,
                    outputDataspace,
                    realContentIsVisible,
                    !clientComposition, /* clearContent */
            };
            std::vector results =
                    layerFE.prepareClientCompositionList(targetSettings);
            if (realContentIsVisible && !results.empty()) {
                layer->editState().clientCompositionTimestamp = systemTime();
            }

            clientCompositionLayers.insert(clientCompositionLayers.end(),
                                           std::make_move_iterator(results.begin()),
                                           std::make_move_iterator(results.end()));
            results.clear();
        }

        firstLayer = false;
    }

    return clientCompositionLayers;
}
```

The input buffers are handed to RenderEngine through BufferLayer's prepareClientComposition function, as follows:

File: frameworks/native/services/surfaceflinger/BufferLayer.cpp

```cpp
std::optional<compositionengine::LayerFE::LayerSettings> BufferLayer::prepareClientComposition(
        compositionengine::LayerFE::ClientCompositionTargetSettings& targetSettings) {
    ATRACE_CALL();

    std::optional<compositionengine::LayerFE::LayerSettings> result =
            Layer::prepareClientComposition(targetSettings);
    ...
    const State& s(getDrawingState());
    // The buffer queued by the app
    layer.source.buffer.buffer = mBufferInfo.mBuffer;
    layer.source.buffer.isOpaque = isOpaque(s);
    // The acquire fence
    layer.source.buffer.fence = mBufferInfo.mFence;
    // Texture ID generated when the BufferQueueLayer was created
    layer.source.buffer.textureName = mTextureName;
    ...
}
```

At this point SurfaceFlinger has called into RenderEngine and handed over the display and output-layer information that GPU composition needs. Next, let's look at the drawLayers flow.

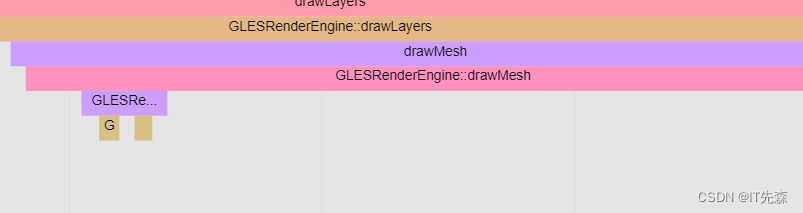

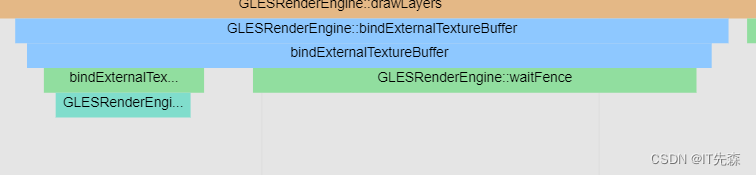
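The per-layer branching in `Output::generateClientCompositionRequests` shown earlier is easy to misread, so here is a minimal, hypothetical C++ sketch of only its decision logic. `LayerInput`, `Action`, `Decision` and `decideClientRequests` are illustrative names, not SurfaceFlinger APIs. The sketch records one decision per layer instead of building `LayerSettings`, and it keeps only the inputs the excerpt reads: the empty-clip check, `requiresClientComposition()`, `clearClientTarget`, `isOpaque` and `firstLayer`.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, simplified per-layer inputs (not the real OutputLayer state).
struct LayerInput {
    std::string name;
    bool clipEmpty;          // viewport ∩ visibleRegion is empty
    bool requiresClient;     // layer->requiresClientComposition()
    bool clearClientTarget;  // HWC asked to clear the client target under this layer
    bool isOpaque;           // layerFEState->isOpaque
};

enum class Action { Skip, Draw, Clear };

struct Decision {
    std::string name;
    Action action;
};

// Walks layers bottom-to-top (Z order) with the same branch structure as the
// excerpt: empty clips are skipped, client-composited layers are drawn, and
// opaque device-composited layers flagged by HWC get a "clear" request, except
// the bottom-most one, because the target buffer starts out cleared.
std::vector<Decision> decideClientRequests(const std::vector<LayerInput>& layersByZ) {
    std::vector<Decision> out;
    bool firstLayer = true;
    for (const auto& layer : layersByZ) {
        if (layer.clipEmpty) {
            out.push_back({layer.name, Action::Skip});
            firstLayer = false;  // the excerpt also clears firstLayer here
            continue;
        }
        const bool clearClientComposition =
                layer.clearClientTarget && layer.isOpaque && !firstLayer;
        if (layer.requiresClient) {
            out.push_back({layer.name, Action::Draw});  // clearContent == !clientComposition == false
        } else if (clearClientComposition) {
            out.push_back({layer.name, Action::Clear});
        } else {
            out.push_back({layer.name, Action::Skip});  // no request is generated
        }
        firstLayer = false;
    }
    return out;
}
```

A layer that needs client composition is always drawn, even if HWC also asked for a clear. In the excerpt `clearContent` is `!clientComposition`, so a clear only ever applies to device-composited layers.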

5.2 GLESRenderEngine::drawLayers

File: frameworks/native/libs/renderengine/gl/GLESRenderEngine.cpp

```cpp
status_t GLESRenderEngine::drawLayers(const DisplaySettings& display,
                                      const std::vector<const LayerSettings*>& layers,
                                      ANativeWindowBuffer* const buffer,
                                      const bool useFramebufferCache, base::unique_fd&& bufferFence,
                                      base::unique_fd* drawFence) {
    ATRACE_CALL();
    if (layers.empty()) {
        ALOGV("Drawing empty layer stack");
        return NO_ERROR;
    }

    // Wait for the previous frame's release fence on the output buffer
    if (bufferFence.get() >= 0) {
        // Duplicate the fence for passing to waitFence.
        base::unique_fd bufferFenceDup(dup(bufferFence.get()));
        if (bufferFenceDup ...
    ...

...
                setNativeWindowBuffer(buffer, mEngine.isProtected(), useFramebufferCache)
                ? mEngine.bindFrameBuffer(mFramebuffer)
                : NO_MEMORY;
    }
    ~BindNativeBufferAsFramebuffer() {
        mFramebuffer->setNativeWindowBuffer(nullptr, false, /*arbitrary*/ true);
        mEngine.unbindFrameBuffer(mFramebuffer);
    }
    status_t getStatus() const { return mStatus; }

private:
    RenderEngine& mEngine;
    Framebuffer* mFramebuffer;
    status_t mStatus;
};
```

File: frameworks/native/libs/renderengine/gl/GLFramebuffer.cpp

```cpp
bool GLFramebuffer::setNativeWindowBuffer(ANativeWindowBuffer* nativeBuffer, bool isProtected,
                                          const bool useFramebufferCache) {
    ATRACE_CALL();
    if (mEGLImage != EGL_NO_IMAGE_KHR) {
        if (!usingFramebufferCache) {
            eglDestroyImageKHR(mEGLDisplay, mEGLImage);
            DEBUG_EGL_IMAGE_TRACKER_DESTROY();
        }
        mEGLImage = EGL_NO_IMAGE_KHR;
        mBufferWidth = 0;
        mBufferHeight = 0;
    }

    if (nativeBuffer) {
        mEGLImage = mEngine.createFramebufferImageIfNeeded(nativeBuffer, isProtected,
                                                           useFramebufferCache);
        if (mEGLImage == EGL_NO_IMAGE_KHR) {
            return false;
        }
        usingFramebufferCache = useFramebufferCache;
        mBufferWidth = nativeBuffer->width;
        mBufferHeight = nativeBuffer->height;
    }
    return true;
}
```

File: frameworks/native/libs/renderengine/gl/GLESRenderEngine.cpp

```cpp
EGLImageKHR GLESRenderEngine::createFramebufferImageIfNeeded(ANativeWindowBuffer* nativeBuffer,
                                                             bool isProtected,
                                                             bool useFramebufferCache) {
    // Type conversion: wrap the ANativeWindowBuffer as a GraphicBuffer
    sp<GraphicBuffer> graphicBuffer = GraphicBuffer::from(nativeBuffer);
    // With the cache enabled, return the existing image if this buffer already has one
    if (useFramebufferCache) {
        std::lock_guard lock(mFramebufferImageCacheMutex);
        for (const auto& image : mFramebufferImageCache) {
            if (image.first == graphicBuffer->getId()) {
                return image.second;
            }
        }
    }
    EGLint attributes[] = {
            isProtected ? EGL_PROTECTED_CONTENT_EXT : EGL_NONE,
            isProtected ? EGL_TRUE : EGL_NONE,
            EGL_NONE,
    };
    // Create an EGLImage from the dequeued buffer
    EGLImageKHR image = eglCreateImageKHR(mEGLDisplay, EGL_NO_CONTEXT, EGL_NATIVE_BUFFER_ANDROID,
                                          nativeBuffer, attributes);
    if (useFramebufferCache) {
        if (image != EGL_NO_IMAGE_KHR) {
            std::lock_guard lock(mFramebufferImageCacheMutex);
            if (mFramebufferImageCache.size() >= mFramebufferImageCacheSize) {
                EGLImageKHR expired = mFramebufferImageCache.front().second;
                mFramebufferImageCache.pop_front();
                eglDestroyImageKHR(mEGLDisplay, expired);
                DEBUG_EGL_IMAGE_TRACKER_DESTROY();
            }
            // Add the new image to mFramebufferImageCache
            mFramebufferImageCache.push_back({graphicBuffer->getId(), image});
        }
    }

    if (image != EGL_NO_IMAGE_KHR) {
        DEBUG_EGL_IMAGE_TRACKER_CREATE();
    }
    return image;
}

status_t GLESRenderEngine::bindFrameBuffer(Framebuffer* framebuffer) {
    ATRACE_CALL();
    GLFramebuffer* glFramebuffer = static_cast<GLFramebuffer*>(framebuffer);
    // The EGLImage created in the previous step
    EGLImageKHR eglImage = glFramebuffer->getEGLImage();
    // Texture ID and framebuffer ID that were already created along with RenderEngine
    uint32_t textureName = glFramebuffer->getTextureName();
    uint32_t framebufferName = glFramebuffer->getFramebufferName();

    // Bind the texture and turn our EGLImage into a texture
    // (subsequent texture calls operate on this binding)
    glBindTexture(GL_TEXTURE_2D, textureName);
    // Use the EGLImage as the storage of this 2D texture
    glEGLImageTargetTexture2DOES(GL_TEXTURE_2D, (GLeglImageOES)eglImage);

    // Bind the Framebuffer to render into
    glBindFramebuffer(GL_FRAMEBUFFER, framebufferName);
    // Attach the texture to the framebuffer so that rendering lands in the buffer
    glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, textureName, 0);

    uint32_t glStatus = glCheckFramebufferStatus(GL_FRAMEBUFFER);
    ALOGE_IF(glStatus != GL_FRAMEBUFFER_COMPLETE_OES, "glCheckFramebufferStatusOES error %d",
             glStatus);

    return glStatus == GL_FRAMEBUFFER_COMPLETE_OES ? NO_ERROR : BAD_VALUE;
}
```

First, the dequeued output buffer is wrapped in an EGLImage with eglCreateImageKHR. glEGLImageTargetTexture2DOES then makes that image the storage of a 2D texture, and glFramebufferTexture2D attaches the texture to the framebuffer as its color attachment. After that, setViewportAndProjection sets the viewport and the projection matrix.
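The `mFramebufferImageCache` handling in `createFramebufferImageIfNeeded` behaves as a bounded FIFO keyed by `GraphicBuffer` ID: a lookup hit returns the existing image, a miss creates one, and when the cache is full the oldest entry is destroyed. The sketch below models only that policy. It assumes an `int` can stand in for `EGLImageKHR` and that image creation never fails; `FramebufferImageCache` and `FakeImage` are hypothetical names, and no EGL calls are made.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <utility>
#include <vector>

// Hypothetical stand-in for EGLImageKHR.
using FakeImage = int;

class FramebufferImageCache {
public:
    explicit FramebufferImageCache(size_t capacity) : mCapacity(capacity) {}

    // Same lookup / create / evict-front shape as the excerpt when
    // useFramebufferCache is true. A hit does not reorder entries (FIFO, not LRU).
    FakeImage getOrCreate(uint64_t bufferId) {
        for (const auto& entry : mEntries) {
            if (entry.first == bufferId) {
                return entry.second;  // cache hit: reuse the image
            }
        }
        FakeImage image = mNextImage++;  // stands in for eglCreateImageKHR
        if (mEntries.size() >= mCapacity) {
            mDestroyed.push_back(mEntries.front().second);  // stands in for eglDestroyImageKHR
            mEntries.pop_front();
        }
        mEntries.push_back({bufferId, image});
        return image;
    }

    int created() const { return mNextImage; }
    const std::vector<FakeImage>& destroyed() const { return mDestroyed; }

private:
    size_t mCapacity;
    std::deque<std::pair<uint64_t, FakeImage>> mEntries;
    std::vector<FakeImage> mDestroyed;
    FakeImage mNextImage = 0;
};
```

In this model, a capacity smaller than the number of buffers cycling through the FramebufferSurface queue (three in the dumpsys output in section one) misses on every frame and creates a new image each time. The cache only pays off when it can hold every buffer in the rotation.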

File: frameworks/native/libs/renderengine/gl/GLESRenderEngine.cpp

```cpp
status_t GLESRenderEngine::drawLayers(const DisplaySettings& display,
                                      const std::vector<const LayerSettings*>& layers,
                                      ANativeWindowBuffer* const buffer,
                                      const bool useFramebufferCache, base::unique_fd&& bufferFence,
                                      base::unique_fd* drawFence) {
    ...
    // Set the sizes of the vertex and texture coordinates
    Mesh mesh = Mesh::Builder()
                        .setPrimitive(Mesh::TRIANGLE_FAN)
                        .setVertices(4 /* count */, 2 /* size */)
                        .setTexCoords(2 /* size */)
                        .setCropCoords(2 /* size */)
                        .build();
    for (auto const layer : layers) {
        // Iterate over the output layers
        ...
        // Get the layer bounds
        const FloatRect bounds = layer->geometry.boundaries;
        Mesh::VertexArray<vec2> position(mesh.getPositionArray<vec2>());
        // Set the vertex coordinates, counterclockwise
        position[0] = vec2(bounds.left, bounds.top);
        position[1] = vec2(bounds.left, bounds.bottom);
        position[2] = vec2(bounds.right, bounds.bottom);
        position[3] = vec2(bounds.right, bounds.top);
        // Set the crop coordinates
        setupLayerCropping(*layer, mesh);
        // Set the color matrix
        setColorTransform(display.colorTransform * layer->colorTransform);
        ...
        // Buffer-related setup
        if (layer->source.buffer.buffer != nullptr) {
            disableTexture = false;
            isOpaque = layer->source.buffer.isOpaque;
            // The layer's buffer, i.e. the input buffer
            sp<GraphicBuffer> gBuf = layer->source.buffer.buffer;
            // textureName was generated when the BufferQueueLayer was created and identifies
            // this layer; fence is the acquire fence
            bindExternalTextureBuffer(layer->source.buffer.textureName, gBuf,
                                      layer->source.buffer.fence);
            ...
            // Set the texture coordinates, also counterclockwise
            renderengine::Mesh::VertexArray<vec2> texCoords(mesh.getTexCoordArray<vec2>());
            texCoords[0] = vec2(0.0, 0.0);
            texCoords[1] = vec2(0.0, 1.0);
            texCoords[2] = vec2(1.0, 1.0);
            texCoords[3] = vec2(1.0, 0.0);
            // Set the texture parameters (glTexParameteri)
            setupLayerTexturing(texture);
        }
    ...

status_t GLESRenderEngine::bindExternalTextureBuffer(uint32_t texName,
                                                     const sp<GraphicBuffer>& buffer,
                                                     const sp<Fence>& bufferFence) {
    if (buffer == nullptr) {
        return BAD_VALUE;
    }

    ATRACE_CALL();

    bool found = false;
    {
        // Look in mImageCache for the same buffer
        std::lock_guard lock(mRenderingMutex);
        auto cachedImage = mImageCache.find(buffer->getId());
        found = (cachedImage != mImageCache.end());
    }

    // If we couldn't find the image in the cache at this time, then either
    // SurfaceFlinger messed up registering the buffer ahead of time or we got
    // backed up creating other EGLImages.
    if (!found) {
        // Not in mImageCache: a new EGLImage has to be created. Input EGLImages are
        // created on the ImageManager thread, which is woken up via notify.
        status_t cacheResult = mImageManager->cache(buffer);
        if (cacheResult != NO_ERROR) {
            return cacheResult;
        }
    }
    ...
        // Turn the EGLImage into a texture of type GL_TEXTURE_EXTERNAL_OES
        bindExternalTextureImage(texName, *cachedImage->second);
        mTextureView.insert_or_assign(texName, buffer->getId());
    }
}

void GLESRenderEngine::bindExternalTextureImage(uint32_t texName, const Image& image) {
    ATRACE_CALL();
    const GLImage& glImage = static_cast<const GLImage&>(image);
    const GLenum target = GL_TEXTURE_EXTERNAL_OES;

    // Bind the texture whose ID is texName
    glBindTexture(target, texName);
    if (glImage.getEGLImage() != EGL_NO_IMAGE_KHR) {
        // Back texture texName with the EGLImage
        glEGLImageTargetTexture2DOES(target, static_cast<GLeglImageOES>(glImage.getEGLImage()));
    }
}
```

At this point both the input buffers and the output buffer have corresponding textures, and the texture and vertex coordinates are set. What remains is drawing with shaders.
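To make the vertex and texture-coordinate setup concrete, the sketch below rebuilds the four-vertex `TRIANGLE_FAN` quad from a layer's bounds, using the same corner order as the excerpt: top-left, bottom-left, bottom-right, top-right. That order is counterclockwise on screen, where y grows downward. `Vec2`, `FloatRect`, `Quad`, `buildLayerQuad` and `fanArea` are simplified stand-ins, not the renderengine types. `fanArea` only checks that the two triangles a fan produces, (v0, v1, v2) and (v0, v2, v3), cover the whole rectangle.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical simplified types (not renderengine's vec2 / FloatRect / Mesh).
struct Vec2 { float x, y; };
struct FloatRect { float left, top, right, bottom; };

struct Quad {
    std::array<Vec2, 4> position;   // screen-space corners of the layer
    std::array<Vec2, 4> texCoords;  // where each corner samples the layer buffer
};

// Fills the quad in the same order as the drawLayers excerpt.
Quad buildLayerQuad(const FloatRect& bounds) {
    Quad q{};
    q.position[0] = {bounds.left, bounds.top};
    q.position[1] = {bounds.left, bounds.bottom};
    q.position[2] = {bounds.right, bounds.bottom};
    q.position[3] = {bounds.right, bounds.top};
    // The whole buffer is sampled: [0,1] x [0,1]
    q.texCoords[0] = {0.0f, 0.0f};
    q.texCoords[1] = {0.0f, 1.0f};
    q.texCoords[2] = {1.0f, 1.0f};
    q.texCoords[3] = {1.0f, 0.0f};
    return q;
}

// Sum of the areas of the fan triangles (v0, vi, vi+1), via the 2D cross product.
double fanArea(const Quad& q) {
    double total = 0.0;
    for (int i = 1; i + 1 < 4; ++i) {
        const Vec2& a = q.position[0];
        const Vec2& b = q.position[i];
        const Vec2& c = q.position[i + 1];
        const double cross = (double(b.x) - a.x) * (double(c.y) - a.y) -
                (double(b.y) - a.y) * (double(c.x) - a.x);
        total += std::fabs(cross) * 0.5;
    }
    return total;
}
```

Because vertex i is paired with texture coordinate i, the top-left of the buffer lands on the top-left of the layer. Any crop or transform is applied separately, through `setupLayerCropping` and the texture matrix.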

File: frameworks/native/libs/renderengine/gl/GLESRenderEngine.cpp

```cpp
void GLESRenderEngine::drawMesh(const Mesh& mesh) {
    ATRACE_CALL();
    if (mesh.getTexCoordsSize()) {
        // Enable the vertex attribute array so the vertex shader can read this attribute
        glEnableVertexAttribArray(Program::texCoords);
        // Pass the texture coordinates to the vertex shader
        glVertexAttribPointer(Program::texCoords, mesh.getTexCoordsSize(), GL_FLOAT, GL_FALSE,
                              mesh.getByteStride(), mesh.getTexCoords());
    }

    // Pass the vertex positions to the vertex shader
    glVertexAttribPointer(Program::position, mesh.getVertexSize(), GL_FLOAT, GL_FALSE,
                          mesh.getByteStride(), mesh.getPositions());
    ...
    // Create the vertex and fragment shaders, and pass the vertex attributes and
    // uniform parameters to them
    ProgramCache::getInstance().useProgram(mInProtectedContext ? mProtectedEGLContext : mEGLContext,
                                           managedState);
    ...
    // Ask the GPU to draw
    glDrawArrays(mesh.getPrimitive(), 0, mesh.getVertexCount());
    ...
}
```

File: frameworks/native/libs/renderengine/gl/ProgramCache.cpp

```cpp
void ProgramCache::useProgram(EGLContext context, const Description& description) {
    // Compute the key; different keys produce different shaders
    Key needs(computeKey(description));

    // look-up the program in the cache
    auto& cache = mCaches[context];
    auto it = cache.find(needs);
    if (it == cache.end()) {
        // we didn't find our program, so generate one...
        nsecs_t time = systemTime();
        // No matching program in the cache, so create a new one
        it = cache.emplace(needs, generateProgram(needs)).first;
        time = systemTime() - time;

        ALOGV(">>> generated new program for context %p: needs=%08X, time=%u ms (%zu programs)",
              context, needs.mKey, uint32_t(ns2ms(time)), cache.size());
    }

    // here we have a suitable program for this description
    std::unique_ptr<Program>& program = it->second;
    if (program->isValid()) {
        program->use();
        program->setUniforms(description);
    }
}

std::unique_ptr<Program> ProgramCache::generateProgram(const Key& needs) {
    ATRACE_CALL();

    // Generate the vertex shader source
    String8 vs = generateVertexShader(needs);

    // Generate the fragment shader source
    String8 fs = generateFragmentShader(needs);

    // Compile and link the shaders
    return std::make_unique<Program>(needs, vs.string(), fs.string());
}

String8 ProgramCache::generateVertexShader(const Key& needs) {
    Formatter vs;
    if (needs.hasTextureCoords()) {
        // "attribute" variables are fed through glVertexAttribPointer;
        // "varying" variables are outputs passed on to the fragment shader
        vs ...
    ...

    Formatter fs;
    if (needs.getTextureTarget() == Key::TEXTURE_EXT) {
        fs ...
    ...
    // Output the pixel's color value
    fs ...
    ...

    // Compile the vertex and fragment shaders
    GLuint vertexId = buildShader(vertex, GL_VERTEX_SHADER);
    GLuint fragmentId = buildShader(fragment, GL_FRAGMENT_SHADER);
    // Create the program object
    GLuint programId = glCreateProgram();
    // Attach the vertex and fragment shaders to the program
    glAttachShader(programId, vertexId);
    glAttachShader(programId, fragmentId);
    // Bind the shader attribute names to our fixed attribute indices
    glBindAttribLocation(programId, position, "position");
    glBindAttribLocation(programId, texCoords, "texCoords");
    glBindAttribLocation(programId, cropCoords, "cropCoords");
    glBindAttribLocation(programId, shadowColor, "shadowColor");
    glBindAttribLocation(programId, shadowParams, "shadowParams");
    glLinkProgram(programId);

    GLint status;
    glGetProgramiv(programId, GL_LINK_STATUS, &status);
    ...
        mProgram = programId;
        mVertexShader = vertexId;
        mFragmentShader = fragmentId;
        mInitialized = true;
        // Query the locations of the uniform variables in the shaders
        mProjectionMatrixLoc = glGetUniformLocation(programId, "projection");
        mTextureMatrixLoc = glGetUniformLocation(programId, "texture");
        ...
        // set-up the default values for our uniforms
        glUseProgram(programId);
        glUniformMatrix4fv(mProjectionMatrixLoc, 1, GL_FALSE, mat4().asArray());
        glEnableVertexAttribArray(0);
}

void Program::use() {
    // Make this program current
    glUseProgram(mProgram);
}

void Program::setUniforms(const Description& desc) {
    // TODO: we should have a mechanism here to not always reset uniforms that
    // didn't change for this program.

    // Set each uniform at its queried location, passing the values into the shader
    if (mSamplerLoc >= 0) {
        glUniform1i(mSamplerLoc, 0);
        glUniformMatrix4fv(mTextureMatrixLoc, 1, GL_FALSE, desc.texture.getMatrix().asArray());
    }
    ...
    glUniformMatrix4fv(mProjectionMatrixLoc, 1, GL_FALSE, desc.projectionMatrix.asArray());
}
```

```cpp
...
    ATRACE_CALL();
    if (!GLExtensions::getInstance().hasNativeFenceSync()) {
        return base::unique_fd();
    }

    // Create an EGLSync object that signals when the GPU has finished drawing
    EGLSyncKHR sync = eglCreateSyncKHR(mEGLDisplay, EGL_SYNC_NATIVE_FENCE_ANDROID, nullptr);
    if (sync == EGL_NO_SYNC_KHR) {
        ALOGW("failed to create EGL native fence sync: %#x", eglGetError());
        return base::unique_fd();
    }

    // native fence fd will not be populated until flush() is done.
    // Flush all pending GL commands to the GPU
    glFlush();

    // get the fence fd
    // (obtain the fence fd used on the Android side)
    base::unique_fd fenceFd(eglDupNativeFenceFDANDROID(mEGLDisplay, sync));
    eglDestroySyncKHR(mEGLDisplay, sync);
    if (fenceFd == EGL_NO_NATIVE_FENCE_FD_ANDROID) {
        ALOGW("failed to dup EGL native fence sync: %#x", eglGetError());
    }

    // Only trace if we have a valid fence, as current usage falls back to
    // calling finish() if the fence fd is invalid.
    if (CC_UNLIKELY(mTraceGpuCompletion && mFlushTracer) && fenceFd.get() >= 0) {
        mFlushTracer->queueSync(eglCreateSyncKHR(mEGLDisplay, EGL_SYNC_FENCE_KHR, nullptr));
    }

    return fenceFd;
}
```

```cpp
...
    ATRACE_CALL();
    ALOGV(__FUNCTION__);

    if (!getState().isEnabled) {
        return;
    }

    // Repaint the framebuffer (if needed), getting the optional fence for when
    // the composition completes.
    auto optReadyFence = composeSurfaces(Region::INVALID_REGION, refreshArgs);
    if (!optReadyFence) {
        return;
    }

    // swap buffers (presentation)
    mRenderSurface->queueBuffer(std::move(*optReadyFence));
}
```

File: frameworks/native/services/surfaceflinger/CompositionEngine/src/RenderSurface.cpp

```cpp
void RenderSurface::queueBuffer(base::unique_fd readyFence) {
    auto& state = mDisplay.getState();
    ...
        if (mGraphicBuffer == nullptr) {
            ALOGE("No buffer is ready for display [%s]", mDisplay.getName().c_str());
        } else {
            status_t result =
                    // mGraphicBuffer->getNativeBuffer() is the GPU's output buffer,
                    // i.e. the buffer the GPU composited into
                    mNativeWindow->queueBuffer(mNativeWindow.get(),
                                               mGraphicBuffer->getNativeBuffer(), dup(readyFence));
            if (result != NO_ERROR) {
                ALOGE("Error when queueing buffer for display [%s]: %d", mDisplay.getName().c_str(),
                      result);
                // We risk blocking on dequeueBuffer if the primary display failed
                // to queue up its buffer, so crash here.
                if (!mDisplay.isVirtual()) {
                    LOG_ALWAYS_FATAL("ANativeWindow::queueBuffer failed with error: %d", result);
                } else {
                    mNativeWindow->cancelBuffer(mNativeWindow.get(),
                                                mGraphicBuffer->getNativeBuffer(), dup(readyFence));
                }
            }

            mGraphicBuffer = nullptr;
        }
    }

    // Consume the buffer
    status_t result = mDisplaySurface->advanceFrame();
    if (result != NO_ERROR) {
        ALOGE("[%s] failed pushing new frame to HWC: %d", mDisplay.getName().c_str(), result);
    }
}
```

File: frameworks/native/services/surfaceflinger/DisplayHardware/FramebufferSurface.cpp

```cpp
status_t FramebufferSurface::advanceFrame() {
    uint32_t slot = 0;
    ...
        ALOGE("error latching next FramebufferSurface buffer: %s (%d)", strerror(-result), result);
    }
    return result;
}

status_t FramebufferSurface::nextBuffer(uint32_t& outSlot, ...
    Mutex::Autolock lock(mMutex);

    BufferItem item;
    // Acquire the buffer
    status_t err = acquireBufferLocked(&item, 0);
    ...
    if (mCurrentBufferSlot != BufferQueue::INVALID_BUFFER_SLOT &&
        item.mSlot != mCurrentBufferSlot) {
        mHasPendingRelease = true;
        mPreviousBufferSlot = mCurrentBufferSlot;
        mPreviousBuffer = mCurrentBuffer;
    }
    // Update the current buffer and fence
    mCurrentBufferSlot = item.mSlot;
    mCurrentBuffer = mSlots[mCurrentBufferSlot].mGraphicBuffer;
    mCurrentFence = item.mFence;

    outFence = item.mFence;
    mHwcBufferCache.getHwcBuffer(mCurrentBufferSlot, mCurrentBuffer, &outSlot, &outBuffer);
    outDataspace = static_cast ...
        ALOGE("error posting framebuffer: %d", result);
        return result;
    }

    return NO_ERROR;
}
```

```cpp
...
    ATRACE_CALL();
    ALOGV(__FUNCTION__);
    ...
    auto frame = presentAndGetFrameFences();

    mRenderSurface->onPresentDisplayCompleted();
    ...
}
```

File: frameworks/native/services/surfaceflinger/DisplayHardware/HWComposer.cpp

```cpp
status_t HWComposer::presentAndGetReleaseFences(DisplayId displayId) {
    ATRACE_CALL();

    RETURN_IF_INVALID_DISPLAY(displayId, BAD_INDEX);

    auto& displayData = mDisplayData[displayId];
    auto& hwcDisplay = displayData.hwcDisplay;
    ...
    // With GPU composition, call present here; it returns the present fence
    auto error = hwcDisplay->present(&displayData.lastPresentFence);
    RETURN_IF_HWC_ERROR_FOR("present", error, displayId, UNKNOWN_ERROR);

    std::unordered_map ...
```

```cpp
...
    if (mHasPendingRelease) {
        ...
            // Update the fence of the buffer slot
            status_t result = addReleaseFence(mPreviousBufferSlot, mPreviousBuffer, fence);
            ALOGE_IF(result != NO_ERROR,
                     "onFrameCommitted: failed to add the"
                     " fence: %s (%d)",
                     strerror(-result), result);
        }
        // Release the previous buffer
        status_t result = releaseBufferLocked(mPreviousBufferSlot, mPreviousBuffer);
        ALOGE_IF(result != NO_ERROR,
                 "onFrameCommitted: error releasing buffer:"
                 " %s (%d)",
                 strerror(-result), result);

        mPreviousBuffer.clear();
        mHasPendingRelease = false;
    }
}
```
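`ProgramCache::useProgram` is essentially a memoized shader generator. It keeps one cache per `EGLContext` (`mCaches[context]`), keyed by the feature bits computed from the `Description`. The C++ sketch below assumes a two-bit key and an `int` context handle. `FakeProgramCache`, `KeyBits`, `FakeContext` and the generated shader text are illustrative only; they do not reproduce AOSP's `computeKey` or `generateFragmentShader`, and nothing is compiled. The sketch shows only that a program is generated once per (context, key) pair and then reused.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>
#include <string>

// Hypothetical feature bits; the real ProgramCache::Key encodes many more properties.
enum KeyBits : uint32_t {
    kTextureExt = 1u << 0,  // sample an EGLImage-backed GL_TEXTURE_EXTERNAL_OES texture
    kOpaque = 1u << 1,      // ignore the source alpha
};

using FakeContext = int;  // stands in for EGLContext

struct FakeProgram {
    std::string fragmentSource;  // stands in for a compiled and linked GL program
};

class FakeProgramCache {
public:
    // Looks up (context, key) and generates a program only on a miss, like useProgram.
    const FakeProgram& useProgram(FakeContext context, uint32_t key) {
        auto& cache = mCaches[context];  // one cache per context
        auto it = cache.find(key);
        if (it == cache.end()) {
            ++mGenerated;
            it = cache.emplace(key, generateProgram(key)).first;
        }
        return *it->second;
    }

    int generatedCount() const { return mGenerated; }

private:
    // Builds illustrative fragment-shader text from the key bits.
    static std::unique_ptr<FakeProgram> generateProgram(uint32_t key) {
        auto program = std::make_unique<FakeProgram>();
        std::string& fs = program->fragmentSource;
        if (key & kTextureExt) {
            fs += "#extension GL_OES_EGL_image_external : require\n";
            fs += "uniform samplerExternalOES sampler;\n";
        } else {
            fs += "uniform sampler2D sampler;\n";
        }
        fs += "varying vec2 vTexCoord;\n";
        fs += "void main() {\n";
        fs += "    gl_FragColor = texture2D(sampler, vTexCoord);\n";
        if (key & kOpaque) {
            fs += "    gl_FragColor.a = 1.0;\n";
        }
        fs += "}\n";
        return program;
    }

    std::map<FakeContext, std::map<uint32_t, std::unique_ptr<FakeProgram>>> mCaches;
    int mGenerated = 0;
};
```

Repeated draws with the same layer properties hit the cache, so shader compilation cost is paid only the first time a combination appears. As with `mCaches[context]` in the excerpt, the same key used from a second context generates a second program.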

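The slot bookkeeping in `FramebufferSurface::nextBuffer`, together with the release code at the end of the excerpt that runs after present, means the buffer being replaced is not released when the next one is acquired. It is released only once the following frame has been committed. The sketch below models that with plain slot numbers. `FakeFramebufferSurface` is hypothetical, and fences, `GraphicBuffer` objects and the HWC buffer cache are left out.

```cpp
#include <cassert>
#include <vector>

// Hypothetical model of FramebufferSurface's pending-release bookkeeping.
class FakeFramebufferSurface {
public:
    static constexpr int kInvalidSlot = -1;

    // Mirrors nextBuffer(): acquire the newly queued slot and remember the
    // previously current one as "pending release" if it differs.
    void nextBuffer(int acquiredSlot) {
        if (mCurrentSlot != kInvalidSlot && acquiredSlot != mCurrentSlot) {
            mHasPendingRelease = true;
            mPreviousSlot = mCurrentSlot;
        }
        mCurrentSlot = acquiredSlot;
    }

    // Mirrors the post-present release: only now is the previous slot handed back.
    void onFrameCommitted() {
        if (mHasPendingRelease) {
            mReleased.push_back(mPreviousSlot);  // stands in for releaseBufferLocked
            mHasPendingRelease = false;
        }
    }

    int currentSlot() const { return mCurrentSlot; }
    const std::vector<int>& released() const { return mReleased; }

private:
    int mCurrentSlot = kInvalidSlot;
    int mPreviousSlot = kInvalidSlot;
    bool mHasPendingRelease = false;
    std::vector<int> mReleased;
};
```

Holding the previous buffer until the next frame is committed gives HWC time to finish reading it. In the real code, the release also carries a fence through `addReleaseFence`, so the producer side (RenderSurface's next dequeue) can wait on it before writing into that buffer again.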